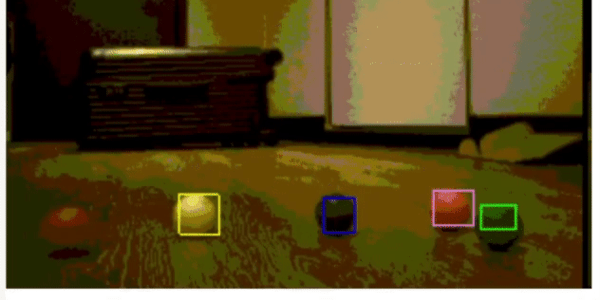

For Nintendo aficionados of the '90s, the Super Game Boy was a must-have cartridge for the Super Nintendo that let gamers play Game Boy games on their TVs. Not only did it bring four-color dot-matrix gaming to the big screen, it also let you play your favorite Game Boy titles without spending a fortune on AA batteries. While later handhelds like the PSP or even the Nintendo Switch can output video directly to TVs without issue, the original Game Boy needed processing help from an SNES or, as [Andy West] shows us, from a modern microcontroller.

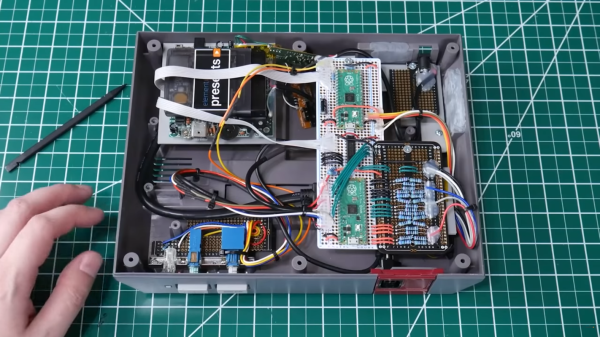

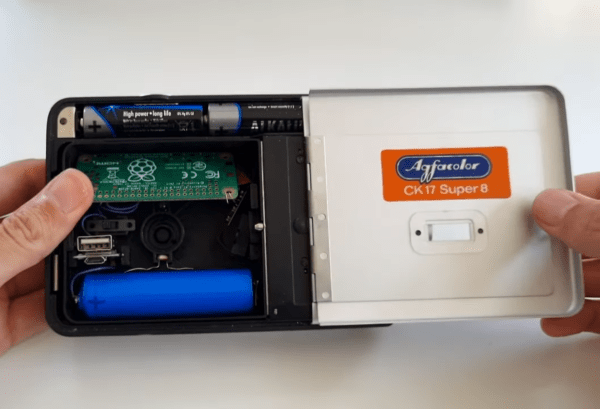

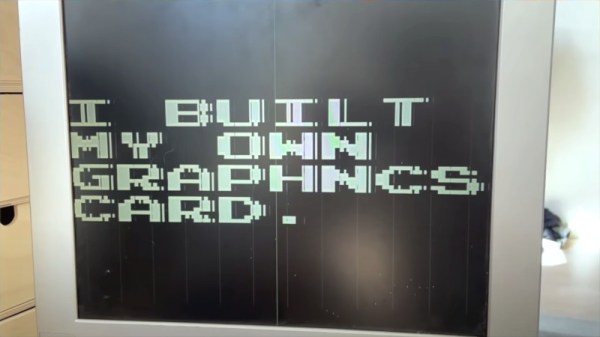

The extra processing power in this case comes from a Raspberry Pi Pico, which is small enough to fit easily inside a donor NES case and powerful enough to generate the VGA signal directly. For video input, the Pico is connected to the video pins on the Game Boy’s main board through a level shifter. The main board is also connected to a second Pico, which handles input from an NES controller. Some fancy conversion was needed at this point because, although the controller layouts are very similar, they are handled completely differently by the respective consoles.
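To give a feel for that controller conversion, here is a minimal sketch of the logic involved. It is not [Andy]'s firmware, just an illustration under stated assumptions. It assumes a standard NES pad, which shifts out eight active-low bits in the order A, B, Select, Start, Up, Down, Left, Right, and the Game Boy's joypad matrix, where two select lines (P14 for the D-pad, P15 for the buttons) share four active-low output lines (P10 to P13). The function names `decode_nes` and `gb_output_nibble` are hypothetical, and the actual pin wiggling on the Pico is left out entirely.

```python
# Button order as shifted out by a standard NES controller (first bit first).
NES_ORDER = ("A", "B", "Select", "Start", "Up", "Down", "Left", "Right")

# Game Boy joypad matrix: both rows share the four output lines P10..P13.
GB_DPAD_ROW = ("Right", "Left", "Up", "Down")    # read when P14 is pulled low
GB_BUTTON_ROW = ("A", "B", "Select", "Start")    # read when P15 is pulled low


def decode_nes(bits):
    """Turn 8 serially read NES bits (active low: 0 = pressed) into a set of names."""
    if len(bits) != 8:
        raise ValueError("expected exactly 8 bits from the NES shift register")
    return {name for name, bit in zip(NES_ORDER, bits) if bit == 0}


def gb_output_nibble(pressed, p14_low, p15_low):
    """Return the 4-bit value to present on P10..P13 (bit 0 = P10), active low."""
    nibble = 0b1111  # all lines high means nothing is pressed
    for selected, row in ((p14_low, GB_DPAD_ROW), (p15_low, GB_BUTTON_ROW)):
        if not selected:
            continue
        for line, name in enumerate(row):
            if name in pressed:
                nibble &= ~(1 << line) & 0b1111  # pull that line low
    return nibble


# Example: the NES pad reports A and Left held down.
pressed = decode_nes([0, 1, 1, 1, 1, 1, 0, 1])
dpad_view = gb_output_nibble(pressed, p14_low=True, p15_low=False)
button_view = gb_output_nibble(pressed, p14_low=False, p15_low=True)
```

The key difference this highlights is that the NES pad is polled serially by the console, while the Game Boy expects the button state to appear on shared lines depending on which row it has selected, so the microcontroller in the middle has to hold the last decoded state and answer whichever row the Game Boy asks for.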

With the technical work largely out of the way, [Andy] was able to put the finishing touches on the build. These included making sure the power button, status LEDs, and reset button all functioned, and restoring the NES case complete with some custom “Game Guy” graphics to match the original design of the Game Boy. We commend the use of original Game Boy hardware in this build as well, which even made it possible for [Andy] and his wife to play a head-to-head game of Dr. Mario through a link cable with another Game Boy. If you’re looking for a simpler way of playing on original hardware without burning a hole in your wallet buying AA batteries, take a look at this Game Boy restoration which uses a lithium battery instead.
