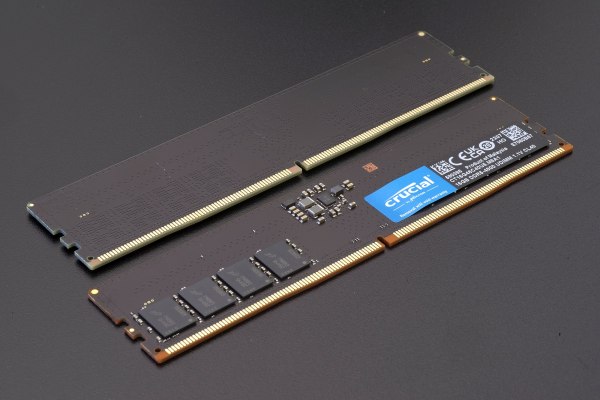

As DDR SDRAM increases in density and speed, so too do new challenges and opportunities appear. In the recent DDR5 update by JEDEC – as reported by Anandtech – we see not only a big speed increase from the previous maximum of 6800 Mbps to 8800 Mbps, but also the deprecation of Partial Array Self Refresh (PASR) due to security concerns, and the introduction of Per-Row Activation Counting (PRAC), which should help with row hammer-related (security) implications.

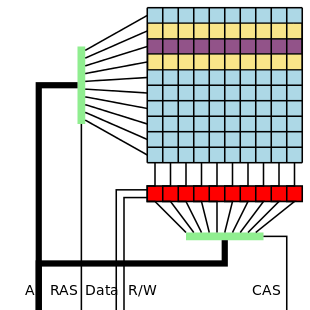

Increasing transfer speeds is primarily a matter of timings within the limits set by the overall design of DDR5, while the changes to features like PASR and PRAC are more fundamental. PASR is mostly a power-saving feature, but can apparently be abused for nefarious means, which is why it’s now gone. As for PRAC, this directly addresses the issue of row hammer attacks. Back in the 2014-era of DDR3, row hammer was mostly regarded as a way to corrupt data in RAM, but later it was found to be also a way to compromise security and effect exploits like privilege escalation.

The way PRAC seeks to prevent this is by keeping track of how often a row is being accessed, with a certain limit after which neighboring memory cells get a chance to recover from the bleed-over that is at the core of row hammer attacks. All of which means that theoretically new DDR5 RAM and memory controllers should be even faster and more secure, which is good news all around.