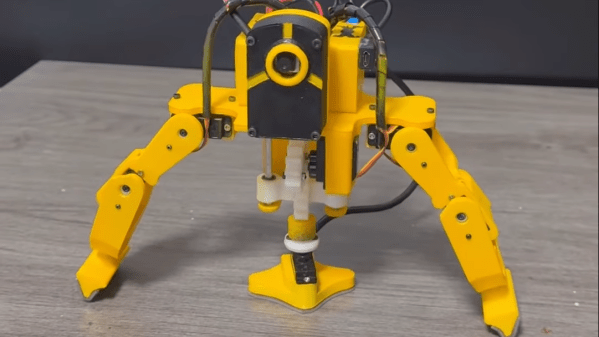

[hannu_hell] created Dodge as a “novel design of tripod.” It’s a small robotic device quite unlike anything else we’ve seen of late, intended as a camera platform that can reposition itself to capture footage as needed.

Dodge is essentially a two-legged robot with a large, flat “foot” in the center. When stationary, it rests on this foot. To move, it raises the center foot and stands on its two outer legs, which let it crab back and forth along a straight line. To turn, it settles back onto the center foot and pivots about its central axis. By alternating between these two modes, it can rotate to face a new direction and then use its outer legs to travel further.
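To make that two-mode gait concrete, here’s a minimal Python sketch of how a controller might turn a requested turn and distance into a sequence of moves. It’s our own illustration, not [hannu_hell]’s firmware: the `Step` and `plan_gait` names, the action labels, and the default 30 mm stride are all hypothetical, and it assumes Dodge starts out resting on its center foot.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    # Hypothetical action labels: "pivot", "raise_foot", "stride", "lower_foot".
    action: str
    amount: float = 0.0  # degrees for "pivot", millimetres for "stride"


def plan_gait(turn_deg: float, distance_mm: float, stride_mm: float = 30.0) -> list[Step]:
    """Sketch of a gait plan, assuming the robot starts resting on its center foot."""
    if stride_mm <= 0:
        raise ValueError("stride_mm must be positive")
    plan: list[Step] = []

    # Turning happens while resting on the center foot, pivoting about the central axis.
    if turn_deg:
        plan.append(Step("pivot", turn_deg))

    # Straight-line travel: lift the center foot and crab on the two outer legs.
    if distance_mm:
        plan.append(Step("raise_foot"))
        sign = 1.0 if distance_mm > 0 else -1.0
        full_strides = int(abs(distance_mm) // stride_mm)
        remainder = abs(distance_mm) - full_strides * stride_mm
        plan.extend(Step("stride", sign * stride_mm) for _ in range(full_strides))
        if remainder > 1e-9:
            plan.append(Step("stride", sign * remainder))  # shorter final stride
        # Settle back onto the center foot, the stationary pose.
        plan.append(Step("lower_foot"))

    return plan


if __name__ == "__main__":
    for step in plan_gait(90, 70):
        print(step)
```

Because turning only happens on the center foot, the plan always pivots first and only then lifts the foot to crab. A real controller would also have to drive the actual actuators and handle the camera stream, which this sketch leaves out.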

Dodge does all this while carrying an ESP32-CAM module, making it a small, mobile tripod with a live camera feed. It reminds us of the various little monitoring robots from cartoons and anime.

Ultimately, it’s an interesting take on robot locomotion. Rather than walking on two or four legs and relying on dynamic stability, it takes full advantage of static stability instead.

We’ve seen some wild roboticized camera rigs over the years. Video after the break.