[Koraks tinkers] was gifted a gargantuan photographic enlarger, a Durst Laborator 138 s, which is a unit designed specifically for black and white use only. This was not good enough for [Koraks], so down the rabbit hole of colour conversion we go! The moral of the story is this: if you can't find it, build it. The hacker mentality. After wasting time and effort trying to source a period colour head for the thing, [Koraks] did the decent thing and converted what was already in front of them.

Now, if you're thinking this process is simply a matter of ripping out the tungsten bulb and sticking a high-power RGB array in there, then you're going to be disappointed! You see, colour photography of the era (specifically the RA4 process in this case) requires careful colour calibration, and the required light balance is heavily biased towards the red end of the visible spectrum because of the spectral output of the tungsten bulbs we touched upon earlier.

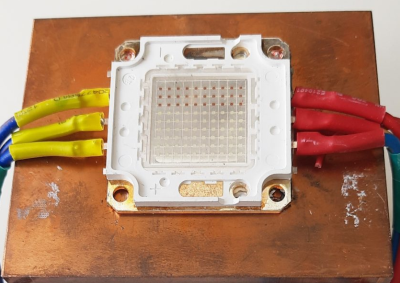

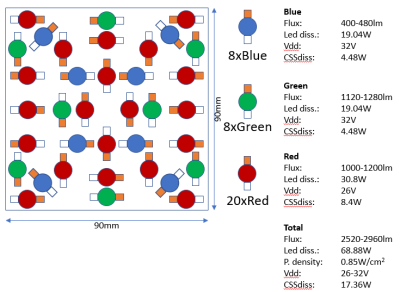

The first attempt at using an off-the-shelf COB array was a bust: it simply wasn't bright enough once the light had passed through the diffuser plate, and the light path losses were too high to expose the RA4 paper sufficiently, especially at the red end of the spectrum. Quite simply, this is due to the lower energy of red photons (compared to blue), which makes the rate of the desired chemical reaction too low. The solution is more power.
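For a feel of the numbers behind that red-versus-blue comparison, photon energy follows E = hc/λ. The short sketch below evaluates it for two wavelengths; 630 nm and 460 nm are assumed typical values for red and blue LEDs, not figures taken from the project.

```python
# Photon energy E = h * c / wavelength, expressed in electronvolts.
# Constants are the exact SI (2019) values.
PLANCK_H = 6.62607015e-34            # J*s
SPEED_OF_LIGHT = 299_792_458         # m/s
ELEMENTARY_CHARGE = 1.602176634e-19  # joules per electronvolt


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a single photon of the given wavelength, in eV."""
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_H * SPEED_OF_LIGHT / wavelength_m / ELEMENTARY_CHARGE


# Assumed LED wavelengths (hypothetical, for illustration only)
red = photon_energy_ev(630)
blue = photon_energy_ev(460)
print(f"red 630 nm: {red:.2f} eV, blue 460 nm: {blue:.2f} eV, "
      f"blue/red energy ratio: {blue / red:.2f}")
```

Since energy scales as 1/λ, the ratio between the two is simply the inverse ratio of the wavelengths, roughly 1.37 for these assumed values.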

Another issue that quickly presented itself was that 8 bits of PWM control of the RGB components was inadequate. The ratio of blue to red required was so skewed that only a few effective bits of blue channel control were usable, and that was far too coarse to get the necessary accuracy.
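To see why a skewed ratio eats into PWM resolution, consider a channel that never needs more than a fraction of full scale. The sketch below assumes, purely hypothetically, that blue tops out at 1/16 of full duty; the real ratio in the project will differ, and the helper names are illustrative only.

```python
import math


def usable_steps(pwm_bits: int, max_duty: float) -> int:
    """Distinct PWM counts available when a channel never exceeds
    max_duty (a fraction between 0 and 1) of full scale."""
    return int(2 ** pwm_bits * max_duty)


def effective_bits(pwm_bits: int, max_duty: float) -> float:
    """Resolution actually left for that channel, in bits."""
    return math.log2(usable_steps(pwm_bits, max_duty))


# Hypothetical: the blue channel only ever needs up to 1/16 of full duty
BLUE_MAX_DUTY = 1 / 16

for bits in (8, 12):
    steps = usable_steps(bits, BLUE_MAX_DUTY)
    print(f"{bits}-bit PWM: {steps} blue steps, "
          f"~{effective_bits(bits, BLUE_MAX_DUTY):.0f} effective bits, "
          f"finest step = {100 / steps:.2f}% of blue's range")
```

Under that assumption an 8-bit controller leaves only 16 usable blue levels (about 4 bits), while a 12-bit controller leaves 256 (about 8 bits), which is the kind of headroom the upgrade described next provides.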

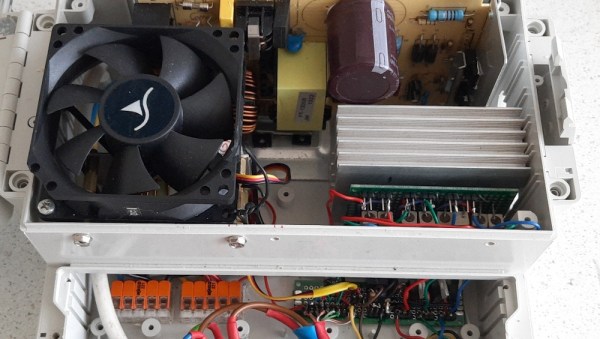

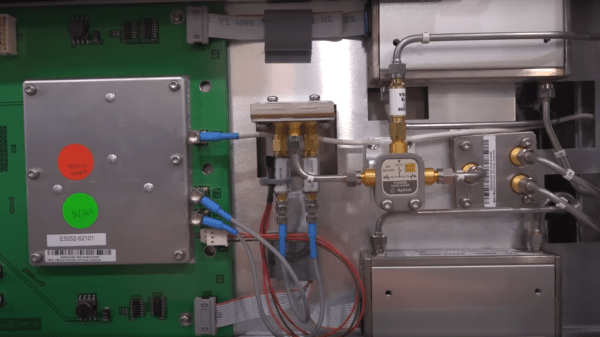

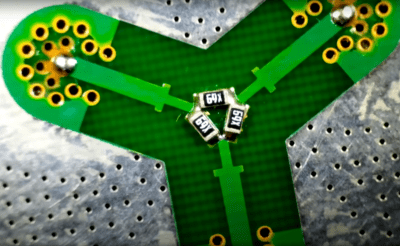

[Koraks'] approach was to custom-build an LED array with twenty red 3W LEDs and eight each of the green and blue devices. Twelve bits of PWM resolution were delivered by a PCA9685 PWM controller, which also handily controlled the cooling fans. The whole thing was hooked up to an Arduino Nano, with an MCP23016 I/O expander interfacing the rotary encoders and the trigger footswitch. In fact, several iterations of the LED array have been built, and this four-part blog series (Part 1, Part 2, Part 3, Part 4) lays out the whole story in all its gory detail for your entertainment. Enjoy!
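For the curious, driving a PCA9685 boils down to writing a handful of registers over I2C. The sketch below only computes the register/value pairs, using the register map from NXP's PCA9685 datasheet; it is not [Koraks'] firmware (which runs on the Arduino Nano), the I2C transport is left out, and the function names are hypothetical.

```python
# Register addresses from the PCA9685 datasheet
MODE1 = 0x00        # bit 4 (SLEEP) must be set while PRE_SCALE is written
LED0_ON_L = 0x06    # each channel uses 4 consecutive registers from here
PRE_SCALE = 0xFE
OSC_HZ = 25_000_000  # internal oscillator frequency


def prescale_for(pwm_hz: float) -> int:
    """PRE_SCALE value for a desired PWM frequency (datasheet formula)."""
    return round(OSC_HZ / (4096 * pwm_hz)) - 1


def channel_writes(channel: int, duty: int) -> list[tuple[int, int]]:
    """(register, byte) pairs that set one channel's duty cycle.

    duty is 0..4096: 0 uses the full-off bit, 4096 the full-on bit,
    anything in between is a 12-bit OFF count with ON at count 0.
    """
    if not 0 <= channel <= 15:
        raise ValueError("PCA9685 has channels 0-15")
    if not 0 <= duty <= 4096:
        raise ValueError("duty must be 0-4096")
    if duty == 0:
        on, off = 0, 0x1000      # bit 4 of OFF_H: output fully off
    elif duty == 4096:
        on, off = 0x1000, 0      # bit 4 of ON_H: output fully on
    else:
        on, off = 0, duty        # turn on at count 0, off at count `duty`
    base = LED0_ON_L + 4 * channel
    return [
        (base, on & 0xFF), (base + 1, on >> 8),        # ON_L, ON_H
        (base + 2, off & 0xFF), (base + 3, off >> 8),  # OFF_L, OFF_H
    ]


print(hex(prescale_for(200)))           # PWM frequency register value
print(channel_writes(3, 2048))          # e.g. a hypothetical blue channel at 50%
```

A nice side effect of this scheme is that a fan on a spare channel is handled exactly like an LED channel, just with a different duty value.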

COB LED arrays are pretty nifty; check out turning them into 7-segment displays, just because. If all you want is raw power, we reckon that 100W "should be enough for anyone…"

Thanks [macsimski] for the tip!

Update: Corrected the article header from ‘exposer head’ to ‘enlarger’ for clarity at the request of the project author.

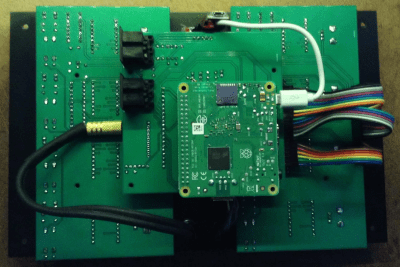

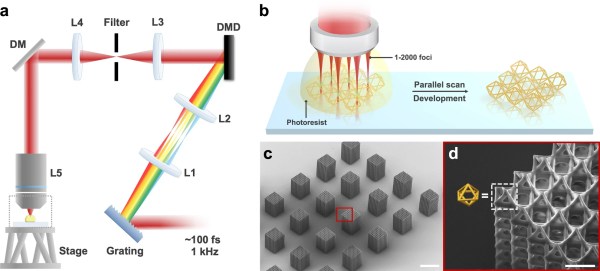

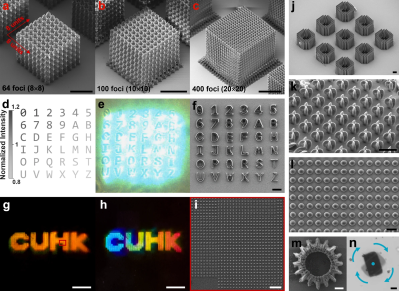
