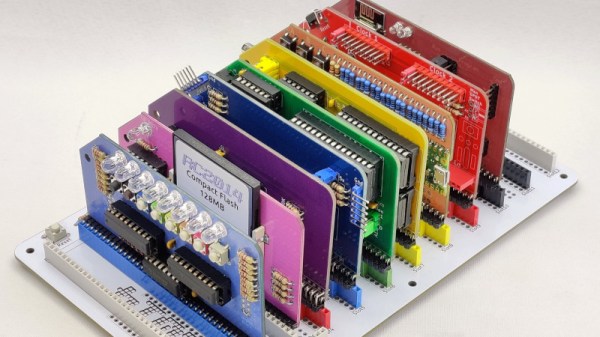

We all love PCB artwork, but its creators are restricted to a limited color palette. If a design can’t be made from some combination of board, plating, solder mask, and silkscreen, it can’t easily be rendered on a conventional PCB. That’s not the end of the story, though, because it’s technically possible to print onto a PCB in any color you like. Is it difficult? Read [Spencer]’s account of creating a rainbow Pride version of his RC2014 modular retrocomputer.

Dye-sublimation printing uses an ink that, at atmospheric pressure, passes straight from solid to vapor without a liquid phase: the solid ink is heated, and the vapor condenses back to a solid on the surface being printed. Commercial dye-sub printers are expensive, but there’s a cheaper route in the form of an Epson printer that can be converted to run sublimation ink. The converted printer prints onto transfer paper, and the ink is then transferred from the paper to the PCB using a T-shirt heat press.

[Spencer] took the advice to have his boards made with all-white silkscreen, and has come up with a good process for creating the colored boards. Excess heat during soldering can still discolor the print, so the kit instructions advise builders to take extra care. Even so, it’s a fascinating look at the process, and it raises the important point that this kind of printing may now be within reach of a hackerspace.

Regular readers will know we’ve long held an interest in the manufacture of artistic PCBs.