[Handy Geng] lives in Baoding, China, where average winter temperatures can get as low as −7.7 °C (18.1 °F). Rather than simply freezing in the cold when using the bathroom, he decided he could do better. Thus came about his rather unique toilet paper heating system.

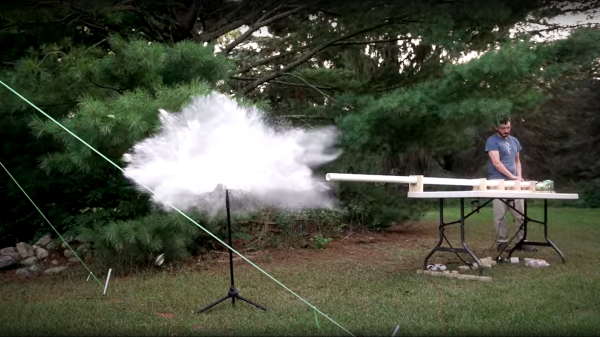

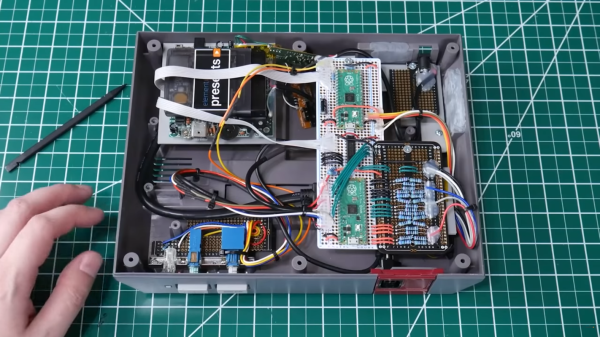

The build uses a gas burner heating up a wok. Toilet paper is fed into the wok body via motorized rollers salvaged from what appears to be an old counterfeit money detector. The wok is then shaken by a second motor in order to more evenly heat the toilet paper within. The burner can then be turned off, and the lid of the wok opened in order to gain access to the toasty toilet paper.

The system heats the toilet paper to a scorching 75 °C (167 °F). That's a little too warm to touch comfortably, but toilet paper doesn’t have much thermal mass, so it cools off quickly. It’s also, thankfully, well below paper's auto-ignition temperature of roughly 233 °C (451 °F).
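For anyone wanting to sanity-check the numbers, here's a minimal Python sketch of the unit conversions and the resulting safety margin. The function names are purely illustrative, and the 451 °F figure is simply the one quoted above, not a measured value.

```python
def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def f_to_c(fahrenheit: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (fahrenheit - 32) * 5 / 9


# Figures taken from the write-up above.
PAPER_TEMP_C = 75.0        # temperature the warmer reaches
AUTO_IGNITION_F = 451.0    # quoted auto-ignition temperature of paper

# Express both in Celsius and work out the headroom between them.
auto_ignition_c = f_to_c(AUTO_IGNITION_F)
margin_c = auto_ignition_c - PAPER_TEMP_C

print(f"Warmed paper: {PAPER_TEMP_C:.0f} °C ({c_to_f(PAPER_TEMP_C):.0f} °F)")
print(f"Auto-ignition: {auto_ignition_c:.0f} °C ({AUTO_IGNITION_F:.0f} °F)")
print(f"Margin: {margin_c:.0f} °C")
```

Under those assumptions, the paper sits well over 150 °C short of the quoted ignition point.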

It’s a noisy, clanging machine that nonetheless provides the same warmth and comfort that you’re probably more familiar with from the office photocopier. It’s not [Handy Geng]’s first bathroom hack, either. Video after the break.
