Breakfast is a meal fraught with paradoxes. It’s important to start the day with a hearty meal full of energy and nutrition, but it’s also difficult to cook when you’re still bleary-eyed and half asleep. As with many problems in life, automation is the answer. [James Bruton] has the rig that will boil your egg and get your day off to a good start.

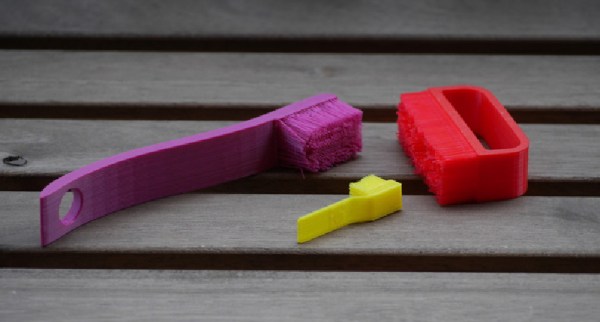

The basic apparatus uses a thermostatically controlled hotplate to heat a pot of water. [James] then employs an encoder-controlled linear actuator from a previous project to raise and lower a mesh colander, which carries the egg, into the pot. An Arduino measures the water temperature and only begins the cooking process once it is over 90 degrees Celsius. At that point a 6-minute timer starts; when it runs out, the egg is removed from the water and dumped out by a servo-controlled twist mechanism.
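The sequence described above maps neatly onto a small state machine. What follows is only a rough sketch of that idea, not [James]'s actual firmware: the 90 degree threshold and 6-minute cook time come from the write-up, while every name here (`EggBoiler`, `basketLowered`, `eggDumped` and so on) is made up for illustration, and we're assuming the basket goes down at the moment the threshold is crossed. Hardware calls are left out so the logic can be run anywhere.

```cpp
#include <cstdint>

// Values from the article; all identifiers below are hypothetical.
constexpr float kStartTempC = 90.0f;               // start cooking above this
constexpr uint32_t kCookMs = 6UL * 60UL * 1000UL;  // 6-minute boil

enum class State { Heating, Cooking, Dumping, Done };

struct EggBoiler {
    State state = State::Heating;
    uint32_t cookStartMs = 0;
    bool basketLowered = false;  // stand-in for the linear actuator position
    bool eggDumped = false;      // stand-in for the servo twist mechanism

    // Call repeatedly with the latest temperature reading and a millisecond clock.
    void update(float waterTempC, uint32_t nowMs) {
        switch (state) {
        case State::Heating:
            // Assumption: the egg goes in as soon as the water is hot enough.
            if (waterTempC > kStartTempC) {
                basketLowered = true;
                cookStartMs = nowMs;
                state = State::Cooking;
            }
            break;
        case State::Cooking:
            // Unsigned subtraction stays correct if the clock wraps around.
            if (nowMs - cookStartMs >= kCookMs) {
                basketLowered = false;  // raise the colander out of the water
                state = State::Dumping;
            }
            break;
        case State::Dumping:
            eggDumped = true;  // twist the basket to tip the egg out
            state = State::Done;
            break;
        case State::Done:
            break;  // nothing left to do until the next egg
        }
    }
};
```

On an Arduino, `update()` would presumably be called from `loop()` with a temperature reading and `millis()`, with the two boolean flags replaced by real actuator and servo commands.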

Future work will include servo control of the hotplate’s knob and a chute to catch the egg, further reducing the need for human intervention. While there’s some danger in leaving an automated hotplate running in the house, the rig could be synchronized with a real-time clock (RTC) to ensure your boiled egg is ready on time, every day.

Breakfast machines are a grand tradition around these parts, and we’ve seen a few in our time. Video after the break.

[Thanks to Baldpower for the tip!]