You’re hit by the global IC shortage, reduced to using stone knives and bearskins, but you still want to make something neat? It’s time to revisit BEAM robots.

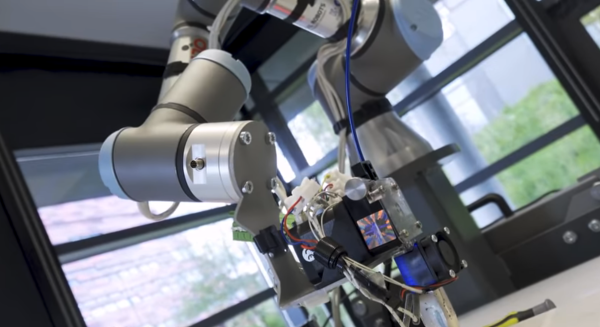

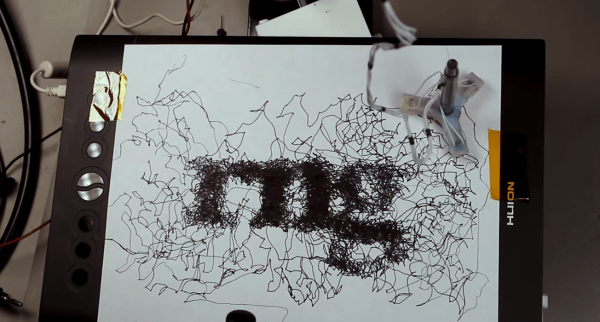

Biology, electronics, aesthetics, and mechanics: Mark Tilden came up with the idea of minimalist electronic creatures that, through inter-coupled weak control systems and clever mechanical setups, could mimic living bugs. And that’s not so crazy if you think about how few neurons something like a cockroach or an earthworm has. Yet their collection of sensors, motors, and skeletons makes for some pretty interesting behavior.

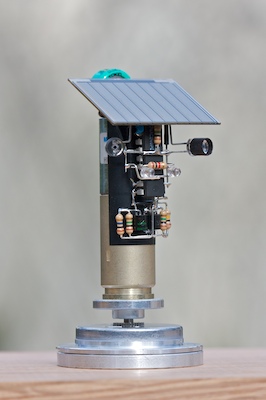

My favorite BEAM bots have always been the solar-powered ones. They move slowly or infrequently, but also inexorably, under solar power. In that way, they’re the most “alive”. Part of the design trick is to make sure they stay near their food (the sun) and don’t get stuck. One of my favorite styles is the “photovore” or “photopopper”, because they provide amazing bang for the buck.

Back in the heyday of BEAM, maybe 15 years ago, solar cells were inefficient and expensive, circuits for using their small current were leaky, and small motors were tricky to come by. Nowadays, that’s all changed. Power harvesting circuits leak only nanoamps, and low-voltage MOSFETs can switch almost losslessly. Is it time to revisit the BEAM principles? I’d wager you’d put the old guard to shame, and you won’t even need any of those newfangled microcontroller thingies, which are out of stock anyway.

If you make something, show us!