We all know, at least intellectually, that our computers are built from lots of tiny transistors. Beyond that, though, the details get a little hard to describe. They’re printed on a silicon wafer somehow, and since any sufficiently advanced technology is indistinguishable from magic, they miraculously underpin a large part of modern society. Even most computers from 40 or 50 years ago were built around various inscrutable integrated circuits. This computer, on the other hand, goes all the way back to first principles and implements a complete processor out of individual transistors instead.

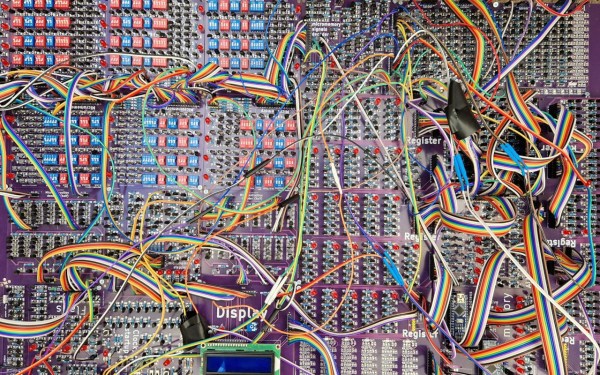

The transistor computer uses over 2000 individual transistors to implement everything that makes up the 11-bit CPU. The creator, Reddit user [Weekly_Salamander_78], also has an online interactive book that walks through each step required to get to a working computer like this. Starting with a guide on building logic gates from transistors, it will eventually cover adders, the arithmetic logic unit, memory, clocks, and everything else needed to get the complete CPU up and running. The design does rely on an Arduino for memory to simplify some things, and in the end it’s able to run a Hello, World! program and play a simple dinosaur game as well.
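To get a feel for how the climb from gates to arithmetic works, here is a rough Python sketch. It is not the creator's design: it assumes an idealized NAND gate as the only primitive (standing in for a small group of transistors), builds the other gates from it, and chains full adders into a ripple-carry adder. The 11-bit width comes from the article; the function names and the particular gate decomposition are our own illustrative choices.

```python
# Illustrative sketch only: an idealized gate model, not the actual circuit.

def nand(a: int, b: int) -> int:
    """Idealized NAND: output goes low only when both inputs are high."""
    return 0 if (a and b) else 1

# Every other gate below is composed purely from NAND.
def not_(a: int) -> int:
    return nand(a, a)

def and_(a: int, b: int) -> int:
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))

def xor(a: int, b: int) -> int:
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three single bits, returning (sum_bit, carry_out)."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return sum_bit, carry_out

WIDTH = 11  # word size mentioned in the article

def ripple_add(x: int, y: int, width: int = WIDTH) -> tuple[int, int]:
    """Add two width-bit numbers one bit at a time, passing the carry along."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry  # carry is the overflow out of the top bit

if __name__ == "__main__":
    print(ripple_add(5, 3))     # (8, 0)
    print(ripple_add(2047, 1))  # (0, 1): 11 bits wrap around past 2047
```

Each call to `nand` here stands in for a handful of physical transistors, which hints at how quickly the part count adds up once memory, control logic, and a clock are layered on top.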

Building a computer out of discrete components like this is an impressive accomplishment, although we don’t envy its creator when it comes time to troubleshoot or maintain all of those individual components. Presumably it would be much easier to work on than something like a relay computer, but for now we’ll all take a moment to be thankful that almost no one needs to debug vacuum tube computers anymore.