Most Chrome users will have come across a neat little Easter egg when their Internet connection has gone down: a game known as “T-Rex”, in which a dinosaur must jump over cacti. Whether or not that’s accurate in terms of the evolutionary timeline, it’s a bit of fun, and Volos Projects educator [Danko Bertović] decided to port the game to the ESP32.

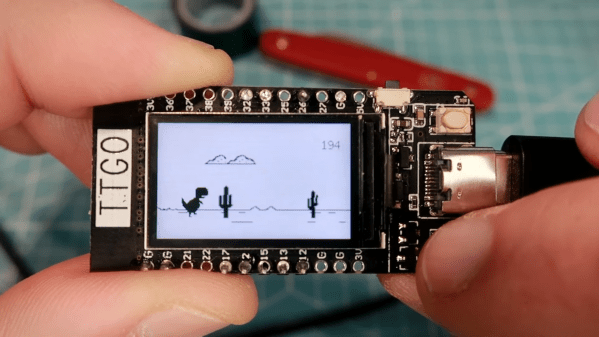

The game runs on the LILYGO TTGO T-Display development board, which pairs the powerful ESP32 microcontroller with a 1.14-inch color LCD. His clone even authentically replicates the “No Internet” page from Google Chrome before launching into the game at the press of a tactile button.
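We haven’t dug into how [Danko]’s firmware sequences its screens, but the flow described above (a static “No Internet” page that hands over to the game on a button press) maps naturally onto a small state machine. The C++ below is a hypothetical illustration, not his code: the `Screen` states, the `Button` helper and `nextScreen()` are names made up for this example. It treats a press as a released-to-pressed edge, so holding the button down doesn’t register as a stream of presses.

```cpp
// Hypothetical screen states; the names are illustrative, not from the original project.
enum class Screen { NoInternetPage, Playing };

// Turns a raw "button is down" reading into a single event per press.
// On an Arduino-style ESP32 build, isDown might come from digitalRead(pin) == LOW
// for a button wired to ground with a pull-up (an assumption about the wiring).
struct Button {
    bool wasDown = false;

    bool pressedEdge(bool isDown) {
        const bool edge = isDown && !wasDown; // released -> pressed transition only
        wasDown = isDown;
        return edge;
    }
};

// Advance the screen once per loop iteration: the fake Chrome page stays up
// until a press arrives, then the game takes over.
Screen nextScreen(Screen current, bool pressed) {
    if (current == Screen::NoInternetPage && pressed) return Screen::Playing;
    return current;
}
```

A real tactile switch would also want debouncing, for instance ignoring edges that arrive within a few milliseconds of the last one; that’s left out here to keep the sketch short.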

The game is built on a sprite-based engine, which keeps on-screen flickering to a minimum. The sprites also support transparency, so they don’t needlessly occlude other screen elements. [Danko] hasn’t yet released a full tutorial on using sprites on the ESP32, but the code is available for your own digestion.
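For anyone wondering why sprites help with flicker, here’s a minimal sketch of the general technique, assuming nothing about [Danko]’s actual engine. Each frame is composed in an off-screen buffer in RAM, sprite pixels of a designated key color are skipped so a sprite’s background doesn’t paint over what’s behind it, and only the finished frame is sent to the display. The panel therefore never shows a half-drawn frame in which the background has been erased but the sprites not yet redrawn. `FrameBuffer`, `Sprite`, `FakeDisplay` and the magenta key color are all hypothetical; `FakeDisplay::push()` stands in for whatever bulk transfer real firmware would use.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// RGB565 colors, the 16-bit format commonly used by small TFT panels.
constexpr uint16_t kBlack = 0x0000;
constexpr uint16_t kWhite = 0xFFFF;
constexpr uint16_t kGrey = 0x8410;
// Hypothetical color key: sprite pixels with this value are treated as transparent.
constexpr uint16_t kTransparent = 0xF81F; // magenta

struct Sprite {
    int w, h;
    std::vector<uint16_t> pixels; // row-major, w * h entries
};

// Off-screen frame: the whole scene is composed here before anything reaches the panel.
struct FrameBuffer {
    int w, h;
    std::vector<uint16_t> pixels;

    FrameBuffer(int width, int height)
        : w(width), h(height),
          pixels(static_cast<std::size_t>(width) * height, kWhite) {}

    void clear(uint16_t color) { std::fill(pixels.begin(), pixels.end(), color); }

    // Copy a sprite with its top-left corner at (x, y), skipping color-keyed
    // pixels and clipping anything that falls outside the buffer.
    void blit(const Sprite& s, int x, int y) {
        assert(s.pixels.size() == static_cast<std::size_t>(s.w) * s.h);
        for (int sy = 0; sy < s.h; ++sy) {
            const int dy = y + sy;
            if (dy < 0 || dy >= h) continue;
            for (int sx = 0; sx < s.w; ++sx) {
                const int dx = x + sx;
                if (dx < 0 || dx >= w) continue;
                const uint16_t c = s.pixels[sy * s.w + sx];
                if (c != kTransparent) pixels[dy * w + dx] = c;
            }
        }
    }
};

// Stand-in for the LCD: real firmware would send the buffer in one bulk transfer.
struct FakeDisplay {
    std::vector<uint16_t> shown;
    int pushes = 0;
    void push(const FrameBuffer& fb) {
        shown = fb.pixels;
        ++pushes;
    }
};
```

A frame then boils down to clearing the buffer, blitting a (hypothetical) cactus and dino sprite at their current positions, and calling `push()` once. The catch on a microcontroller is memory: assuming the T-Display’s 240×135 panel at 16 bits per pixel, a full-screen buffer needs 64,800 bytes of RAM, which is why smaller per-sprite buffers are also a common choice.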

It’s not the first time we’ve seen [Danko]’s ESP32 games, as he’s developed a few over the years. Others have gone so far as coding 8-bit emulators for the platform. Video after the break.