From Ferraris to F-16s, some things just look fast. This Rubik’s Cube-solving robot not only looks fast, it is fast: it solved a standard cube in 380 milliseconds. Blink during the video below and you’ll miss it. Even in the high-speed footage, we had trouble counting the moves in this solution; it looked like about 20.

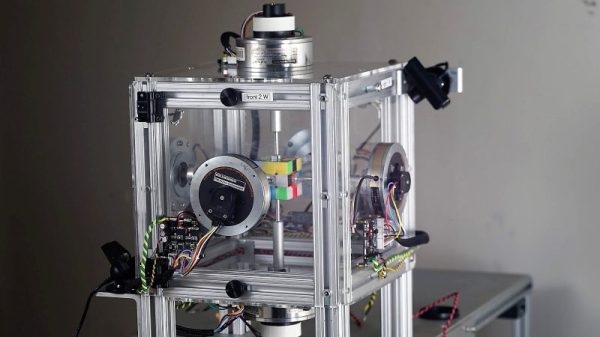

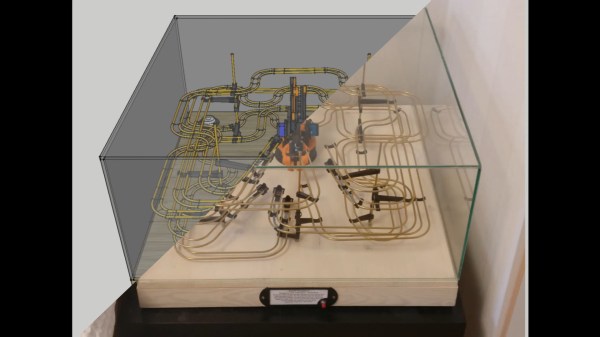

Beating the previous robot record of 637 milliseconds is just the icing on the cake of a very cool build undertaken by [Ben Katz]. He and his collaborator [Jared] put together a robot with a decidedly industrial look: an aluminum extrusion chassis, six pancake servo motors with high-precision optical encoders, and polycarbonate panels for explosion containment, which proved handy during development. The motors had to be modified so the encoders could be attached to the rear, and custom motor controllers were fabricated. [Jared] came up with a unique board to synchronize the six motors and prevent collisions between faces. Machine vision is provided by just two PlayStation Eye cameras; mounted at opposite corners of the enclosure, each camera can see three faces at a time. The cameras had a little trouble distinguishing the red from the orange, which was solved with a Sharpie.
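For a feel of why red and orange are the troublemakers, here is a minimal Python sketch of hue-based sticker classification. It is not the code [Ben] and [Jared] used; the reference hues, the saturation threshold, and the function names are all assumptions made up for illustration. Red and orange sit only about 30 degrees apart on the hue wheel, so a small shift in lighting or camera white balance can push a sample across the boundary.

```python
import colorsys

# Hypothetical reference hues in degrees. Real values depend on the cube,
# the lighting, and the camera; these are NOT taken from the build above.
REFERENCE_HUES = {
    "red": 0.0,
    "orange": 30.0,
    "yellow": 60.0,
    "green": 120.0,
    "blue": 240.0,
}


def hue_distance(a, b):
    """Shortest angular distance between two hues on the 360-degree wheel."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def classify_sticker(rgb, white_saturation=0.25):
    """Label an (r, g, b) sample (0-255 per channel) with the nearest cube color.

    white_saturation is an assumed threshold: anything less saturated
    than this is treated as the white face.
    """
    r, g, b = (c / 255.0 for c in rgb)
    h, s, _v = colorsys.rgb_to_hsv(r, g, b)
    if s < white_saturation:
        return "white"
    hue = h * 360.0
    # Pick the reference color whose hue is closest to the sample's hue.
    return min(REFERENCE_HUES, key=lambda name: hue_distance(hue, REFERENCE_HUES[name]))


if __name__ == "__main__":
    print(classify_sticker((255, 0, 0)))    # pure red sample
    print(classify_sticker((255, 128, 0)))  # orange sample, hue near 30 degrees
    print(classify_sticker((255, 60, 0)))   # reddish orange, close to the boundary
```

The third sample has a hue of roughly 14 degrees, within a couple of degrees of the midpoint between the two references. Darkening the orange stickers with a marker, as the builders did, is one way to widen that margin without touching any code.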

[Ben] and [Jared] think they can shave a few milliseconds here and there with tweaks, but even as it is, this is a great lesson in optimization and integration. We’ve covered Rubik’s robots before, like this two-motor slow and steady design and this six-motor build that solves a cube in less than a second.


