Building exoskeletons for people is a rapidly growing branch of robotics. Whether the goal is augmenting natural human abilities with added strength or assisting people with disabilities, the field has plenty of room for new inventions. One of the latest comes to us from a team at the University of Chicago, who recently demonstrated a method of adding brakes to a robotic glove that gives impressive digital control (PDF warning).

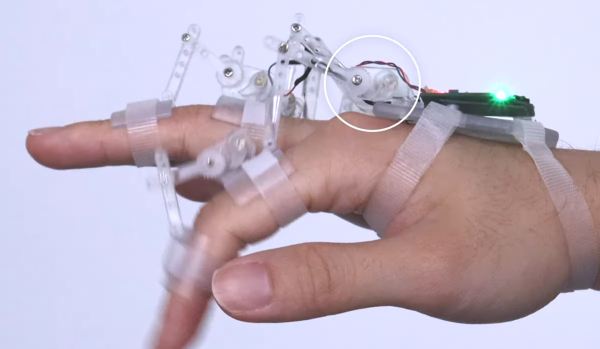

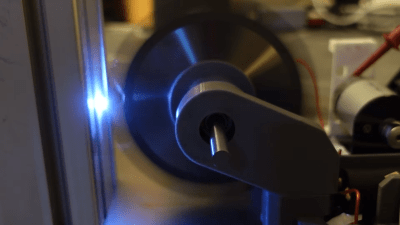

The robotic glove is known as DextrEMS, but it doesn’t actually move the fingers itself. That job is handled by a series of electrodes on the forearm which stimulate the finger muscles using Electrical Muscle Stimulation (EMS), hence the name. The problem with using EMS alone to manipulate fingers is that it isn’t very precise and tends to cause oscillations. That’s where the glove comes in: each finger includes a series of ratcheting mechanisms that act as brakes, holding the fingers in position precisely enough to make intelligible signs in sign language or even play a guitar or piano.
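To get a feel for why a brake helps, here is a toy simulation of the idea, not the team's actual controller. It assumes the EMS-driven joint behaves like an underdamped system that overshoots and rings around a steady angle, and that the brake can lock the joint the instant it first reaches the target. The function names and every parameter (`steady_angle`, `damping`, `freq`, the time step) are made up for illustration.

```python
import math


def ems_angle(t, steady_angle=60.0, damping=0.5, freq=6.0):
    """Toy model (assumption): joint angle in degrees under constant EMS.

    The angle starts at 0 and oscillates around steady_angle with a
    slowly decaying amplitude, standing in for EMS-induced oscillation.
    """
    return steady_angle * (1.0 - math.exp(-damping * t) * math.cos(freq * t))


def flex_with_brake(target, dt=0.001, t_max=2.0):
    """Stimulate until the joint first reaches target, then lock the brake.

    Returns the angle at which the joint is held and the time of locking.
    If the target is never reached, returns the last sampled angle and time.
    """
    steps = int(t_max / dt)
    angle, t = 0.0, 0.0
    for i in range(steps + 1):
        t = i * dt
        angle = ems_angle(t)
        if angle >= target:
            break  # brake engages here; the joint stays at this angle
    return angle, t


def unbraked_swing(t_start=0.5, t_max=2.0, dt=0.001):
    """Peak-to-peak angle swing of the unbraked joint over a time window."""
    count = int((t_max - t_start) / dt) + 1
    samples = [ems_angle(t_start + i * dt) for i in range(count)]
    return max(samples) - min(samples)


if __name__ == "__main__":
    held, when = flex_with_brake(45.0)
    print(f"braked: held at {held:.2f} deg after {when:.3f} s")
    print(f"unbraked: still swinging over {unbraked_swing():.1f} deg")
```

In this sketch the braked joint stops within a fraction of a degree of the target, while the unbraked one keeps swinging across a wide range. A real ratchet would lock at discrete tooth positions rather than at an arbitrary angle, which this model ignores.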

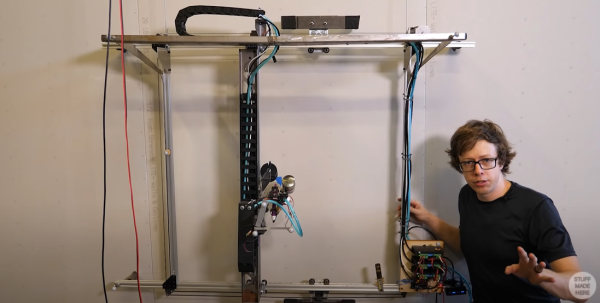

For anyone interested in robotics or exoskeletons, the paper is worth a read. Adding this level of precision to an exoskeleton that manipulates something as small as the fingers opens up a brave new world of robotics, but if you’re looking for something that operates on the scale of an entire human body, take a look at this full-size strength-multiplying exoskeleton that can help you lift superhuman amounts of weight.