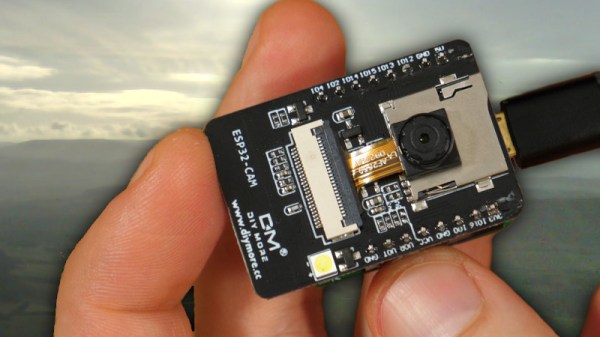

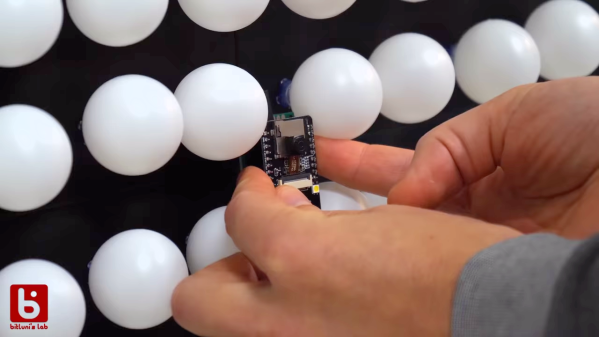

Just a few years ago, had someone asked you how much a digital camera with WiFi would cost, you probably wouldn’t have said $6. But that’s about how much [Bitluni] paid for an ESP32-CAM. He wanted to try making the little camera do time lapse, and it turns out that’s pretty easy to do.

Of course, the devil is in the details. The camera first needs to be configured over the USB interface, which is where you set up the Arduino integration and the WiFi connection. Because it stores each frame on an SD card, the board can’t take rapid-fire pictures. [Bitluni] reports that a 3-second delay was about the shortest he could manage, but for most purposes he used at least ten seconds.
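To get a feel for what those intervals mean in practice, here is a rough back-of-the-envelope sketch in Python. The function name and the 30 fps playback rate are our own assumptions, not anything from [Bitluni]'s code; only the 3-second and 10-second intervals come from his report.

```python
def timelapse_stats(capture_seconds, interval_seconds, playback_fps=30):
    """Return (frame_count, video_seconds, speedup) for a time lapse.

    Hypothetical helper for illustration only. playback_fps is an
    assumed value for the final video, not a setting on the camera.
    """
    if interval_seconds <= 0 or playback_fps <= 0:
        raise ValueError("interval and frame rate must be positive")
    # One frame is saved every interval_seconds of real time.
    frames = int(capture_seconds // interval_seconds)
    # Each saved frame is shown for 1/playback_fps seconds.
    video_seconds = frames / playback_fps
    # Real time compressed into each second of video.
    speedup = interval_seconds * playback_fps
    return frames, video_seconds, speedup


if __name__ == "__main__":
    for interval in (3, 10):
        frames, length, speedup = timelapse_stats(3600, interval)
        print(f"{interval} s interval: {frames} frames, "
              f"{length:.0f} s of video, {speedup}x speedup")
```

Under those assumptions, an hour of shooting at a ten-second interval gives 360 frames, or about 12 seconds of video.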

The program has a live preview window to help you set up the shot, but you should turn it off before recording starts so as not to overload the little processor and the I/O buses. The result is a bunch of JPG images that you can easily convert to a video on a PC if you wish.
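One common way to do that conversion is with ffmpeg. The sketch below only builds the command line, so you can inspect it before running it. It assumes the frames have been copied off the SD card into a folder, that they end in a lowercase `.jpg`, and that their names sort in capture order. None of that is confirmed by the source. The `glob` pattern type also depends on how your ffmpeg was built and is typically missing on Windows builds.

```python
import shlex
from pathlib import Path


def ffmpeg_command(frame_dir, output="timelapse.mp4", fps=30):
    """Build an ffmpeg command that joins JPG frames into an H.264 video.

    Hypothetical helper: the folder layout, the .jpg extension, and the
    default output name are assumptions made for this illustration.
    """
    pattern = str(Path(frame_dir) / "*.jpg")
    return [
        "ffmpeg",
        "-framerate", str(fps),     # input rate: frames shown per second
        "-pattern_type", "glob",    # expand the wildcard inside ffmpeg
        "-i", pattern,
        "-c:v", "libx264",          # widely supported video codec
        "-pix_fmt", "yuv420p",      # pixel format most players accept
        output,
    ]


if __name__ == "__main__":
    # Print the command rather than running it, so it can be checked first.
    print(shlex.join(ffmpeg_command("frames")))
```

To actually run it, pass the returned list to `subprocess.run(cmd, check=True)` on a machine with ffmpeg installed.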

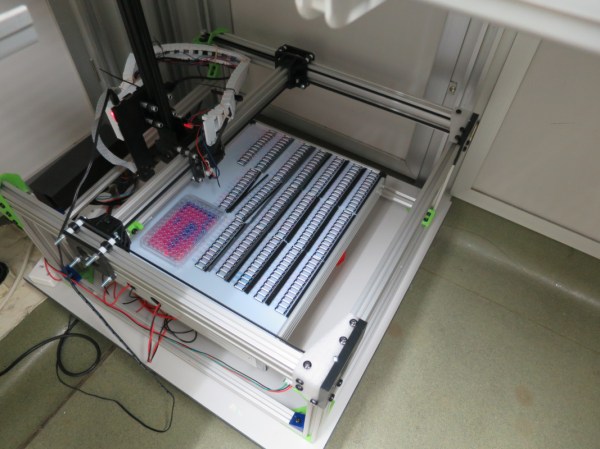

This might be a good way to fit a camera on a 3D printer, especially if you want the time lapse effect. Otherwise, you might sync the shots to layer changes. Now all [Bitluni] needs is an orbital rig.