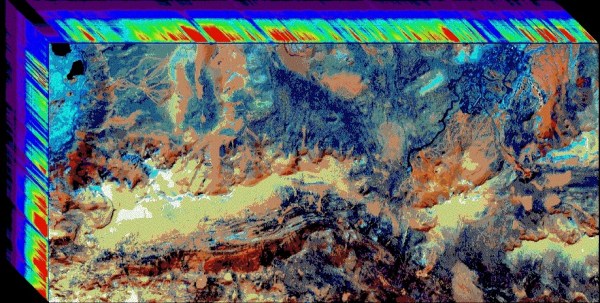

Large format photography gives its images a special quality, owing to the differences in depth of field and resolution between it and its more modern handheld equivalents. Projecting an image the size of a dinner plate rather than a postage stamp has a drawback when it comes to digital photography, though: sensor manufacturers do not make consumer products at that size.

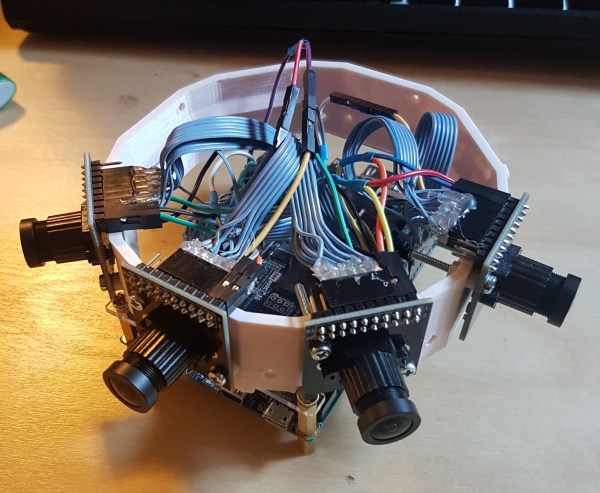

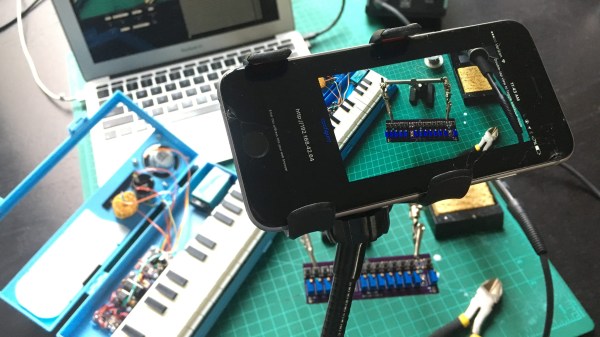

[Zev Hoover] has created a large format digital camera, and is using it not only for still images but for video. It’s an interesting device because of the way he’s translated a huge large-format image onto the relatively small sensor of a modern SLR. He projects the image from the large-format lens and bellows onto a screen made from an artist’s palette, a conveniently available piece of bright white plastic, and captures that image with his SLR mounted beneath the large-format lens assembly. Viewing the screen from below like this would normally cause perspective distortion, so to correct for it he’s mounted his SLR lens at an offset.

He does point out that since less light reaches the camera, the ISO setting has to be raised to compensate, but once that has been taken into account it performs satisfactorily. The result is a camera that makes something rather unusual possible: Victorian-style large-format images brought to life as video. He demonstrates it in the video below, complete with friends in suitably old-fashioned steampunk attire.

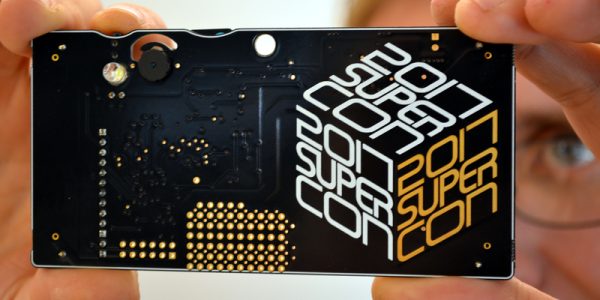

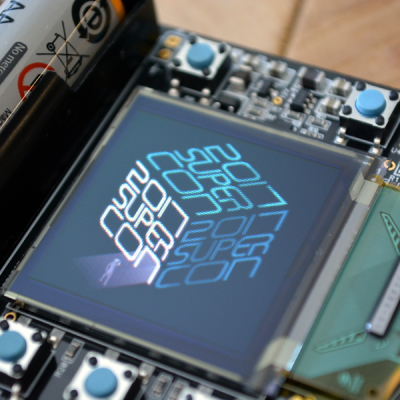

The Superconference is this weekend, and a few hundred Hackaday hackers will get their hands on this lump of open hardware. Something fantastic is certainly going to happen. If you couldn’t make it but still want to play along, now’s your chance!