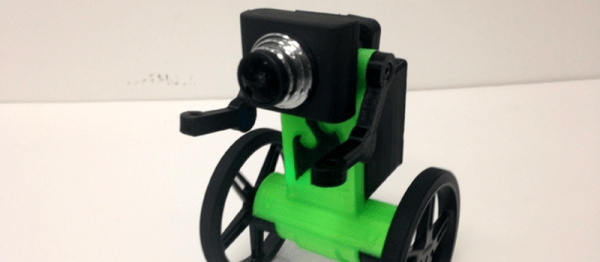

Eddie is a surprisingly capable tiny balancing robot based around the Intel Edison, from which it takes its name.

Eddie’s frame is 3D printed and comes in camera and top hat editions. The camera edition provides space for a webcam to be mounted, since the Edison has enough processing power to do basic vision. The top hat edition just lets you 3D print a tiny top hat for the robot.

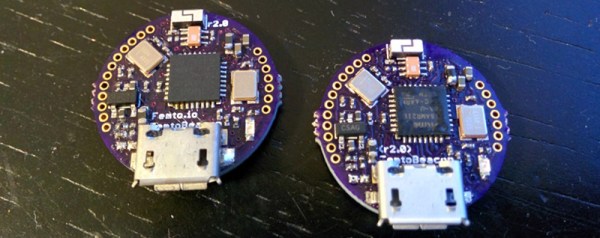

The electronics are based around the Edison board and SparkFun’s set of “Blocks” designed for it. This project needs the battery block, the H-Bridge block, the GPIO block, and the USB block, along with a 9DOF block for balancing. It’s, somewhat unfortunately, not a cheap robot. The motors are Pololu all-metal gearmotors with hall-effect sensors acting as encoders.

We’re really impressed with [diabetemonster]’s design and documentation on the robot. Full source code is provided along with a very nice build guide to get the platform going fast.

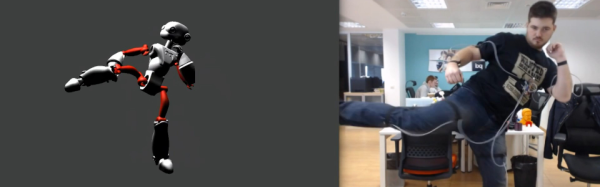

There are a few videos of it in action, available after the break. They show it handling situations such as slopes and a load being placed on the robot, as well as bonus features like dancing and remote control.
