If you’re currently living outside of a Spanish-speaking country, you may have heard of the music genre Reggaeton only in passing, if at all. In places with large Spanish-speaking populations, though, it would be more surprising if you hadn’t heard it. It’s so popular, especially in the Caribbean and Latin America, that it has gotten on some people’s nerves. One of them is [Roni], whose neighbor seems to listen to nothing else, loudly enough that it can be heard through the walls. To solve the problem, [Roni] is now introducing the Reggaeton-Be-Gone. (Google Translate from Spanish)

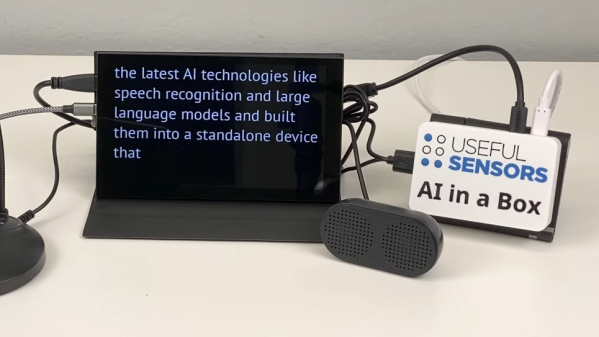

Inspired by the TV-B-Gone devices, which purported to be able to turn off annoying TVs in bars, restaurants, and other places, this device listens to music playing in the surrounding area and identifies whether or not it is Reggaeton. It does this using machine learning: it takes samples of the audio it hears and classifies them with a trained model. When the software, running on a Raspberry Pi, makes a positive identification, it looks for Bluetooth devices in the area and attempts to communicate with them in a number of ways, hopefully rapidly enough to disrupt their existing connections.
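The post doesn’t describe the model, its features, or how it decides when to act, so the following is only a minimal sketch of what the listening side of such a loop might look like. It assumes a hypothetical `score_fn` standing in for the trained classifier (returning a probability that one audio frame is Reggaeton), and the frame length, hop, window, and threshold values are illustrative. Averaging scores over several consecutive frames is our own assumption, added so that a single misclassified frame doesn’t trigger the device.

```python
from collections import deque
from typing import Callable, List, Sequence


def frame_audio(samples: Sequence[float], frame_len: int, hop: int) -> List[Sequence[float]]:
    """Split a mono sample buffer into overlapping fixed-length frames."""
    if frame_len <= 0 or hop <= 0:
        raise ValueError("frame_len and hop must be positive")
    # Only full-length frames are kept; a trailing partial frame is dropped.
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


class SmoothedDetector:
    """Report a detection only when the mean score of the last `window`
    frames reaches `threshold`.

    `score_fn` is a hypothetical stand-in for the trained model: it maps one
    frame of audio to a probability in [0, 1].
    """

    def __init__(self, score_fn: Callable[[Sequence[float]], float],
                 window: int = 5, threshold: float = 0.8):
        self.score_fn = score_fn
        self.scores = deque(maxlen=window)  # oldest score drops off automatically
        self.threshold = threshold

    def update(self, frame: Sequence[float]) -> bool:
        self.scores.append(self.score_fn(frame))
        # Wait until the window is full before deciding anything.
        if len(self.scores) < self.scores.maxlen:
            return False
        return sum(self.scores) / len(self.scores) >= self.threshold


if __name__ == "__main__":
    # Toy "model": treats any frame with a large peak as a positive.
    toy_model = lambda frame: 1.0 if max(abs(s) for s in frame) > 0.5 else 0.0
    detector = SmoothedDetector(toy_model, window=3, threshold=0.8)
    audio = [0.9, -0.8, 0.7, 0.9] * 10
    for frame in frame_audio(audio, frame_len=8, hop=4):
        if detector.update(frame):
            print("positive identification")
            break
```

In a real deployment the samples would come from a microphone and `score_fn` would wrap whatever model was trained; only the detection decision is sketched here.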

In testing with [Roni]’s neighbor, the device shows some promise: it doesn’t completely disconnect the speaker from its host, but it interferes enough that the neighbor moved to another location. Clearly it merits further testing, and perhaps other models could be trained for people who use Bluetooth speakers while skiing, hiking, or working out. The code will eventually be posted to this GitHub page, but until then, this isn’t the only way to interfere with your neighbor’s annoying stereo.

Thanks to [BaldPower] and [Alfredo] for the tips!