Controlling an e-bike is pretty straightforward. If you just want to let it rip, it’s a no-brainer, or rather a one-thumber, since a thumb throttle is the way to go. If you’d rather keep a bit of the experience of riding a bike, pedal assist, which senses when the pedals are turning and gives the rider a boost from the motor, is a good option.

But what if your e-conveyance is more of the aquatic variety? That’s an interface design problem of a different color, as [Braden Sunwold] has discovered with his DIY e-kayak. We’ve covered his work on this before, but to recap: his goal is an electric assist for his inflatable kayak that gives a boost when needed without taking away from the experience of kayaking. To that end, he used the motor and propeller from a hydrofoil to provide thrust, while puzzling through how to build an unobtrusive yet flexible controller for the motor.

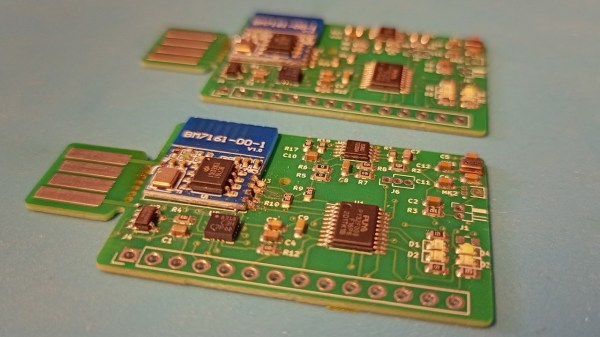

His answer is to mount an inertial measurement unit (IMU) in a waterproof container that clamps to the kayak paddle. The battery-powered controller uses an nRF link to talk to a Raspberry Pi in the kayak’s waterproof electronics box, and an LED ring light on the sensor gives the pilot feedback. The controller supports two modes: a manual mode, which simply runs the motor and turns the kayak into a (low) power boat, and an automatic mode, which detects when the pilot is paddling and adds a little thrust in the desired direction of travel.
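To make the "automatic mode" idea more concrete, here is a minimal sketch of how paddling might be detected from IMU data. It is not [Braden]'s method, which is a machine-learning model still in development; it swaps in a simple threshold-with-hysteresis heuristic. It assumes a single gyro axis (in deg/s) picks up the paddle shaft rocking side to side, so alternating left and right strokes show up as the rate swinging past a positive and then a negative threshold. The class `StrokeDetector`, the function `motor_command`, and all thresholds and throttle levels are hypothetical, and the sketch only decides whether to assist; it does not work out direction of travel or handle the nRF link.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class StrokeDetector:
    """Hypothetical paddle-stroke detector (threshold heuristic, not ML).

    Assumes one gyro axis (deg/s) sees the paddle rocking side to side,
    so alternating strokes swing the rate past +threshold and -threshold.
    """
    threshold: float = 60.0   # deg/s hysteresis band (assumed value)
    timeout_s: float = 2.0    # no stroke for this long -> paddler has stopped
    _state: int = field(default=0, init=False)  # last side seen: +1, -1, or 0
    _last_stroke_t: Optional[float] = field(default=None, init=False)
    stroke_times: List[float] = field(default_factory=list, init=False)

    def update(self, t: float, gyro_rate: float) -> bool:
        """Feed one sample at time t (s); return True while paddling."""
        side = 0
        if gyro_rate > self.threshold:
            side = 1
        elif gyro_rate < -self.threshold:
            side = -1
        # Count a stroke only when the rate crosses to the *opposite* side,
        # so a single bump of the paddle does not start the motor.
        if side != 0 and side != self._state:
            if self._state != 0:
                self._last_stroke_t = t
                self.stroke_times.append(t)
            self._state = side
        return (self._last_stroke_t is not None
                and (t - self._last_stroke_t) <= self.timeout_s)


def motor_command(mode: str, paddling: bool,
                  manual_throttle: float = 0.5,
                  assist_throttle: float = 0.3) -> float:
    """Return a throttle fraction (0..1); throttle levels are assumptions."""
    if mode == "manual":
        return manual_throttle  # just run the motor
    if mode == "auto":
        return assist_throttle if paddling else 0.0  # assist only while paddling
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    # Synthetic data: 6 s of paddling (50 Hz samples), then 4 s of rest.
    det = StrokeDetector()
    for i in range(500):
        t = i * 0.02
        rate = 100.0 * math.sin(math.pi * t) if i < 300 else 0.0
        paddling = det.update(t, rate)
    print(len(det.stroke_times), motor_command("auto", paddling))
```

Requiring an alternating crossing, plus a timeout, is a cheap way to reject stray jolts while still letting the assist fade out shortly after the pilot stops paddling; a learned model could replace `update()` without changing the mode logic.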

The video below shows the considerable effort [Braden] and his project partner [Jordan] put into building the waterproof enclosure for the controller. The clamp is especially interesting, since it has to keep the sensor properly oriented on the paddle. [Braden] is working on a machine-learning approach that analyzes paddle motions to work out what the pilot is doing and where they want the kayak to go. Once that model is built, it should be time to hit the water and see what this thing can do. We’re eager to see the results.
