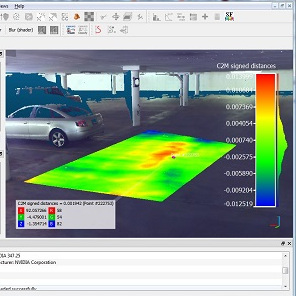

It’s sometimes useful for a system to not just have a flat 2D camera view of things, but to have an understanding of the depth of a scene. Dual RGB cameras can be used to sense depth by contrasting the two slightly different views, in much the same way that our own eyes work. It’s considered an economical but limited method of depth sensing, or at least it was before FoundationStereo came along and blew previous results out of the water. The project page has a load of interactive comparisons to play with and see for yourself, so check it out.
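To make "contrasting the two slightly different views" concrete, here is a minimal sketch of the classical approach: find how far a feature shifts between the left and right images (the disparity), then triangulate depth from it. This is plain block matching on a single scanline, not how FoundationStereo works internally, and the function names, pixel values, focal length, and baseline are all made up for illustration. It assumes a rectified camera pair, so a feature only shifts horizontally.

```python
def match_disparity(left_row, right_row, x, half_window=1, max_disparity=8):
    """Return the shift d (pixels) that best aligns the window around
    left_row[x] with the window around right_row[x - d].

    Uses sum-of-absolute-differences (SAD) as the matching cost.
    Assumes rectified images, so matches lie on the same scanline.
    """
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("window around x falls outside the row")

    width = 2 * half_window + 1
    left_start = x - half_window
    best_d, best_cost = 0, float("inf")

    for d in range(max_disparity + 1):
        right_start = left_start - d
        if right_start < 0:
            break  # candidate window would run off the left edge
        cost = sum(
            abs(left_row[left_start + i] - right_row[right_start + i])
            for i in range(width)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Triangulate depth in metres: Z = f * B / d."""
    if disparity_px <= 0:
        return float("inf")  # no shift means the point is effectively at infinity
    return focal_length_px * baseline_m / disparity_px


# Illustrative data: a bright feature sits 3 pixels further left in the right view.
left_row = [10, 10, 10, 10, 10, 200, 90, 40, 10, 10, 10, 10]
right_row = [10, 10, 200, 90, 40, 10, 10, 10, 10, 10, 10, 10]

d = match_disparity(left_row, right_row, x=6)
# Hypothetical camera: 720 px focal length, 12.5 cm between the lenses.
z = depth_from_disparity(d, focal_length_px=720.0, baseline_m=0.125)
print(f"disparity = {d} px, depth = {z} m")
```

Nearby objects shift a lot between the two views and distant ones barely move, which is why depth falls off as the inverse of disparity. Simple matching like this breaks down on textureless, reflective, or occluded regions, which is part of why the method has been considered limited.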

The FoundationStereo paper explains how researchers leveraged machine learning to create a system that can outperform not only existing dual RGB camera setups, but even active depth-sensing cameras such as the Intel RealSense.

FoundationStereo is specifically designed for strong zero-shot performance, meaning it delivers useful general results with no additional training needed to handle any particular scene or environment. The framework and models are available from the project’s GitHub repository.

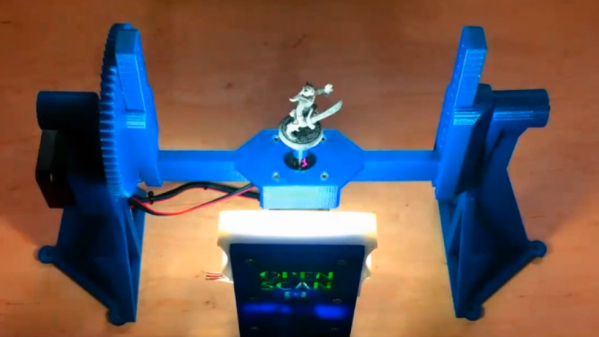

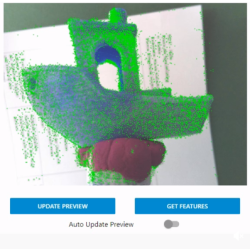

While products like Microsoft’s Kinect have struggled to keep the consumer’s attention, depth sensing remains an enabling technology that opens possibilities and gives rise to interesting projects, like a headset that allows one to see the world through the eyes of a depth sensor.

The ability to easily and quickly gain an understanding of the physical layout of a space is a powerful tool, and if a system like this one can deliver such fantastic results with nothing more than two RGB cameras, that’s a great sign. Watch it in action in the video below.
