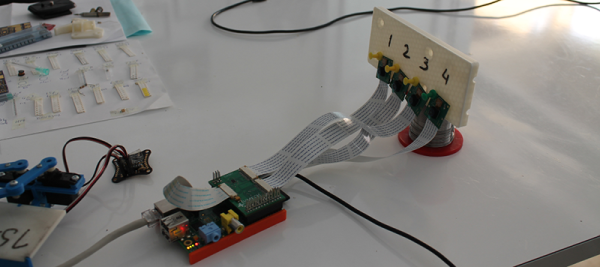

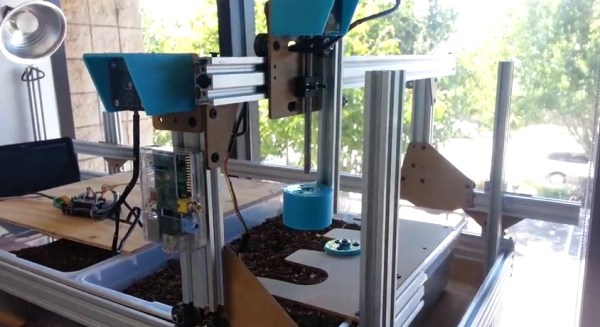

The Raspberry Pi and its cool camera add-on are a great way to send images and video up to the Intertubes, but what if you want to monitor more than one scene? The IVPort can multiplex up to sixteen of these Raspi camera modules, giving the Pi sixteen different views of the world and a ridiculously tall stack of boards connected to the GPIO header.

The Raspberry Pi’s CSI interface uses high-speed data lines to move a lot of image data from the camera to the CPU quickly. Controlling the camera, on the other hand, uses regular old GPIOs, the same kind that are broken out on the header. We’ve seen builds that reuse these GPIOs to blink an LED, but with a breakout board that adds more camera connectors, ordinary header GPIO lines can stand in for the camera port’s GPIOs.
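
To make the idea concrete, here is a minimal sketch of how a few ordinary GPIO outputs could pick one camera out of four. It assumes a simple binary select bus of two lines on hypothetical BCM pins 17 and 18; the post does not give the IVPort's actual pin assignments or selection protocol, so `SELECT_PINS`, `select_levels`, and `select_camera` are illustrative names only. The pin writer is passed in as a callable so the logic can run anywhere; on a real Pi you might pass RPi.GPIO's `GPIO.output` after configuring each pin with `GPIO.setup(pin, GPIO.OUT)`.

```python
from typing import Callable, Dict, List, Sequence

# HYPOTHETICAL wiring: two BCM pins acting as a binary select bus for one
# four-camera board. These are not the IVPort's documented pin assignments.
SELECT_PINS: Sequence[int] = (17, 18)


def select_levels(camera: int, n_lines: int) -> List[int]:
    """Return the logic level (0 or 1) for each select line, least significant bit first."""
    if not 0 <= camera < 2 ** n_lines:
        raise ValueError(f"camera {camera} is out of range for {n_lines} select lines")
    return [(camera >> bit) & 1 for bit in range(n_lines)]


def select_camera(camera: int,
                  write_pin: Callable[[int, int], None],
                  pins: Sequence[int] = SELECT_PINS) -> None:
    """Drive each select pin to the level that would route `camera` to the CSI port."""
    for pin, level in zip(pins, select_levels(camera, len(pins))):
        write_pin(pin, level)


if __name__ == "__main__":
    # Stand-in pin writer that just records the levels it was asked to set.
    state: Dict[int, int] = {}
    select_camera(2, lambda pin, level: state.__setitem__(pin, level))
    print(state)  # {17: 0, 18: 1}
```

With two select lines there are exactly four combinations, which is why four cameras per board is a natural fit for this kind of scheme.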

The result is a stackable extension board that splits the camera port four ways, allowing four Raspi cameras to be connected. Stack another board on top and you can add four more cameras. Up to four of these boards can be stacked together, multiplexing sixteen Raspberry Pi cameras.
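
The stacking arithmetic (four cameras per board, up to four boards) can be sketched as a mapping from a single camera number to a board and a port on that board. The board-major numbering order and the `locate_camera` helper are hypothetical conventions for illustration, not something the post specifies.

```python
from typing import Tuple

CAMERAS_PER_BOARD = 4  # from the post: four camera connectors per board
MAX_BOARDS = 4         # from the post: up to four boards in one stack


def locate_camera(index: int) -> Tuple[int, int]:
    """Map a 0-based camera index across the whole stack to (board, port), both 0-based.

    The board-major ordering used here is an illustrative assumption.
    """
    total = CAMERAS_PER_BOARD * MAX_BOARDS
    if not 0 <= index < total:
        raise ValueError(f"camera index {index} outside 0..{total - 1}")
    board, port = divmod(index, CAMERAS_PER_BOARD)
    return board, port


if __name__ == "__main__":
    print(CAMERAS_PER_BOARD * MAX_BOARDS)  # 16
    print(locate_camera(9))                # (2, 1)
```

Board-major ordering keeps each board's four cameras contiguous, so adding another board to the top of the stack simply extends the valid index range by four.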

As for the inevitable ‘why’ question, there are a few interesting things you can do with a dozen or so computer-controlled cameras. The obvious choice would be a bullet time camera rig, something this board should be capable of, given that it takes only 50 ns to switch between channels. Videos below.

The project featured in this post is