It is said that Benjamin Franklin, while watching the first manned flight of a hot air balloon by the Montgolfier brothers in Paris in 1783, responded when questioned as to the practical value of such a thing, “Of what practical use is a new-born baby?” Dr. Franklin certainly had a knack for getting to the heart of an issue.

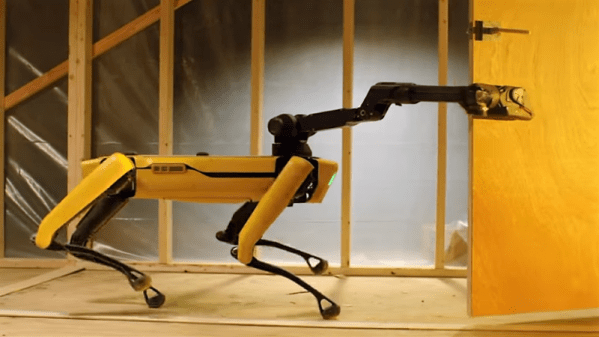

Much the same can be said for Spot, the extremely videogenic dog-like robot that Boston Dynamics has been teasing for years. It appears that the wait for a production version of the robot is at least partially over, and that Spot (once known as Spot Mini) will soon be available for purchase by “select partners” who “have a compelling use case or a development team that [Boston Dynamics] believe can do something really interesting with the robot,” according to VP of business development Michael Perry.

The qualification of potential purchasers will certainly limit the pool of early adopters, as will the price tag, which is said to be as much as a new car, and a nice one at that. So it's unlikely that one will show up in a YouTube teardown video anytime soon. Until the day that Dave Jones manages to find one in his magic Australian dumpster, we'll have to entertain ourselves by trying to answer a simple question: Of what practical use is a robotic dog?