We are frequently struck by how often we spend time trying to optimize something when we would be better off just picking a better algorithm. There is the old story about the mathematician Gauss who, when in school, was given busy work to add the integers from 1 to 100. While the other students laboriously added each number, Gauss realized that 100 + 1 is 101 and 99 + 2 is also 101. Guess what 98 + 3 is? Of course, 101. So you can easily find that there are 50 pairs that add up to 101 and know the answer is 5,050. No matter how fast you can add, you aren’t likely to beat someone who knows that algorithm. So here’s a question: You have a large body of text and you want to search it. What’s the best way?
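The pairing trick from the Gauss story can be sketched in a few lines of Python. The function names here are made up for illustration; the point is only that the closed form does one multiplication where the loop does n additions.

```python
def sum_by_adding(n: int) -> int:
    """Add 1 + 2 + ... + n one term at a time (n additions)."""
    total = 0
    for k in range(1, n + 1):
        total += k
    return total


def sum_by_pairing(n: int) -> int:
    """Gauss's pairing: n/2 pairs that each add up to n + 1."""
    return n * (n + 1) // 2


print(sum_by_adding(100))   # 5050
print(sum_by_pairing(100))  # 5050
```

For n = 100 the pairing gives 50 × 101 = 5,050, matching the story. Because one of n and n + 1 is always even, the integer division is exact for odd n as well.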

Who Is Thinking About Open Source Firmware?

Yesterday, we ran a post on NVIDIA’s announcement of open-source drivers for some of its most recent video cards. And Hackaday being huge proponents of open-source software and hardware, you’d think we’d be pouring the champagne. But it’s trickier than that.

Part of the reason that they are able to publish a completely new, open-source driver is that the secrets that they’d like to keep have moved into the firmware. So is the system as a whole more or less open? Yeah, maybe both.

With a more open interface between the hardware and the operating system, the jobs of people porting the drivers to different architectures are going to be easier. Bugs that are in what is now the driver layer should get found and fixed faster. All of the usual open-source arguments apply. But at the same time, the system as a whole isn’t all that much more transparent. The irony about the new NVIDIA drivers is that we’ve been pushing them to be more open for decades, and they’ve responded by pushing their secrets off into firmware.

Secrets that move from software to firmware are still secrets, and even those among us who are the most staunch proponents of open source have closed hardware and firmware paths in our computers. Take the Intel Management Engine, a small computer inside your computer that’s running all the time, even while the computer is “off”. You’d like to audit the code for that? Sorry. And it’s not like it hasn’t had its fair share of security-relevant bugs.

And the rabbit hole goes deeper, of course. No modern x86 chips actually run the x86 machine language instructions. Instead, they have a microcode interpreter that reads the machine language and translates it into what the chip really speaks. This is tremendously handy because it means that chip vendors can work around silicon bugs by simply pushing out a firmware update. But this also means that your CPU is running a secret firmware layer at its core. This layer is of course not without bugs, some of which can have security-relevant implications.

This goes double for your smartphone, which is chock-full of multiple processors that work more or less together to get the job done. So while Android users live in a more open environment than their iOS brethren, when you start to look down at the firmware layer, everything is the same. The top layer of the OS is open, but it’s swimming on top of an ocean of binary blobs.

How relevant any of this is to you might depend on what you intend to do with the device. If you’re into open source because you like to hack on software, having open drivers is a fantastic resource. If you’re looking toward openness for the security guarantees it offers, well, you’re out of luck because you still have to trust the firmware blindly. And if you’re into open source because the bugs tend to be found quicker, it’s a mix — while the top level drivers are made more inspectable, other parts of the code are pushed deeper into obscurity. Maybe it’s time to start paying attention to open source firmware?

NVIDIA Releases Drivers With Openness Flavor

This year, we’ve already seen sizeable leaks of NVIDIA source code, and a release of open-source drivers for NVIDIA Tegra. It seems NVIDIA decided to amp it up, and just released open-source GPU kernel modules for Linux. The GitHub link named open-gpu-kernel-modules has people rejoicing, and we are already testing the code out, making memes and speculating about the future. This driver is currently claimed to be experimental, only “production-ready” for datacenter cards – but you can already try it out!

The Driver’s Present State

Of course, there’s nuance. This is new code, and unrelated to the well-known proprietary driver. It will only work on cards starting from RTX 2000 and Quadro RTX series (aka Turing and onward). The good news is that performance is comparable to the closed-source driver, even at this point! A peculiarity of this project: a good portion of the features that AMD and Intel drivers implement in the Linux kernel are, instead, provided by a binary blob running inside the GPU. This blob runs on the GSP (GPU System Processor), a RISC-V core that’s only available on Turing GPUs and newer, hence the series limitation. Now, every GPU loads a piece of firmware, but this one’s hefty!

Barring that, this driver already provides more coherent integration into the Linux kernel, with massive benefits that will only increase going forward. Not everything’s open yet – NVIDIA’s userspace libraries and OpenGL, Vulkan, OpenCL and CUDA drivers remain closed, for now. Same goes for the old NVIDIA proprietary driver that, I’d guess, would be left to rot – fitting, as “leaving to rot” is what that driver has previously done to generations of old but perfectly usable cards.

From Car To Device: How Software Is Changing Vehicle Ownership

For much of the last century, the ownership, loving care, and maintenance of an aged and decrepit automobile has been a rite of passage among the mechanically inclined. Sure, the battle against rust and worn-out parts may eventually be lost, but through that bond between hacker and machine are the formative experiences of motoring forged. In middle age, we wouldn’t think of setting off across the continent on a wing and a prayer in a decades-old vehicle, but somehow in our twenties we managed it. The Drive have a piece that explores how technological shifts in motor vehicle design are changing our relationship with cars such that what we’ve just described may become a thing of the past. Titled “The Era of ‘the Car You Own Forever’ Is Coming to an End”, it’s well worth a read.

At the crux of their argument is that carmakers are moving from a model in which they produce motor vehicles that are simply machines, into one where the vehicles are more like receptacles for their software. In much the same way as a smartphone is obsolete not necessarily through its hardware becoming useless but through its software becoming unmaintained, so will the cars of the future. Behind this is a commercial shift as the manufacturers chase profits and shareholder valuations, and a legal change in the relationship between customer and manufacturer that moves from ownership of a machine into being subject to the terms of a software license.

This last point should be particularly concerning to all of us. After all, if we’re expected to pay tens of thousands of dollars for a car, it’s not unreasonable to expect that it will continue to serve us at our convenience rather than at that of its manufacturer.

If you’re a long-time Hackaday reader, you may remember that we’ve touched on this topic before.

Header image: Carolyn Williams, CC BY 2.0.

Tiny RISC Virtual Machine Is Built For Speed

Most of us are familiar with virtual machines (VMs) as a way to test out various operating systems, reliably deploy servers and other software, or protect against potentially malicious software. But virtual machines aren’t limited to running full server or desktop operating systems. This tiny VM is capable of deploying software on less powerful systems like the Raspberry Pi or AVR microcontrollers, and it is exceptionally fast as well.

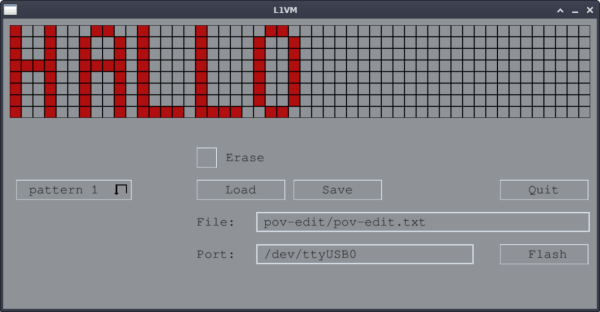

The virtual machine is built from scratch by [koder77]. It includes a RISC processor with only 61 opcodes and a 64-bit core, and it runs code written either in assembly or in his own programming language, called “Brackets”. It’s designed to be modular, so only those things needed for a given application are loaded into the VM. With these design criteria it turns out to be up to seven times as fast as comparably small VMs like NanoVM. [koder77] has even used its direct mouse readout and joystick functionality to control a Raspberry Pi 3D camera robot.
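To give a feel for what a small register-based VM does at its core, here is a minimal Python sketch of a fetch, decode, and execute loop. It is not [koder77]’s design: the five opcodes, the tuple instruction format, and the `run` function are all invented for illustration, and the only detail borrowed from the description above is that register values are kept to 64 bits.

```python
# Hypothetical opcodes for illustration only; not the project's real instruction set.
LOADI, ADD, MUL, JNZ, HALT = range(5)
MASK64 = (1 << 64) - 1  # wrap register values to 64 bits


def run(program, num_registers=4):
    """Execute a list of (opcode, a, b, c) tuples and return the registers."""
    regs = [0] * num_registers
    pc = 0  # program counter: index of the next instruction
    while True:
        op, a, b, c = program[pc]  # fetch and decode
        pc += 1
        if op == LOADI:    # regs[a] = immediate value b
            regs[a] = b & MASK64
        elif op == ADD:    # regs[a] = regs[b] + regs[c]
            regs[a] = (regs[b] + regs[c]) & MASK64
        elif op == MUL:    # regs[a] = regs[b] * regs[c]
            regs[a] = (regs[b] * regs[c]) & MASK64
        elif op == JNZ:    # jump to instruction b if regs[a] is non-zero
            if regs[a] != 0:
                pc = b
        elif op == HALT:
            return regs
        else:
            raise ValueError(f"unknown opcode {op}")


# Compute 5! with a countdown loop.
factorial_5 = [
    (LOADI, 0, 1, 0),       # r0 = 1 (accumulator)
    (LOADI, 1, 5, 0),       # r1 = 5 (counter)
    (LOADI, 2, MASK64, 0),  # r2 = -1 in 64-bit two's complement
    (MUL, 0, 0, 1),         # r0 = r0 * r1
    (ADD, 1, 1, 2),         # r1 = r1 - 1 (wraps around modulo 2**64)
    (JNZ, 1, 3, 0),         # loop back to the MUL while r1 != 0
    (HALT, 0, 0, 0),
]
print(run(factorial_5)[0])  # 120
```

A real VM would typically be written in a compiled language and would decode packed binary instructions rather than Python tuples, but the loop has the same shape: a small opcode set keeps the dispatch step short, which is one plausible reason a minimal RISC-style design can be fast.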

For anyone looking to add an efficient VM to a small computing environment, [koder77] has made the project open-source on his GitHub page. This also includes all of the modules he has created so far, which greatly expand the project’s capabilities. For some further reading on exceedingly tiny virtual machines, a project we featured way back in 2012 allows users to run Java on similar hardware.

90s Apple Computer Finally Runs Unsigned Code

Back in the 90s, the console wars were in full swing. Nintendo vs Sega was an epic showdown at first, but when Nintendo seemed sure to clinch the victory, Sony came out of nowhere with the PlayStation. While these were the most popular consoles at the time, there were a few others around that are largely forgotten by history even if they were revolutionary in some ways. An example is the Pippin, a console made by Apple, which until now has been unable to run any software not signed by Apple.

The Pippin was Apple’s only foray into gaming consoles, but it did much more than that and included a primitive social networking system as well as the ability to run Apple’s Macintosh operating system. The idea was to be a full media center of sorts, and the software that it would run would be loaded from the CD-ROM at each boot. [Blitter] has finally cracked this computer, allowing it to run custom software, by creating an authentication file which is placed on the CD to tell the Pippin that it is “approved” by Apple.

The build log goes into incredible detail on the way these machines operated, and if you have a Pippin still sitting around it might be time to grab it out of the box and start customizing it in the way you probably always wanted to. For those interested in other obscure Apple products, take a look at this build which brings modern WiFi to the Apple Newton, their early PDA.

Open-Source Farming Robot Now Includes Simulations

Farming is a challenge under even the best of circumstances. Almost all conventional farmers use some combination of tillers, combines, seeders and plows to help get the difficult job done, but for those like [Taylor] who do not farm large industrial monocultures, more specialized tools are needed. While we’ve featured the Acorn open source farming robot before, it’s back now with new and improved features and a simulation mode to help rapidly improve the platform’s software.

The first of the two new physical features is a fail-safe braking system. Since the robot uses electric geared hub motors for propulsion, the braking system consists of two normally closed relays which short the motor leads in emergency situations. This makes the motors see an extremely high load and stops them from turning. The robot has also been given advanced navigation facilities so that it can follow custom complex routes. And finally, [Taylor] created a simulation mode so that the robot’s entire software stack can be run in Docker and tested inside a simulation without using the actual robot.

For farmers who are looking to buck unsustainable modern agricultural practices while maintaining profitable farms, a platform like Acorn could be invaluable. With the ability to survey, seed, harvest, and even weed, it could perform every task of larger agricultural machinery. Of course, if you want to learn more about it, you can check out our earlier feature on this futuristic farming machine.