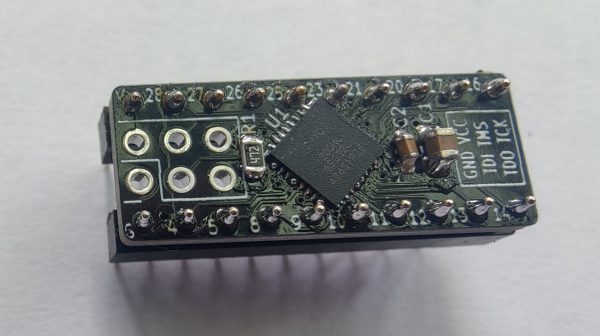

The Field-Programmable Gate Array (FPGA) is a powerful tool that is becoming more common across all kinds of different projects. They are effectively programmable hardware devices, capable of creating specific digital circuits and custom logic for a wide range of applications and can be much more versatile and powerful than a generic microcontroller. While they’re often used for rapid prototyping, they can also recreate specific integrated circuits, and are especially useful for retrocomputing. [nukeykt] has been developing a Sega Genesis clone using them, with some impressive results.

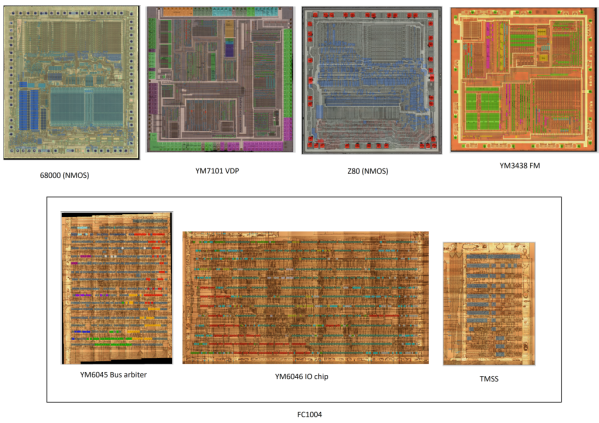

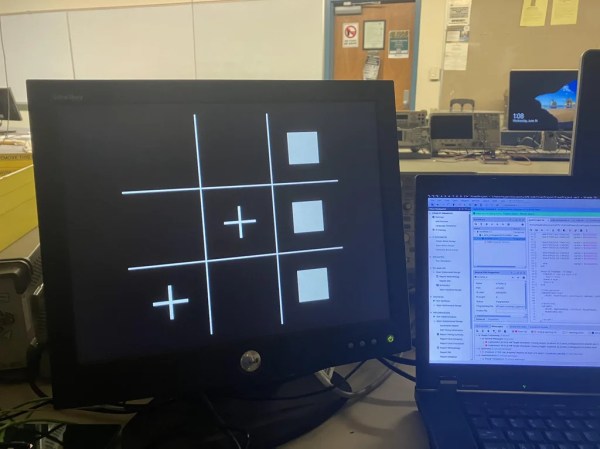

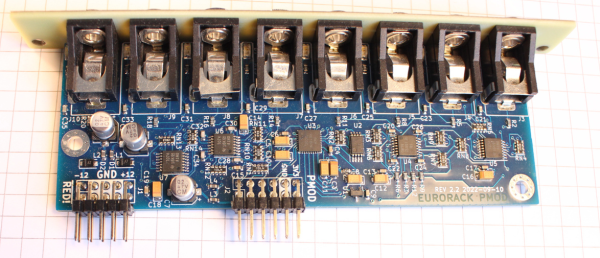

The Sega Genesis (or Mega Drive) was based around the fairly common Motorola 68000 processor, but this wasn’t the only processor in the console. There were a number of coprocessors including a Z80 and several chips from Yamaha to process audio. This project reproduces a number of these chips which are cycle-accurate using Verilog. The chips were recreated using images of de-capped original hardware, and although it doesn’t cover every chip from every version of the Genesis yet, it does have a version of the 68000, a Z80, and the combined Yamaha processor working and capable of playing plenty of games.

The project is still ongoing and eventually hopes to recreate the rest of the chipset using FPGAs. There’s also ongoing testing of the currently working chips, as some of them do still have a few bugs to work out. If you prefer to take a more purist approach to recreating 90s consoles, though, we recently featured a project which reproduced a Genesis development kit using original hardware.

Thanks to [Anonymous] for the tip!