Readers are likely familiar with photogrammetry, a method of creating 3D geometry from a series of 2D photos taken of an object or scene. To pull it off you need a lot of pictures, hundreds or even thousands, all taken from slightly different perspectives. Unfortunately the technique suffers where there are significant occlusions caused by overlapping elements, and shiny or reflective surfaces that appear to be different colors in each photo can also cause problems.

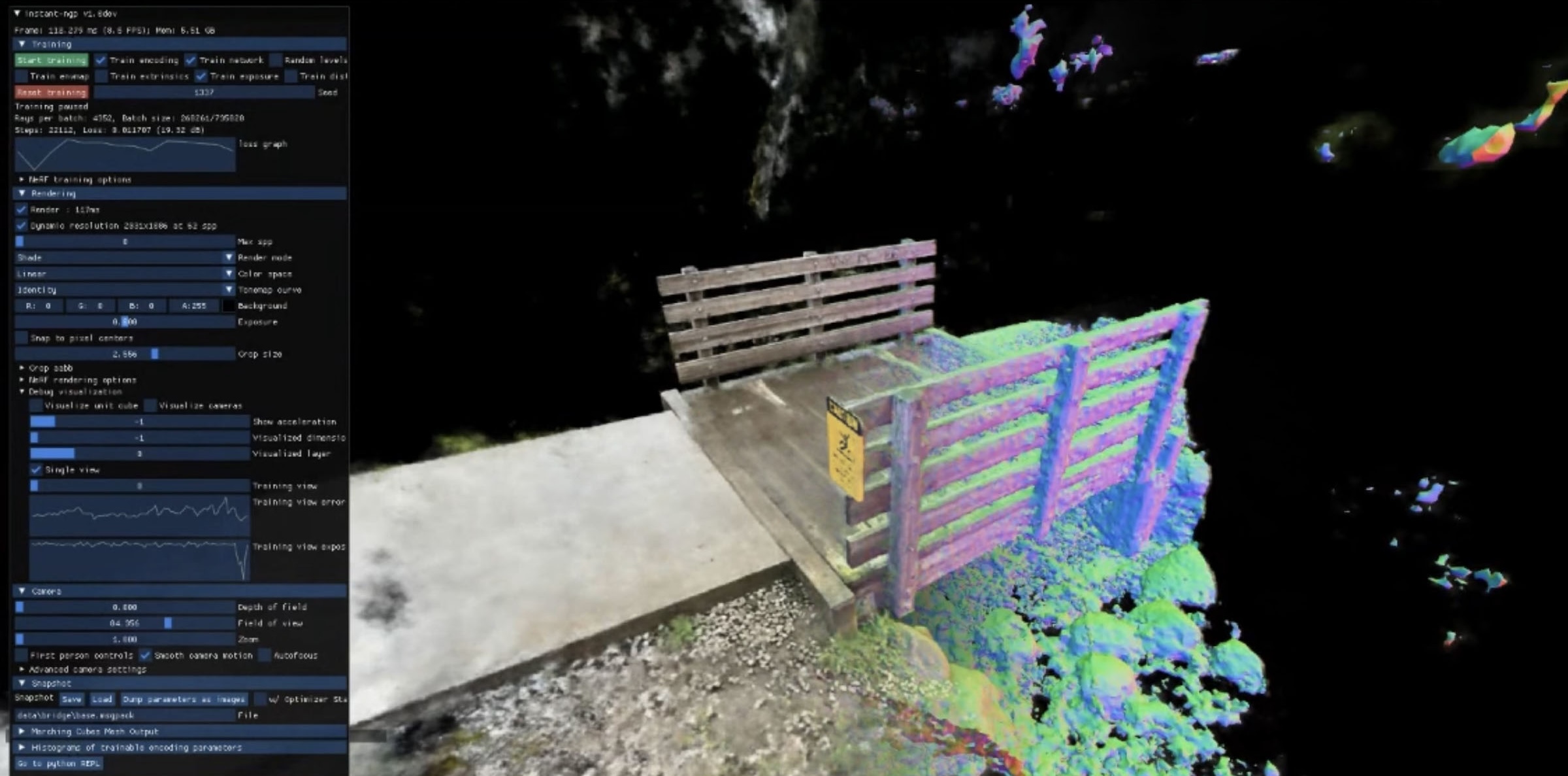

But new research from NVIDIA marries photogrammetry with artificial intelligence to create what the developers are calling an Instant Neural Radiance Field (NeRF). Not only does their method require far fewer images, as little as a few dozen according to NVIDIA, but the AI is able to better cope with the pain points of traditional photogrammetry; filling in the gaps of the occluded areas and leveraging reflections to create more realistic 3D scenes that reconstruct how shiny materials looked in their original environment.

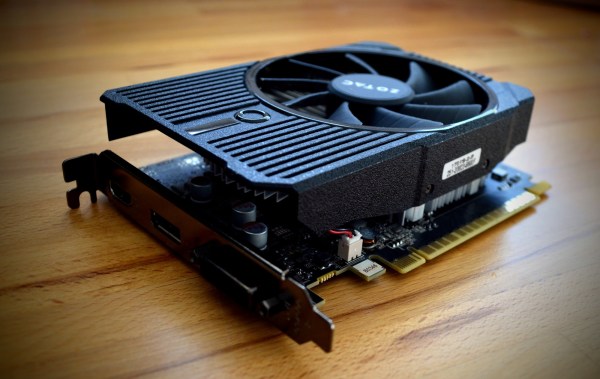

If you’ve got a CUDA-compatible NVIDIA graphics card in your machine, you can give the technique a shot right now. The tutorial video after the break will walk you through setup and some of the basics, showing how the 3D reconstruction is progressively refined over just a couple of minutes and then can be explored like a scene in a game engine. The Instant-NeRF tools include camera-path keyframing for exporting animations with higher quality results than the real-time previews. The technique seems better suited for outputting views and animations than models for 3D printing, though both are possible.

Don’t have the latest and greatest NVIDIA silicon? Don’t worry, you can still create some impressive 3D scans using “old school” photogrammetry — all you really need is a camera and a motorized turntable.

Continue reading “NeRF: Shoot Photos, Not Foam Darts, To See Around Corners”