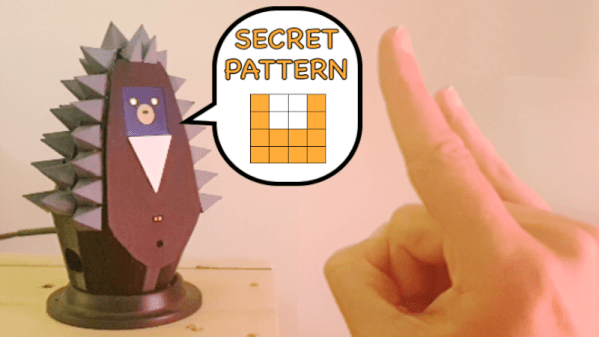

Traditionally, robot arms have been controlled by joysticks, buttons, or carefully programmed routines. For this homebrew build, however, [Narongporn Laosrisin] decided to go with gesture control instead.

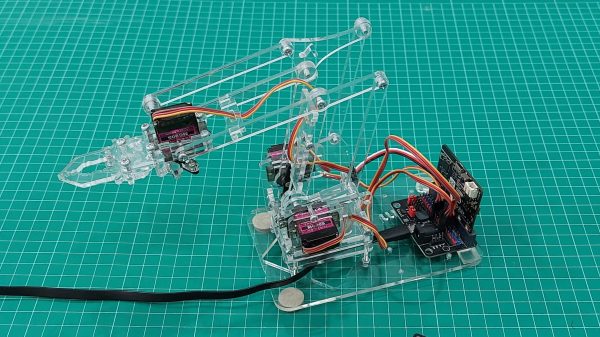

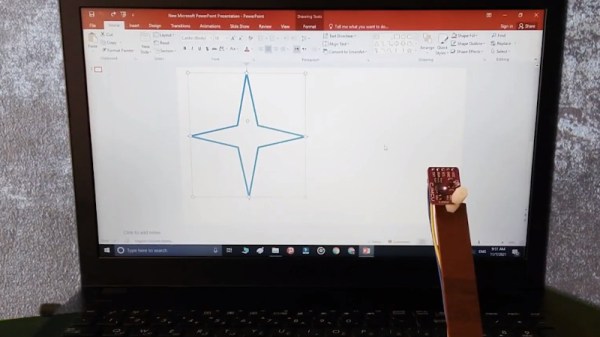

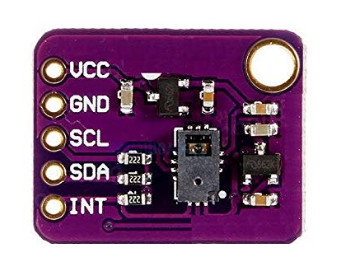

The MeArm robotic arm is built from laser-cut acrylic parts and is also available as a kit. It uses four servo motors: one rotates the base, two move the arm forward and back and up and down, and the fourth actuates the gripper. The servos are driven by a micro:bit microcontroller board, which receives its commands from a second micro:bit strapped to the operator. This second micro:bit detects gestures with its accelerometer and sends the corresponding commands to the arm's micro:bit over the boards' built-in radio link. The arm's micro:bit then drives the servos to execute the maneuver.
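To make the controller-to-arm pipeline concrete, here is a minimal, hardware-free Python sketch. It is not [Narongporn]'s code: the tilt threshold, step size, command names, joint names, and gripper angles are all assumptions chosen for illustration. The controller side classifies accelerometer x/y readings (micro:bit MicroPython reports these in milli-g) into a command string; the arm side turns each command into clamped servo angles. On real hardware the string would travel over the micro:bit `radio` module (`radio.send()` on one board, `radio.receive()` on the other); here a direct function call stands in for the radio.

```python
# Hypothetical, hardware-free sketch of a gesture-to-servo pipeline.
# Thresholds, command names, step size, joint names and gripper angles
# are assumptions, not values taken from the original project.

TILT_THRESHOLD = 300  # milli-g of tilt before a gesture counts (assumed)
STEP = 5              # degrees moved per received command (assumed)
SERVO_MIN, SERVO_MAX = 0, 180
GRIP_OPEN, GRIP_CLOSED = 90, 30  # hypothetical gripper angles


def classify_gesture(x: int, y: int, button: bool = False) -> str:
    """Controller side: turn accelerometer x/y (milli-g) into a command."""
    if button:
        return "GRIP"
    if abs(x) < TILT_THRESHOLD and abs(y) < TILT_THRESHOLD:
        return "HOLD"  # wrist roughly level: no movement
    if abs(x) >= abs(y):  # the more strongly tilted axis wins
        return "RIGHT" if x > 0 else "LEFT"
    return "FORWARD" if y > 0 else "BACK"


# Which joint each movement command nudges, and by how many degrees.
# The "lift" servo is left unmapped in this sketch for brevity.
MOVES = {
    "LEFT": ("base", -STEP),
    "RIGHT": ("base", STEP),
    "FORWARD": ("reach", STEP),
    "BACK": ("reach", -STEP),
}


def apply_command(angles: dict, command: str) -> dict:
    """Arm side: return updated servo angles, clamped to the servo range."""
    new = dict(angles)
    if command == "GRIP":
        # Toggle between the two gripper positions.
        new["gripper"] = GRIP_CLOSED if angles["gripper"] == GRIP_OPEN else GRIP_OPEN
    elif command in MOVES:
        joint, delta = MOVES[command]
        new[joint] = max(SERVO_MIN, min(SERVO_MAX, angles[joint] + delta))
    # "HOLD" and unknown commands leave every angle unchanged.
    return new


if __name__ == "__main__":
    arm = {"base": 90, "reach": 90, "lift": 90, "gripper": GRIP_OPEN}
    # Simulated (x, y, button) readings standing in for radio traffic.
    for x, y, btn in [(600, 50, False), (20, -700, False), (0, 0, True), (10, 10, False)]:
        cmd = classify_gesture(x, y, btn)
        arm = apply_command(arm, cmd)
        print(cmd, arm)
```

Sending a short command string rather than raw accelerometer values keeps the arm-side logic simple and makes the controller easy to swap out, which suits a classroom setting; streaming raw tilt values instead would allow proportional control at the cost of more processing on the arm's board.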

It may be a small robotic arm that can't lift much, but that's not the point. This project is a great way to teach students how to program microcontrollers, work with sensor inputs, and generally solve engineering puzzles. To that end, [Narongporn] looks to have a great teaching project on their hands. Video after the break.
