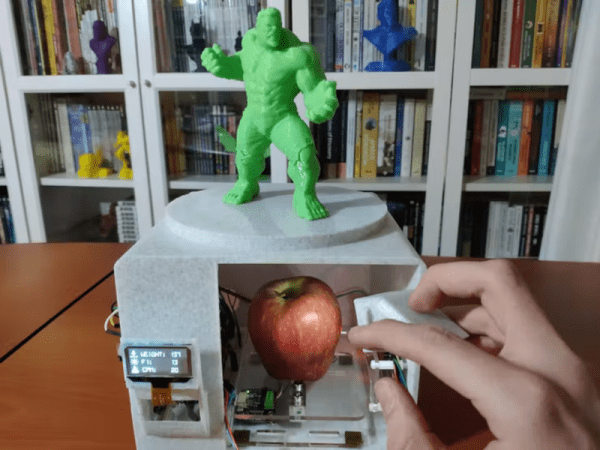

Diagnosing pipeline problems is important in industry to avoid costly or dangerous failures from cracked, broken, or damaged pipes. [Kutluhan Aktar] has built a system that uses AI to assist in this difficult task.

The core of the system is an MR60BHA1 60 GHz mmWave radar module, which is most typically used for breathing and heart rate detection. Here, it’s repurposed to detect fluctuating vibrations as a sign that a pipeline may be cracked or damaged. It’s paired with an Arduino Nicla Vision module, a smart camera that runs a neural network model on the captured radar data to flag potential pipe defects and photograph them. The various modules are assembled on a PCB resembling Dragonite, the Dragon/Flying-type Pokémon.
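To make the idea of flagging "fluctuating vibrations" concrete, here is a minimal Python sketch of how a stream of radar readings might be cut into windows and summarized before classification. It is not [Kutluhan]'s code: the function names, window size, sample values, and the `spread_limit` threshold are all hypothetical, and the simple threshold only stands in for the trained neural network that the real build uses.

```python
from statistics import mean, pstdev


def window_features(samples, window=8):
    """Split radar readings into fixed-size windows and summarize each one.

    The window size and the chosen statistics are illustrative assumptions.
    """
    features = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        features.append({
            "mean": mean(chunk),
            "spread": pstdev(chunk),  # how much the readings fluctuate
            "peak_to_peak": max(chunk) - min(chunk),
        })
    return features


def flag_fluctuating(features, spread_limit=0.5):
    """Stand-in for the trained model: flag windows with a large spread.

    spread_limit is a hand-picked, hypothetical value, not a tuned one.
    """
    return [f["spread"] > spread_limit for f in features]


# Made-up readings: one steady window followed by one erratic window.
steady = [1.0, 1.1, 0.9, 1.0, 1.0, 1.1, 0.9, 1.0]
erratic = [1.0, 3.0, 0.2, 2.5, 0.1, 2.8, 0.3, 3.1]

flags = flag_fluctuating(window_features(steady + erratic))
print(flags)
```

In the actual project, a trained model would take the place of `flag_fluctuating`, learning the decision boundary from labeled examples rather than relying on a fixed limit.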

[Kutluhan] walks us through the whole development process, including the creation of a web interface for the system. Of particular interest is the way the neural network was trained on real defect models that [Kutluhan] built using PVC pipe. We’ve looked at industrial pipelines in detail before, too. Video after the break.
