UEVR, the Universal Unreal Engine VR Mod by praydog, is made possible by some pretty neat software tricks. It leverages reverse engineering concepts and advanced techniques from game hacking to add VR support, including motion controls, to compatible Unreal Engine games.

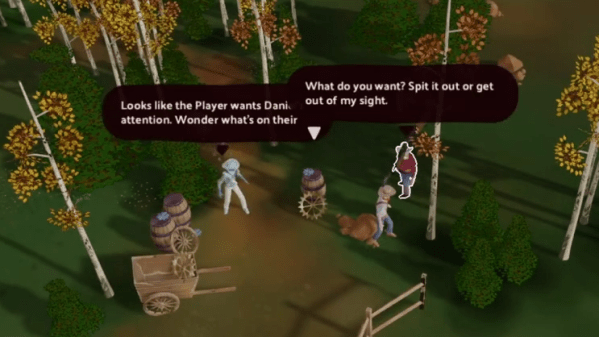

The UEVR project is a real-world application of various ideas and concepts, and the results are impressive. Not only can it easily make a game render in VR, it also manages the player’s perspective (there are options for attaching the camera view to game objects, for example) and sensibly maps inputs from VR controllers to whatever the game expects. This isn’t the first piece of software that attempts to convert flatscreen games to VR, but it’s by far the most impressive.

There is an in-depth discussion of the techniques UEVR uses to locate and manipulate game elements reliably and effectively. The goal isn’t anything nefarious; it’s to enable impressive on-demand VR mods in a semi-automated manner. (Naturally, though, some anti-cheat software considers this nefarious anyway.)

Many of the most interesting innovations in VR rely on some form of modding, from Skyrim magic that depends on your actual state of mind to DIY eye tracking added to headsets in a surprisingly effective, modular, and low-cost way. As usual, if you want cutting-edge experimentation, look to the modding community.