We’ve seen plenty of impressive robots of all sizes here at Hackaday, but recently we were particularly inspired by [Hans Jørgen Grimstad] and his thrifty mini sumo build.

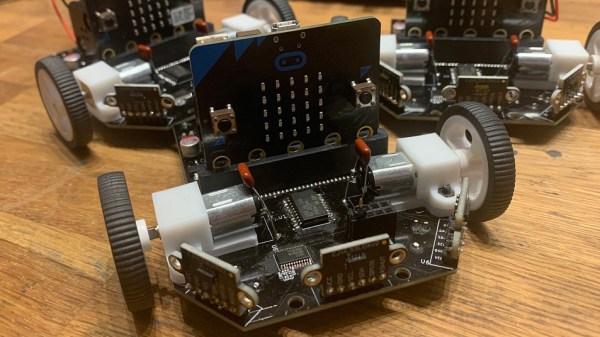

Using the BBC micro:bit platform as a starting point, Hans seized the opportunity to build a competitive mini sumo bot without breaking the bank. According to his blog, the enchanting little machine uses commonly available parts and cost around $30 to build in 2020 (or $50 according to the more recent video, perhaps reflecting the rising cost of hardware in these trying times).

The results can be seen in the video below. Some sacrifices were made – Hans admits that the 3.3 V linear regulator gets a little toasty, but doing away with a switching regulator keeps the design much simpler. The 700 RPM N20 motors are wired directly to the 6 V battery pack, giving this plucky wrestler plenty of sumo-smashing power.
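
For a rough feel of why that regulator runs warm, here is a back-of-the-envelope sketch. A linear regulator burns off the difference between input and output voltage as heat, so the loss scales with the load current. The 6 V and 3.3 V figures come from the build; the 100 mA load is purely an assumed number for illustration, not a measurement from Hans's robot, and the function names are our own.

```python
def linear_regulator_heat_w(v_in, v_out, load_a):
    """Heat dissipated by a linear regulator, in watts.

    The regulator drops (v_in - v_out) volts at the full load current,
    and all of that power ends up as heat.
    """
    return (v_in - v_out) * load_a


def linear_regulator_efficiency(v_in, v_out):
    """Best-case efficiency of a linear regulator (ignores quiescent current)."""
    return v_out / v_in


V_BATTERY = 6.0       # battery pack voltage, from the article
V_LOGIC = 3.3         # regulator output, from the article
ASSUMED_LOAD_A = 0.1  # ASSUMPTION: 100 mA logic-side load, illustrative only

heat = linear_regulator_heat_w(V_BATTERY, V_LOGIC, ASSUMED_LOAD_A)
eff = linear_regulator_efficiency(V_BATTERY, V_LOGIC)
print(f"{heat:.2f} W of heat, {eff:.0%} efficient")
```

Under that assumed load the regulator sheds roughly a quarter of a watt, and close to half of the power drawn on the logic side is lost as heat whatever the current. A switching regulator would waste far less, at the price of extra parts and complexity, which is the trade Hans chose not to make.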

Hans hopes that the build can lower barriers to entry for new builders in robot tournaments, being something that can easily be put together in a garage or local makerspace for a low, low price. The mini sumo form factor is a great beginner or amateur project, made even easier when makers like Hans put all the nitty-gritty details up on GitHub. This is certainly not the first accessible sumo robotics project that we have covered, and it won’t be the last. We hope we see loads more of these endearing robotic gladiators at future events.
