Modern oscilloscopes are often loaded with features, but every now and then you run into a feature that seems easy to implement yet isn’t available. [kgsws] wanted to use his Rigol DS1074 to show live measurements in his YouTube videos, but found out that this scope doesn’t support video output. Not to be deterred, [kgsws] decided to add this feature himself. In the video embedded below, he describes in detail the process of adding a USB Video Class (UVC) interface to his oscilloscope.

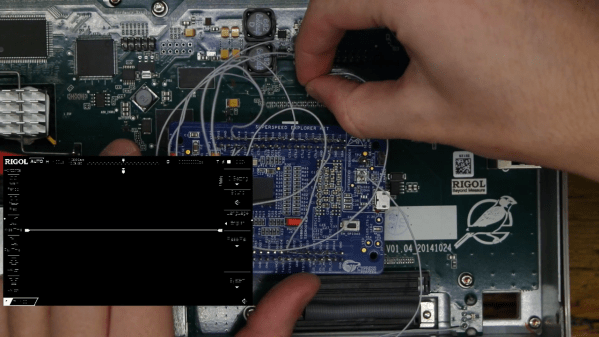

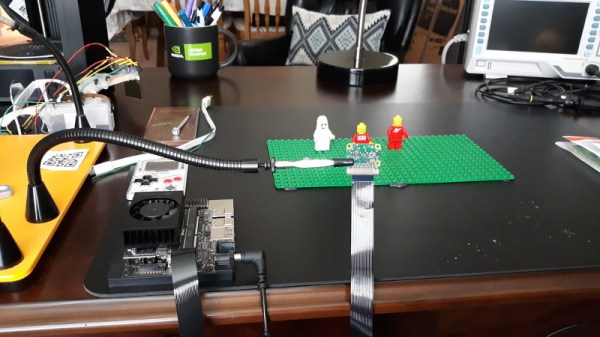

The basic idea was to find the signals going into the scope’s display and read them out using a Cypress EZ-USB board. This is a development board that can be used to design USB devices, and it supports UVC. However, with no documentation of any of the Rigol’s internal circuitry, [kgsws] had to probe the display connector to find out which pin carried which signal. And since he had no scope available other than this Rigol, he hooked up the various bits of the disassembled instrument so that it could (awkwardly) probe its own internal signals.
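To give a feel for what "reading out the display signals" involves, here is a minimal software model of turning a stream of display-bus samples back into frames. It is only a sketch: the write-up does not say which signals the Rigol's display uses, so the bus layout (vertical sync, horizontal sync, data enable, and a pixel value per clock tick) is an assumption based on how parallel RGB panels commonly work, and `Sample`, `assemble_frames`, and `make_test_signal` are hypothetical names. The actual capture happens in hardware on the EZ-USB board, not in Python.

```python
from typing import Iterable, List, NamedTuple


class Sample(NamedTuple):
    """One pixel-clock tick on a hypothetical parallel RGB display bus."""
    vsync: bool  # high during the vertical sync pulse (a new frame starts)
    hsync: bool  # high during the horizontal sync pulse (a new line starts)
    de: bool     # data enable: high while `pixel` carries visible data
    pixel: int   # value on the colour data lines for this tick


def assemble_frames(samples: Iterable[Sample]) -> List[List[List[int]]]:
    """Group pixel samples into lines and frames using the sync signals."""
    frames: List[List[List[int]]] = []
    frame: List[List[int]] = []
    line: List[int] = []
    for s in samples:
        if s.vsync:
            # A vertical sync closes the line and frame collected so far.
            if line:
                frame.append(line)
                line = []
            if frame:
                frames.append(frame)
                frame = []
        elif s.hsync:
            # A horizontal sync closes the current line.
            if line:
                frame.append(line)
                line = []
        elif s.de:
            # Visible pixel: keep it. Blanking ticks are simply ignored.
            line.append(s.pixel)
    # Flush whatever was collected after the last sync pulse.
    if line:
        frame.append(line)
    if frame:
        frames.append(frame)
    return frames


def make_test_signal(width: int, height: int, count: int) -> List[Sample]:
    """Build a synthetic signal: `count` frames of width x height pixels."""
    out: List[Sample] = []
    for f in range(count):
        out.append(Sample(True, False, False, 0))       # vsync pulse
        for y in range(height):
            out.append(Sample(False, True, False, 0))   # hsync pulse
            out.append(Sample(False, False, False, 0))  # blanking before data
            for x in range(width):
                out.append(Sample(False, False, True, f * 1000 + y * width + x))
            out.append(Sample(False, False, False, 0))  # blanking after data
    return out


if __name__ == "__main__":
    frames = assemble_frames(make_test_signal(4, 3, 2))
    print(len(frames), "frames of", len(frames[0]), "lines")
```

Probing the connector, as described above, is essentially the work of figuring out which physical pin plays which of these roles.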

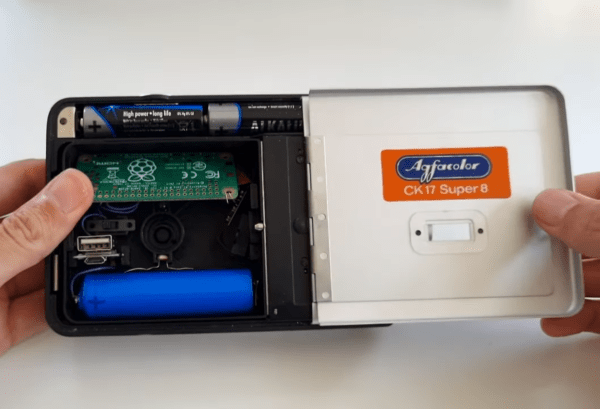

With the display signals mapped out, it was time to hook them up to the EZ-USB board. [kgsws] achieved this by soldering about two dozen tiny wires to SMD pads on the motherboard. The EZ-USB board itself was placed in the back of the scope’s case, but had to be stripped of unneeded components in order to save space and power. A very clever trick was the addition of a reed switch, which allowed [kgsws] to set the EZ-USB board to programming mode without having to open the scope’s case, by simply holding a magnet near the switch.

After soldering a USB connector into a spare slot in the RF shield the project was complete. The Rigol can now be connected to a PC and will simply appear as a video capture device, ready to be streamed or captured for [kgsws]’s future project videos. We’ve seen other hacks on the Rigol DS1000Z series to capture a series of screenshots or to enable additional bandwidth and features, but adding a live video output was not one of the options so far.