The Raspberry Pi, although first intended as an inexpensive single-board computer for use in education, is now ubiquitous in electronics communities. Its low price as well as Linux platform and accessible GPIO make it useful in many places outside the classroom. But, if you want to abandon the ease-of-use in favor of an even lower price, the Raspberry Pi foundation makes that possible as well with the RP2040 chip, commonly found on the Pico. [Jason] shows us one way to make use of this powerful chip by putting one in an audio digital signal processing board.

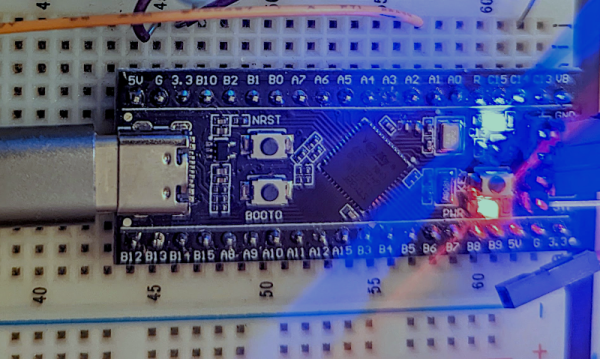

While development boards are available for this chip, [Jason] has opted instead for a custom PCB which he designed himself and includes an integrated headphone amplifier and 3.5 mm audio jacks. To do the actual DSP work, the RP2040 chip uses three 12-bit ADC channels and 16 controllable PWM channels. The platform is also equipped with the TLV320AIC3254 codec from Texas Instruments. With all of this put together, he has a functioning open-source platform he calls the DS-Pi.

[Jason] has built this as a platform for guitar effects and as a customizable guitar amp modeler, but with a platform that is Arduino-compatible and fairly easy to program it could be put to use for anything involving other types of music or audio processing, like this specialized MIDI-compatible guitar effects platform which is built around the same processor.