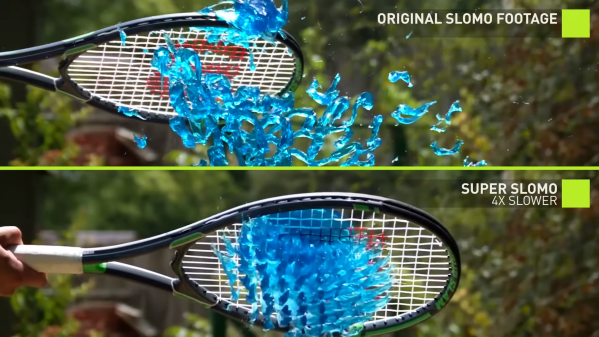

Nvidia is back at it again with another awesome demo of applied machine learning: artificially transforming standard video into slow motion – they’re so good at showing off what AI can do that anyone would think they were trying to sell hardware for it.

Though most modern phones and cameras have an option to record in slow motion, it often comes at the expense of resolution, and always at the expense of storage space. For really high frame rates you’ll need a specialist camera, and you often don’t know that you should be filming in slow motion until after an event has occurred. Wouldn’t it be nice if we could just convert standard video to slow motion after it was recorded?

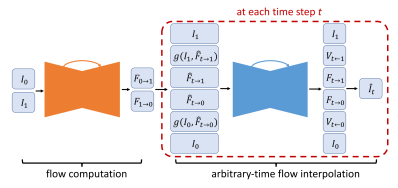

That’s just what Nvidia has done, all nicely documented in a paper. At its heart, the algorithm must take two frames, and artificially create one or more frames in between. This is not a manual algorithm that interpolates frames, this is a fully fledged deep-learning system. The Convolutional Neural Network (CNN) was trained on over a thousand videos – roughly 300k individual frames.

Since none of the parameters of the CNN are time-dependent, it’s possible to generate as many intermediate frames as required, something which sets this solution apart from previous approaches. In some of the shots in their demo video, 30fps video is converted to 240fps; this requires the creation of 7 additional frames for every pair of consecutive frames.

The video after the break is seriously impressive, though if you look carefully you can see the odd imperfection, like the hockey player’s skate or dancer’s arm. Deep learning is as much an art as a science, and if you understood all of the research paper then you’re doing pretty darn well. For the rest of us, get up to speed by wrapping your head around neural networks, and trying out the simplest Tensorflow example.

Continue reading “Nvidia Transforms Standard Video Into Slow Motion Using AI”