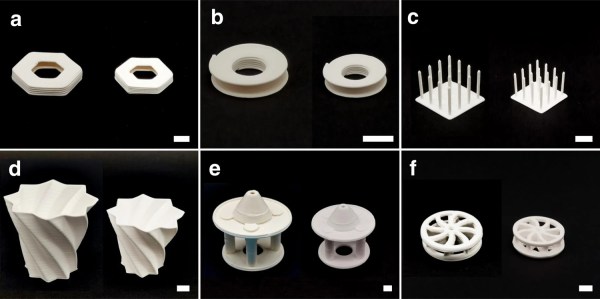

3D printing is at its most accessible (and most affordable) when printing in various plastics or resin. Printers of this sort are available for less than the cost of plenty of common power tools. Printing in materials other than plastic, though, can be a bit more involved. There are printers now for various metals and even concrete, but these can be orders of magnitude more expensive than their plastic cousins. And then there are materials which haven’t really materialized into a viable 3D printing system. Ceramic is one of those, and while some printers can already work with ceramic, this latest one makes some excellent strides in the technology.

Existing technology for printing in ceramic uses a ceramic slurry as the print medium and then cures it with ultraviolet light to solidify the material. The problem with ultraviolet light is that it doesn’t penetrate very far into the slurry, so it only meaningfully cures the outer portions. This can compromise the prints, especially around support structures. The key improvement made by the team at Jiangnan University was to cure the prints with near-infrared light instead, which penetrates much further into the material and cures it more thoroughly. This also greatly reduces or eliminates the need for supports in the print.
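To get a feel for why penetration depth matters so much, here is a small sketch of the Jacobs working curve, the textbook cure-depth model from resin stereolithography. It assumes light decays exponentially with depth and that resin solidifies only where the delivered dose exceeds a critical threshold. This is a generic model, not necessarily the one used in the Jiangnan paper, and the penetration depths and dose values below are hypothetical, chosen only for illustration.

```python
import math


def dose_at_depth(surface_dose: float, penetration_depth: float, depth: float) -> float:
    """Exposure remaining at `depth`, assuming simple exponential (Beer-Lambert-style) decay.

    `penetration_depth` is the depth at which the dose falls to 1/e of its surface value.
    """
    return surface_dose * math.exp(-depth / penetration_depth)


def cure_depth(surface_dose: float, critical_dose: float, penetration_depth: float) -> float:
    """Jacobs working curve: depth at which the dose drops to the gelation threshold.

    Returns 0.0 when even the surface never receives enough light to cure.
    """
    if surface_dose <= critical_dose:
        return 0.0
    return penetration_depth * math.log(surface_dose / critical_dose)


if __name__ == "__main__":
    # Hypothetical penetration depths in micrometres for a ceramic-loaded slurry;
    # illustrative only, not values from the Jiangnan University paper.
    penetration = {"UV": 50.0, "NIR": 500.0}
    # Same dose-to-threshold ratio for both light sources (also an assumption).
    surface_dose, critical_dose = 10.0, 1.0

    for source, dp in penetration.items():
        depth = cure_depth(surface_dose, critical_dose, dp)
        print(f"{source}: cures to about {depth:.0f} um")
```

With a tenfold dose margin, this toy model gives roughly 115 µm of cured material for the UV case and about 1151 µm for the NIR case. Cure depth scales linearly with penetration depth but only logarithmically with dose, which is why simply turning up a UV source is a poor substitute for light that reaches deeper into the slurry.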

The paper about the method is available in full at Nature, documenting all of the details surrounding this new system. It may be a while until this method is available to a wider audience, though. If you can get by with a print material that’s a little less exotic, it’s not too hard to get a metal 3D printer, as long as you are familiar with a bit of electrochemistry.