While earlier smartphones seemed to manage well enough with individual applications that only weighed in at a few megabytes, a perusal of the modern smartphone software store uncovers some positively monstrous file sizes. The fact that we’ve become accustomed to mobile applications requiring 100+ MB downloads on what’s often a metered Internet connection in only a few short years is pretty crazy if you stop to think about it.

Seeing reports that the Nest app for iOS tipped the scales at nearly 250 MB, [Alexandre Colucci] decided to investigate. On his blog he not only documents the process of taking the application apart piece by piece to find out just what’s eating up all that space, but lists some potential fixes which could shave a bit off the top. Even if you aren’t planning a spelunking expedition into your pocket supercomputer’s particular variant of the Netflix app, the methodology and tools he uses here are fascinating in their own right and might be something worth adding to your software bag of tricks.

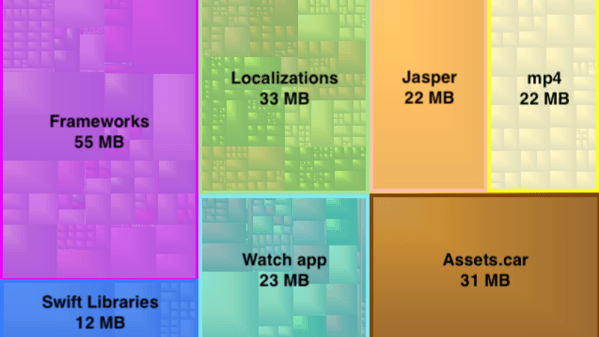

By passing the application’s files through a disk usage visualizer called GrandPerspective, [Alexandre] immediately identified some rather large blocks of content. The bundled Apple Watch version of the app takes up 23 MB, video and audio used to walk the user through the device setup weigh in at 22 MB, and localization files for various languages consumes a surprising 33 MB. But the biggest single contributor to the application’s heft is the assorted libraries and frameworks which total up to an incredible 67 MB.

Of course the question is, how much of it is really necessary? It’s hard to be sure from an outsider’s perspective, but [Alexandre] notes that a few of the libraries used seem to be redundant or obsolete. In some cases this could be the result of old code still lurking in the project, but the four different libraries used for user tracking probably aren’t in there by accident. It also stands to reason that the instructional videos could be offloaded to something like YouTube, so that only users who need to view them have to expend their bandwidth on it.

Of course the question is, how much of it is really necessary? It’s hard to be sure from an outsider’s perspective, but [Alexandre] notes that a few of the libraries used seem to be redundant or obsolete. In some cases this could be the result of old code still lurking in the project, but the four different libraries used for user tracking probably aren’t in there by accident. It also stands to reason that the instructional videos could be offloaded to something like YouTube, so that only users who need to view them have to expend their bandwidth on it.

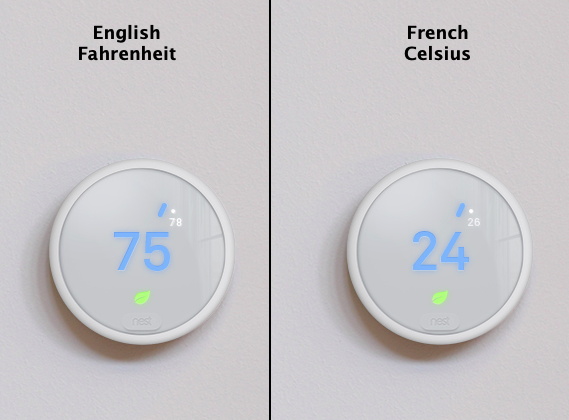

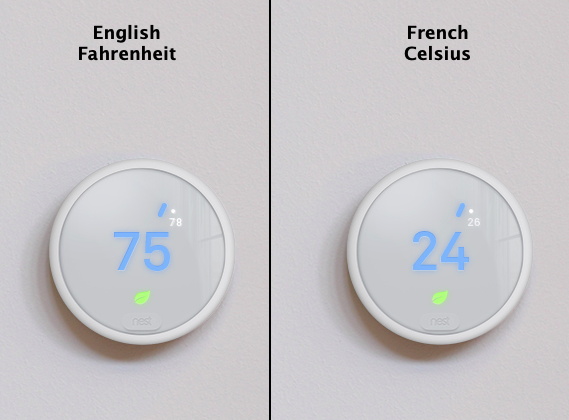

Getting a little deeper into things, [Alexandre] notes that some of the localization images appear to be redundant. As a specific example, he points to the images of the Nest itself displaying Fahrenheit and Celsius temperatures. While logically this should only be two image files, there are actually eight copies of the Celsius image, each filed away as language-specific. These redundant localization images could easily be stripped out, but with gains measured in only a few hundred kilobytes, it probably wasn’t considered worth the effort during development.

In the end there’s really not as much bloat as we might like to believe. There were some redundant files, maybe a few questionable library inclusions, and the Apple Watch version of the app could surely be separated out. All together, it might get you a savings of 30 – 40%, but still not enough to bring it down under 100 MB.

All signs point to the fact that modern smartphone software development is just a lot more burdensome than us hackers might like. Save for projects looking to put control back into the hand’s of the users, it looks like mobile operating systems aren’t going to be slimming down anytime soon.

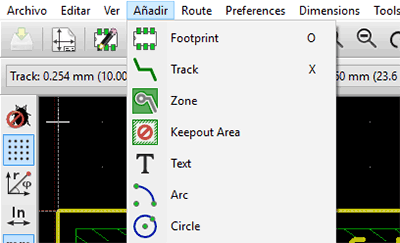

Mientras que ha habido otros intentos por localizar KiCad a otros idiomas, la mayoría de estos proyectos se encuentran incompletos. En una actualización de KiCad hace algunos meses, la localización al español ya contaba con algunas cadenas ya traducidas, pero no demasiadas. Los esfuerzos de [ElektroQuark] han acercado KiCad a millones de hablantes nativos de español, no solo algunos de sus menús.

Mientras que ha habido otros intentos por localizar KiCad a otros idiomas, la mayoría de estos proyectos se encuentran incompletos. En una actualización de KiCad hace algunos meses, la localización al español ya contaba con algunas cadenas ya traducidas, pero no demasiadas. Los esfuerzos de [ElektroQuark] han acercado KiCad a millones de hablantes nativos de español, no solo algunos de sus menús.