Self-driving is currently the Holy Grail in the automotive world, with a number of companies racing to build general-purpose autonomous vehicles that can get from point A to point B with no user input. While no one has brought one to market yet, at least one has promised this feature and had customers pay for it, but continually moved the goalposts for delivery due to how challenging this problem turns out to be. But it doesn’t need to be that hard or expensive to solve, at least in some situations.

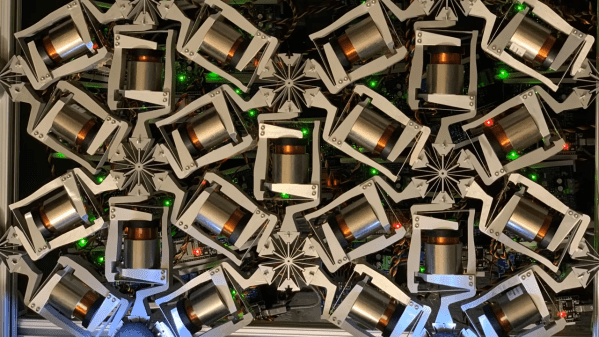

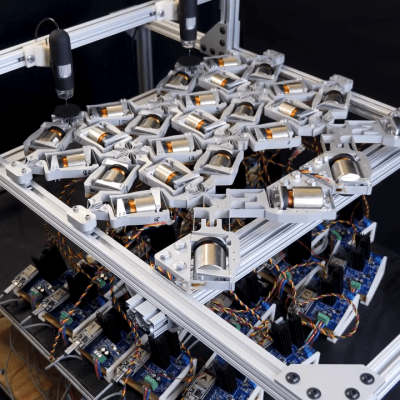

The situation in question is driving on a single stretch of highway, and only focuses on steering, so it doesn’t handle the accelerator or brake pedal input. The highway is driven normally, using a webcam to take images of the route and an Arduino to capture data about the steering angle. The idea here is that with enough training the Arduino could eventually steer the car. But first some math needs to happen on the training data since the steering wheel is almost always not turning the car, so the Arduino knows that actual steering events aren’t just statistical anomalies. After the training, the system does a surprisingly good job at “driving” based on this data, and does it on a budget not much larger than laptop, microcontroller, and webcam.

Admittedly, this project was a proof-of-concept to investigate machine learning, neural networks, and other statistical algorithms used in these sorts of systems, and doesn’t actually drive any cars on any roadways. Even the creator says he wouldn’t trust it himself, but that he was pleasantly surprised by the results of such a simple system. It could also be expanded out to handle brake and accelerator pedals with separate neural networks as well. It’s not our first budget-friendly self-driving system, either. This one makes it happen with the enormous computing resources of a single Android smartphone.