DOOM will forever be remembered as one of the founding games of the entire FPS genre. It has also long been fertile ground for hackers and modders. [Nick Bild] decided to bring gesture control to id Software’s classic shooter, courtesy of machine learning.

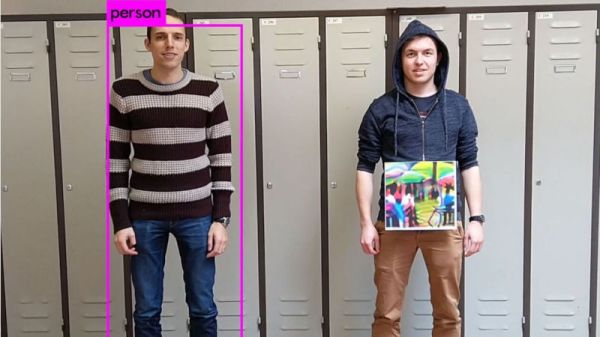

The setup consists of a Jetson Nano fitted with a camera, which films the player and uses a convolutional neural network to recognise the player’s various gestures. Once recognised, an API request is sent to a laptop playing Doom which simulates the relevant keystrokes. The laptop is hooked up to a projector, creating a large screen which allows the wildly gesturing player to more easily follow the action.
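The hand-off from classifier to keystroke can be sketched roughly as below. This is a minimal illustration and not [Nick]'s actual code: the gesture labels, key names, confidence threshold, host name, and `/press` endpoint are all assumptions made up for the example, and the request is only built, not sent.

```python
import json
from urllib import request

# Hypothetical gesture labels and key bindings; the post does not list the real ones.
GESTURE_TO_KEY = {
    "forward": "up",
    "backward": "down",
    "turn_left": "left",
    "turn_right": "right",
    "shoot": "ctrl",
}


def pick_gesture(scores, threshold=0.8):
    """Return the highest-scoring label, or None if the classifier is unsure.

    `scores` maps gesture labels to class probabilities, as a CNN's softmax
    output might. The 0.8 threshold is an arbitrary illustrative value.
    """
    if not scores:
        return None
    label = max(scores, key=scores.get)
    return label if scores[label] >= threshold else None


def build_key_request(label, host="http://laptop.local:5000"):
    """Build (but do not send) a POST asking the laptop to press one key.

    The host and the /press endpoint are placeholders, not a real API.
    """
    body = json.dumps({"key": GESTURE_TO_KEY[label]}).encode("utf-8")
    return request.Request(
        f"{host}/press",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Pretend output for a single camera frame.
    scores = {"forward": 0.93, "shoot": 0.04, "turn_left": 0.03}
    label = pick_gesture(scores)
    if label is not None:
        req = build_key_request(label)
        print(req.get_method(), req.full_url, req.data.decode())
        # request.urlopen(req) would actually send it to the laptop.
```

Because a classifier like this emits a single label per frame, each frame maps to at most one key press.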

The neural network was trained on 3,300 images, 300 per gesture, which works out to 11 gesture classes. [Nick] found that training on a larger data set actually produced worse results, as he became less diligent about performing the gestures consistently while capturing it. This demonstrates that quality matters in training networks, as well as quantity.

The network is reportedly fairly reliable and appears to work quite well. Unfortunately, playability is limited, as it’s not possible to gesture for more than one key at once. Overall though, it serves as a tidy example of how to do gesture recognition with CNNs.

If you’re not convinced by this demonstration, you might be interested to learn that neural networks can also be used to name tomatoes. If you don’t want to roll your own pose detection, check out this selfie drone that uses CMU’s OpenPose library. Video after the break.
