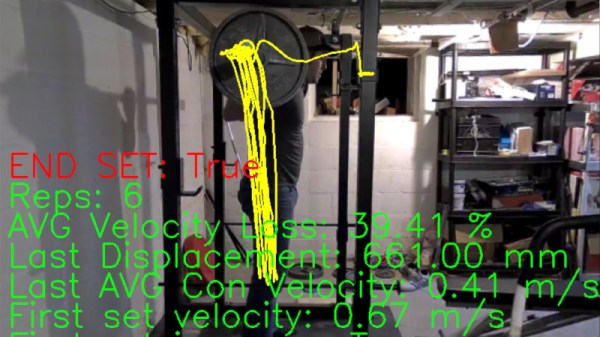

In the world of weight training, the buzzword of the moment is VBT, or Velocity Based Training. Sensors measure the speed and position of a weight as it moves through each repetition, giving the athlete instant feedback and data with which to refine their training routine. The sensors are typically accelerometers, but [Kris] has taken a different tack, using a webcam and machine vision to do the same job.

The barbell has a green disc attached to its end, which the software tracks to measure velocity. It issues a warning when the velocity of a repetition drops below a preset level, telling the athlete to stop their set before pushing themselves too far. Under the hood is a Python script using OpenCV, and the write-up in his GitHub repository takes us through set-up and the camera calibration that removes the effects of distortion. Distances within the image are calibrated from the known size of the green disc, allowing the software to accurately chart the distance through which it travels.
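To make the calibration and velocity arithmetic concrete, here is a minimal sketch in plain Python. It is not taken from [Kris]'s repository: the function names, the disc size, the frame rate, and the 0.5 m/s threshold are all hypothetical, and it assumes the disc's centre and apparent diameter in pixels have already been found in each frame (for example by colour thresholding in OpenCV).

```python
# Illustrative sketch only; every name and number here is an assumption,
# not the project's actual code.

def metres_per_pixel(disc_diameter_m, disc_diameter_px):
    """Scale factor derived from the marker's known physical size."""
    return disc_diameter_m / disc_diameter_px


def frame_velocities(y_px, fps, scale):
    """Vertical velocity in m/s between consecutive frames.

    Image y coordinates grow downward, so the sign is flipped
    to make upward bar movement positive.
    """
    return [-(b - a) * scale * fps for a, b in zip(y_px, y_px[1:])]


def mean_concentric_velocity(velocities):
    """Average of the upward (positive) samples, or 0.0 if there are none."""
    upward = [v for v in velocities if v > 0]
    return sum(upward) / len(upward) if upward else 0.0


def should_stop(mean_velocity, threshold):
    """True when a repetition was slower than the preset level."""
    return mean_velocity < threshold


# Hypothetical example: a 10 cm disc that appears 50 px wide, filmed at 30 fps.
scale = metres_per_pixel(0.10, 50)
rep = frame_velocities([400, 390, 380, 370], fps=30, scale=scale)
if should_stop(mean_concentric_velocity(rep), threshold=0.5):
    print("Velocity below threshold: end the set")
```

Because the scale comes from the disc itself, the camera can sit at any distance, provided the disc stays roughly in the plane in which it was measured and the image has already been corrected for distortion.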

We’ve not seen a machine vision approach to weight training before, but we have seen one using accelerometers. Maybe this project will re-ignite interest in this field.