For their final project in [Dr. Bruce Land]’s ECE4760 course at Cornell, [Jay] and [Ricardo] tackled a problem that is the bane of all machinists. Their project finds the XY zero of a part in a CNC machine using computer vision, vastly reducing the time it takes to set up a workpiece and giving us yet another reason to water down the phrase ‘Internet of Things’ by calling this the Internet of CNC Machines.

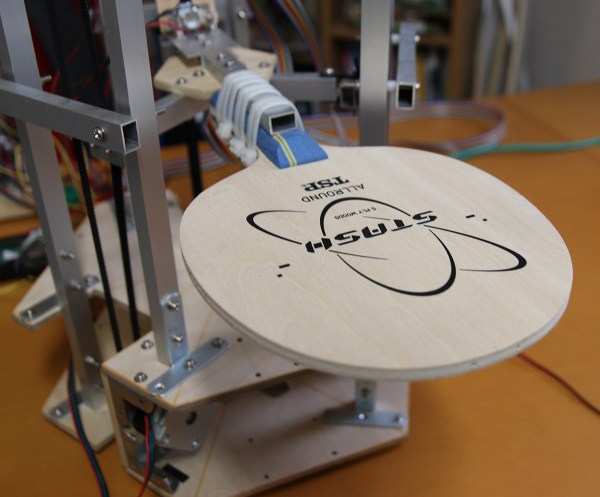

For the hardware, [Jay] and [Ricardo] used a PIC32 to interface with an Arducam module, a WiFi module, and an inductive sensor for measuring the distance to the workpiece. All of this was brought together on a PCB specifically designed to be single-sided (smart!), and tucked away in an enclosure that can be easily attached to the spindle of a CNC mill. This contraption looks down on a workpiece and uses OpenCV to find the center of a hole in a fixture. When the center is found, the mill is zeroed in both X and Y.

The software is simpler than you might expect, because none of the OpenCV processing runs on the microcontroller. Detecting the center of the bore, for instance, happens on a laptop running a few Python scripts. The mill attachment communicates with the laptop over WiFi and sends it a few images from the downward-facing camera. From there, the laptop detects the center of the bore in the fixture plate and generates some G-code to send over to the mill.
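To make that last step concrete, here is a minimal sketch of how a detected bore center might be turned into zeroing G-code. It is not the authors' code. The function names, the millimeters-per-pixel scale, and the axis convention (image x along machine +X, image y pointing opposite to machine +Y) are all assumptions for illustration, and the `G10 L20 P1` line is one way to set the G54 origin on controllers such as LinuxCNC and Grbl; other controllers may want a different command.

```python
def bore_offset_mm(bore_px, axis_px, mm_per_px):
    """Offset of the bore center from the spindle axis, in millimeters.

    bore_px   -- (x, y) pixel position of the detected bore center
    axis_px   -- (x, y) pixel position where the spindle axis lands in the image
    mm_per_px -- image scale at the working distance (assumed to be known)
    """
    dx_px = bore_px[0] - axis_px[0]
    dy_px = bore_px[1] - axis_px[1]
    # Assumption: image y grows downward while machine +Y points the other way.
    return dx_px * mm_per_px, -dy_px * mm_per_px


def zeroing_gcode(offset_mm):
    """G-code lines that jog onto the bore center and zero X and Y there."""
    dx, dy = offset_mm
    return [
        "G21",                        # units: millimeters
        "G91",                        # incremental moves
        f"G0 X{dx:.3f} Y{dy:.3f}",    # rapid move by the measured offset
        "G90",                        # back to absolute moves
        "G10 L20 P1 X0 Y0",           # make this position X0 Y0 in G54
    ]


if __name__ == "__main__":
    offset = bore_offset_mm(bore_px=(350, 220), axis_px=(320, 240), mm_per_px=0.05)
    print("\n".join(zeroing_gcode(offset)))
```

In practice the scale would depend on the camera's height above the part, which is presumably where the distance sensor comes in.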

While the device works remarkably well, and is able to center the mill fairly quickly and without a lot of user intervention, there were a few problems. The camera is not perfectly aligned with the axis of the spindle, making the math harder than it should be. Also, the enclosure isn’t rated for an environment where coolant is sprayed everywhere. Those are small quibbles, and these problems could be fixed simply by designing and printing another enclosure. The device works, though, and really cuts down on the time it takes to zero out a mill.
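For a sense of what the extra math for an off-axis camera can look like, here is one common calibration trick, sketched under idealized assumptions; it is not necessarily what [Jay] and [Ricardo] did. If the camera rides on the spindle, you can image the same bore at two spindle angles 180° apart. With a camera looking straight down and no lens distortion, the spindle axis lands at the midpoint of the two detected centers. The function name and pixel values below are hypothetical.

```python
def spindle_axis_pixel(center_at_0, center_at_180):
    """Estimate where the spindle axis falls in the image.

    center_at_0   -- (x, y) pixel position of the bore at spindle angle 0
    center_at_180 -- (x, y) pixel position of the same bore after turning
                     the spindle (and the camera with it) by 180 degrees

    Assumes the camera looks straight down with no lens distortion, so
    rotating the spindle rotates the image about the axis point.
    """
    x = (center_at_0[0] + center_at_180[0]) / 2
    y = (center_at_0[1] + center_at_180[1]) / 2
    return x, y


if __name__ == "__main__":
    axis = spindle_axis_pixel((400, 300), (240, 180))
    print(axis)  # midpoint of the two detections
```

The bore's offset from the spindle is then measured from this point rather than from the middle of the frame.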

You can check out the video description of the build below.
