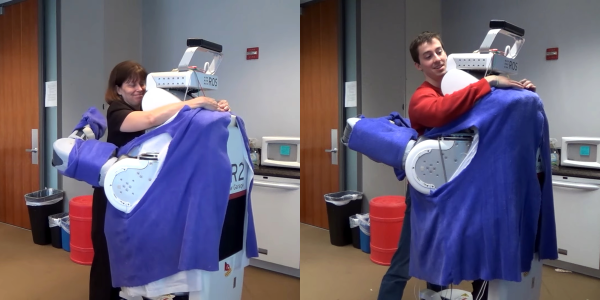

Roomba aside, domestic robots are still in search of the killer app they need to really take off. For the other kind of home automation to succeed, designers are going to have to find the most odious domestic task and make it go away at the push of a button. A T-shirt folding robot is probably a good first step.

First and foremost, hats off to [Stefano] for his copious documentation on this project. Not only are complete instructions for building the laundry bot listed, but there’s also a full use-case analysis and even a complete exploration of prior art in the space. [Stefano]’s exhaustive analysis led to a set of stepper-actuated panels, laser-cut from thin plywood, and arranged to make the series of folds needed to take a T-shirt from flat to folded in just a few seconds.

The video below shows the folder in action, and while it’s not especially fast right now, we’ll chalk that up to the project still being under development. We can see a few areas for improvement: making the panels from acrylic might help the folded shirt slide off the bot more easily, and pneumatic actuators might make for quicker movements and sharper folds. The challenges of real-world laundry folding are considerable, but this is a great start, and we’ll be on the lookout for improvements.
