In the home computer market of the 1980s, there were several winners that are still household names four decades later: the Commodore 64, the Apple II and the Sinclair Spectrum, to name a few. But where there are winners, there are bound to be losers as well – the Mattel Aquarius being a good example. A price war between the bigger players, combined with a rather poor hardware design, meant that the Aquarius was discontinued just a few months after its introduction in 1983. However, this makes it exactly the type of obscure machine that [Leaded Solder] likes to tinker with, so he was happy to finally get his hands on a neat specimen listed on eBay. He wrote an interesting blog post detailing his efforts to connect this old beast to a modern TV.

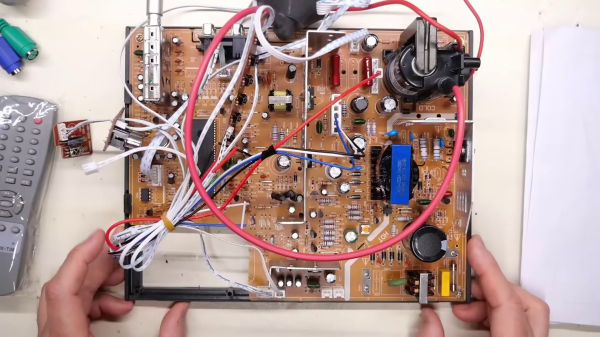

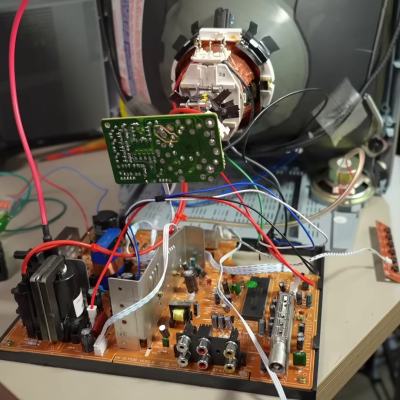

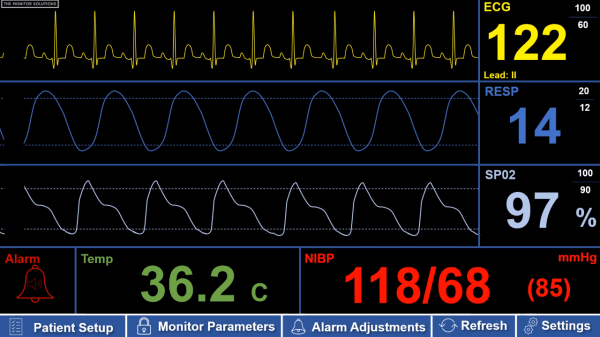

The main issue with the Aquarius is that it only has an RF video output, which results in a rather poor rendition of its already very limited graphics capabilities. Luckily, there is a fix available in the form of a composite A/V adapter that’s an almost plug-and-play upgrade. The only thing you need to do, as [Leaded Solder] illustrates in his blog post, is open up the computer, desolder the RF modulator and solder the A/V adapter in its place. Getting to that point was a bit tricky due to heavy EMI shields that were fixed in place with lots of solder, requiring liberal use of a desoldering iron.