A couple of decades ago, one of *the* smartphone accessories to have was a Bluetooth keyboard that projected its key layout onto a table surface, where letters could be typed in a virtual space. If we’re honest, we remember them as not being very good. But that hasn’t stopped the idea from resurfacing from time to time.

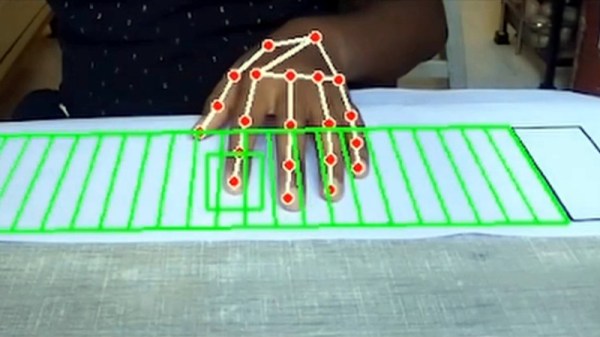

We’re reminded of it by [Mayuresh1611]’s paper piano, in which a webcam watches over a virtual piano keyboard and detects the player’s fingers, so that the matching note is played from a set of MP3 files.

The README is frustratingly light on details other than setup, but a dive into the requirements reveals OpenCV as expected, and TensorFlow. It seems there’s a training step before a would-be virtual virtuoso can tinkle on the non-existent ivories, but the demo shows that there’s something playable in there. We like the idea, and wonder whether it could also be applied to other instruments such as percussion. A table as a drum kit would surely be just as much fun.
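Since the README doesn’t spell out how a finger position becomes a note, here’s our own minimal Python sketch of one way it could work. It assumes some detector has already produced a fingertip position in pixels, and that the keyboard sits in a known rectangle of the frame split into equal-width keys. The note names and the functions `key_for_fingertip` and `newly_pressed` are hypothetical, made up for illustration rather than taken from the project.

```python
from typing import Optional, Sequence, Set, Tuple

# Hypothetical note names; the project's real sound file names may differ.
NOTES = ["C4", "D4", "E4", "F4", "G4", "A4", "B4", "C5"]


def key_for_fingertip(
    tip: Tuple[float, float],
    keyboard_box: Tuple[float, float, float, float],
    notes: Sequence[str] = NOTES,
) -> Optional[str]:
    """Return the note under a fingertip, or None if it is off the keyboard.

    tip is (x, y) in pixels; keyboard_box is (left, top, right, bottom).
    Keys are assumed to be equal-width strips laid out left to right.
    """
    x, y = tip
    left, top, right, bottom = keyboard_box
    if not (left <= x < right and top <= y < bottom):
        return None
    key_width = (right - left) / len(notes)
    index = int((x - left) // key_width)
    # Guard against floating point rounding at the right-hand edge.
    return notes[min(index, len(notes) - 1)]


def newly_pressed(previous: Set[str], current: Set[str]) -> Set[str]:
    """Notes held in this frame that were not held in the last one."""
    return current - previous
```

In a real setup the fingertip coordinates would come from whatever model runs on the webcam frames, and each newly pressed note would pick the sound file to play. Comparing against the previous frame keeps a held finger from retriggering the same note on every frame.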

This certainly isn’t the first touch piano we’ve featured, but we think it may be the only one using OpenCV. A previous one used more conventional capacitive sensors.