The new hotness in microcontrollers is the ESP32. This chip, developed by Espressif, is the follow-on to the very popular ESP8266, the cheap, low-power, very capable WiFi-enabled microcontroller that came on the scene a few years ago. The ESP32 is another beast entirely with two powerful cores, WiFi and Bluetooth, and peripherals galore. You can even put an NES emulator in there.

While the ESP32 is significantly more powerful, it has so far been available only in modules. What would really be cool is a single chip loaded up with integrated flash, filter caps, and a clock, all in a 7x7 mm QFN package. Meet the ESP32-Pico-D4 (PDF). It is, effectively, an ESP32 on a chip. It’s just the ticket if you’re trying to cram wireless, fast microcontroller wizardry into a small package.

At its heart, the ESP32-Pico is your normal ESP32 module with a Tensilica dual-core LX6 microprocessor, 448 kB of ROM, 520 kB of SRAM, 4 MB of Flash (it can support up to 16 MB), wireless with 802.11 b/g/n and Bluetooth 4.2, and a cornucopia of peripherals that includes an SD card interface, UART, SPI, SDIO, LED and motor PWM, I2S, I2C, cap touch sensors, and a Hall effect sensor. It’s quite literally everything you could ever want in a microcontroller.

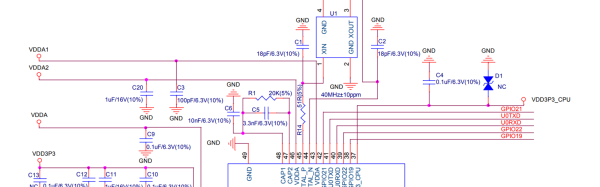

Disregarding the just barely hand-solderable package and the need for a PCB antenna, the ESP32-Pico requires very few support components. Really, the only thing going on in the reference schematic is a bunch of bypass caps. This is, by far, the easiest and smallest method to put WiFi, Bluetooth, and a powerful microcontroller in a project. It will surely be a very, very popular chip for hobbyist electronics for years to come. Of course, it will be even more popular if Espressif also manages to put this chip in a QFP package in addition to the QFN.

Unfortunately, apart from the PDF released by Espressif, details on the ESP32-on-a-chip are sparse. We don’t know when we’ll be able to get our grubby hands on a tray, tube, or reel of these chips. That said, there’s enough information here to start designing a breakout board. Have at it; we’d love to see what the community comes up with.

Shout out to [Dave] for the tip.