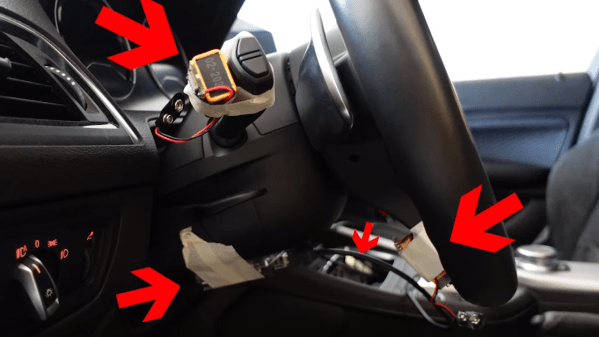

These days, our automobiles sport glittering consoles adorned with dials and digits to keep us up to date with our car’s vitals. In the future, though, perhaps we just won’t need such vast amounts of information at our fingertips if our cars are driving themselves around. No information? How will we tell the car what to do? To that end, [Felix] has us covered with Stewart, a tactile gesture-input interface for the modern, self-driving car.

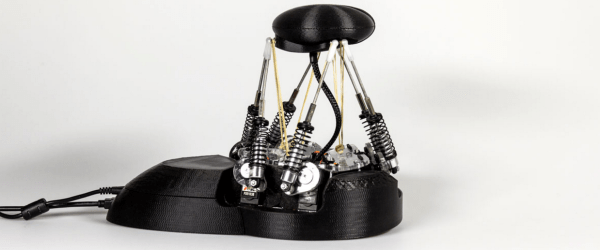

Stewart is a 6-DOF “Stewart Interface” capable of both gesture input and haptic output. Gesture input lets the car’s passenger deliver driving suggestions to the car. The gentle twist of a wrist can signal a turn at the next intersection; pulling back on Stewart’s head, joystick style, signals a “whoa, slow down there, bub!” For haptic output, six servos push Stewart’s head in the car’s intended direction. If the passenger agrees with the car, she can let Stewart gesture itself in the desired direction; if she disagrees, she can veto the car’s choice by moving her hand directly against Stewart’s current output gesture. Overall, the interface unites the intentions of the car and the intentions of the passenger in a haptic device that makes the connection feel seamless!
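We don't know how [Felix] handles this in software, but the suggest-or-veto logic described above can be sketched in a few lines of Python. Everything here is our own assumption for illustration: the `Gesture` fields, the normalised ranges, the `DEADBAND` threshold, and the function names are all hypothetical and not taken from the project.

```python
from dataclasses import dataclass

# Hypothetical threshold: how far the head must move (in normalised units)
# before a motion counts as a deliberate gesture rather than noise.
DEADBAND = 0.2


@dataclass(frozen=True)
class Gesture:
    """Assumed, simplified reading of Stewart's head position."""
    twist: float  # wrist twist: -1.0 (full left) to +1.0 (full right)
    pull: float   # pull-back on the head: 0.0 (none) to 1.0 (full)


def suggestion(passenger: Gesture) -> str:
    """Translate a passenger gesture into a driving suggestion."""
    if passenger.pull > DEADBAND:
        return "slow down"
    if passenger.twist > DEADBAND:
        return "turn right"
    if passenger.twist < -DEADBAND:
        return "turn left"
    return "no suggestion"


def is_veto(car_twist: float, passenger_twist: float) -> bool:
    """True when the passenger pushes against the car's output gesture.

    Both motions must be outside the deadband and point in opposite
    directions, which shows up as a negative product.
    """
    deliberate = abs(car_twist) > DEADBAND and abs(passenger_twist) > DEADBAND
    return deliberate and car_twist * passenger_twist < 0


if __name__ == "__main__":
    print(suggestion(Gesture(twist=0.5, pull=0.0)))
    print(is_veto(car_twist=0.6, passenger_twist=-0.5))
```

A real build would read all six degrees of freedom and would probably filter the signal over time, but the idea is the same: small motions are ignored, deliberate ones become suggestions, and a deliberate push against the servos counts as a "no."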

We know we’re not supposed to comment on the “how” with art projects, but we’re engineers, and this one makes us giddy with delight. We’re imagining those RC car shock absorbers damping the jittery servos and giving the user a nice resistive feel. Interconnects are laser-cut acrylic, and the shell is a smoothly contoured 3D print. We’ve seen Stewart Interfaces before, but nothing with the look and feel of a sleek design feature on its way to being dropped into the cockpit of our future self-driving cars.
