Fail2ban is a great tool for dynamically blocking IP addresses that show bad behavior, like making repeated login attempts. It was just announced that a vulnerability could allow an attacker to take over a machine by being blocked by Fail2ban. The problem is in the mail-whois action, where an email is sent to the administrator containing the whois information. Whois information is potentially attacker-controlled data, and Fail2ban doesn’t properly sanitize the input before piping it into the mail binary. Mailutils has a feature that uses the tilde character as an escape sequence, allowing commands to be run while composing a message. Because Fail2ban passes those tilde commands through untouched, malicious whois data can trivially run commands on the system. Whois is one of the old-school Unix protocols that runs in the clear, so a MitM attack makes this particularly easy. If you use Fail2ban, make sure to update to 0.10.7 or 0.11.3, or purge any use of mail-whois from your active configs.
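To make the class of bug concrete, here is a minimal Python sketch of neutralizing tilde escapes in untrusted text before it reaches a mail client. It is not the upstream Fail2ban patch. It assumes escapes are only honored when the tilde is the first character of a line, and the function name and sample whois text are made up for illustration.

```python
def neutralize_tilde_escapes(text: str) -> str:
    """Prefix every line that starts with '~' with a space.

    Assumption: the mail client only treats '~' as an escape when it is
    the first character of a line, so shifting it one column defuses it.
    """
    safe_lines = []
    for line in text.splitlines():
        if line.startswith("~"):
            line = " " + line
        safe_lines.append(line)
    return "\n".join(safe_lines)


# Hypothetical attacker-controlled whois reply.
untrusted_whois = "netname: EXAMPLE\n~! touch /tmp/pwned\nremarks: fine ~ here"
print(neutralize_tilde_escapes(untrusted_whois))
```

Disabling escape processing in the mail client itself, where the client supports it, is the more robust route, since it does not depend on guessing every escape form.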

Streaming Video From A Mouse

The first optical mice had to be used on a special mousepad with a printed grid that the four-quadrant infrared sensor could detect. Later, mice swapped the infrared sensor for an optoelectronic module (essentially a tiny, very low-resolution camera) and powerful image processing. [8051enthusiast] was lying in bed one day when he decided to crack the firmware in his gaming mouse, and eventually ended up streaming frames from the camera inside.

Step one was to analyze the protocol between the mouse and the host machine. Booting up a Windows VM and Wireshark allowed him to capture all the control transfers to the USB controller. Since it was a “programmable” gaming mouse that allowed a user to set macros, [8051enthusiast] could use the control transfers that would normally query the stored macro to return the memory at an arbitrary location instead. A little bit of tinkering later, he had a dump of the firmware. Looking at the most abundant bytes, it seemed to match a profile similar to that of the Intel 8051. In a fascinating blur of reverse engineering, he traced the main structure of the program back from the function that sets the LED colors for the scroll wheel (which depend on the current DPI setting). Unfortunately, the firmware prevented the same macro mechanism from writing to arbitrary locations.
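The "most abundant bytes" trick is easy to try on any dump. The following Python sketch counts byte frequencies so the top values can be compared against the common opcodes of a candidate architecture. The sample bytes are invented for the example and are not taken from the actual mouse firmware.

```python
from collections import Counter


def most_common_bytes(blob: bytes, top: int = 5) -> list[tuple[int, int]]:
    """Return the `top` most frequent byte values as (value, count) pairs."""
    return Counter(blob).most_common(top)


# Made-up stand-in for a firmware dump; a real one would be read from a file.
sample = bytes([0x90, 0xE0, 0x90, 0xF0, 0x12, 0x90, 0xE0, 0x22])

for value, count in most_common_bytes(sample, top=3):
    print(f"0x{value:02X}: {count}")
```

A histogram like this is only a hint: it narrows down the instruction set, and the guess still has to be confirmed by checking that the disassembly makes sense.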

Looking through the code, he found that a good old buffer overflow exploit seemed possible, but it caused the system to reset via the watchdog. So he took another approach: invoking recovery mode, which a set_report control transfer can trigger, and loading entirely new firmware onto the device.

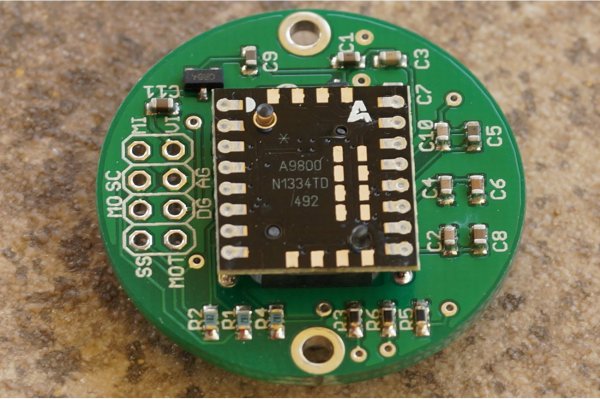

Next, he moved on to the ADNS-9800 optical sensor (pictured in the top image provided by JACK Enterprises), which had a large encrypted blob in the firmware. Some poking around and deduction led to a guess that the optical sensor was another 8051 system. With some clever reasoning and sheer determination, [8051enthusiast] was able to crack the XOR stream cipher encryption with a program that showed him candidate disassemblies and allowed him to pick the most likely one. With the firmware decrypted, he was able to see the encryption code and confirm the algorithm he had deduced.
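For readers unfamiliar with XOR stream ciphers, this Python sketch shows the two properties that make such an attack feasible: applying the same keystream twice restores the data, and any correctly guessed plaintext immediately reveals the matching keystream bytes. The repeating three-byte keystream and the sample data are assumptions for illustration; the write-up summarized here does not say how the ADNS-9800 generates its real keystream.

```python
from itertools import cycle


def xor_stream(data: bytes, keystream: bytes) -> bytes:
    """XOR `data` with a repeating keystream (encrypts and decrypts alike)."""
    return bytes(b ^ k for b, k in zip(data, cycle(keystream)))


def recover_keystream(ciphertext: bytes, guessed_plaintext: bytes) -> bytes:
    """Keystream bytes implied by a plaintext guess at the same offsets."""
    return bytes(c ^ p for c, p in zip(ciphertext, guessed_plaintext))


# Hypothetical firmware fragment and keystream, both invented for the demo.
plaintext = bytes([0x90, 0x12, 0x34, 0xE0, 0x22])
keystream = bytes([0xA5, 0x5A, 0xC3])

ciphertext = xor_stream(plaintext, keystream)
assert xor_stream(ciphertext, keystream) == plaintext

# A correct guess for the first three plaintext bytes exposes the keystream.
print(recover_keystream(ciphertext, plaintext[:3]).hex())
```

This is why picking the most plausible disassembly works as an attack: each plausible instruction is effectively a plaintext guess that can be checked against the rest of the blob.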

With the sensor now cracked open, it was on to the 30 x 30 pixel, 240 fps video stream. The sensor communicates over SPI, and the USB controller has to bit-bang the connection as it lacks SPI hardware. Loading two custom firmware images with a few extra functions was easy enough, but the resulting 7 fps was somewhat lacking. The first optimization was loop unrolling and removing some sleeps in the firmware, which brought it up to 34 fps. By measuring the cycle counts of individual instructions, he was able to find some faster alternatives, such as a mov that took one cycle less than a setb. Going from a 17-cycle loop to an 11-cycle loop, along with some other optimizations, gave him 54 fps. Not content to stop there, he modified the ADNS-9800 firmware to sample continuously rather than waiting for the USB controller to finish processing. While this yielded 100 fps, there was still more to do: image compression. With that in place and the stream running at a whopping 230 fps, [8051enthusiast] decided to call it done.
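To see why the inner loop matters so much, here is a rough Python model of a bit-banged SPI read. It is not the author's firmware, which runs as 8051 code on the USB controller; the `FakeSpiSlave` class is a hypothetical stand-in for the sensor, and SPI mode and timing details are deliberately left out.

```python
class FakeSpiSlave:
    """Hypothetical sensor stand-in: presents one bit per clock pulse, MSB first."""

    def __init__(self, data: bytes):
        self._bits = [(byte >> shift) & 1
                      for byte in data
                      for shift in range(7, -1, -1)]
        self._pos = 0
        self.miso = 0

    def clock_pulse(self) -> None:
        # Put the next bit on the data line; idle low once data runs out.
        self.miso = self._bits[self._pos] if self._pos < len(self._bits) else 0
        self._pos += 1


def bitbang_read_byte(slave: FakeSpiSlave) -> int:
    """Read one byte by toggling the clock and sampling the data line in software."""
    value = 0
    for _ in range(8):
        slave.clock_pulse()                  # software-driven clock edge
        value = (value << 1) | slave.miso    # sample and shift in one bit
    return value


sensor = FakeSpiSlave(bytes([0xA5, 0x3C]))
first = bitbang_read_byte(sensor)
second = bitbang_read_byte(sensor)
print(hex(first), hex(second))
```

Every bit of every pixel passes through a loop like this, so shaving a 17-cycle loop down to 11 cycles removes roughly a third of the time spent there.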

However, there was one last thing he wanted to do: control the mouse with the video stream. Writing some image processing into his Python-based program that received the image files allowed him to use the mouse, however impractically.

All in all, it’s an incredible journey by [8051enthusiast], and we would highly recommend reading the whole write-up yourself. This isn’t the first time he’s modified the firmware of an 8051-based device; he previously did the same to the WiFi chipset in his laptop.

[Thanks to JACK Enterprises over at Tindie for the use of the image of an ADNS-9800.]

Here’s How To Sniff Out An LCD Protocol, But How Do You Look Up The Controller?

Nothing feels better than getting a salvaged component to do your bidding. But in the land of electronic displays, the process can quickly become a quagmire. For more complex displays, the secret incantation necessary just to get the things to turn on can be a non-starter. Today’s exercise targets a much simpler character display and has the added benefit of being able to sniff the data from a functioning radio unit.

When [Amen] upgraded his DAB radio, he eyed the 16×2 character display for salvage. With three traces between the display and the controller, it didn’t take long to trace out the two data lines using an oscilloscope. Turning on the scope’s decoding function verified his hunch that it was using I2C, and gave him plenty of data to work from. This included a device address, an initialization string, and the fact that each character was drawn on screen using two bytes on the data bus.
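Once a scope or logic analyzer has decoded the I2C traffic, turning it back into text takes only a few lines. The Python sketch below assumes each character write is a two-byte payload: a control byte with a "data" flag at bit 6, followed by the character code. That layout is an assumption based on how ST7032-style controllers are commonly documented, and the capture is invented, so check both against your own logic dump.

```python
RS_DATA_BIT = 0x40  # assumed: set in the control byte when the next byte is display data


def decode_characters(transfers: list[bytes]) -> str:
    """Rebuild displayed text from decoded I2C write payloads."""
    chars = []
    for payload in transfers:
        if len(payload) != 2:
            continue  # not a two-byte character write
        control, data = payload
        if control & RS_DATA_BIT:
            chars.append(chr(data))
    return "".join(chars)


# Hypothetical capture: one command write followed by two character writes.
capture = [bytes([0x00, 0x38]), bytes([0x40, 0x48]), bytes([0x40, 0x69])]
print(decode_characters(capture))
```

Payloads that do not match the character pattern are the interesting ones for bring-up, since that is where the initialization string lives.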

He says that some searching turned up the most likely hardware: a Winstar WO1602I-TFH-AT derived from an ST7032 controller. What we’re wondering is whether there is a good resource for searching this kind of info. Our go-to is the LCD display and controller reference we covered here back in March. It’s a great resource, but turns up bupkis on this particular display. Are we relegated to using DuckDuckGo for initialization strings and hoping someone’s published a driver or a logic dump of these parts in the past, or is there a better way to go about this? Let us know in the comments!

Cloud-Based Atari Gaming

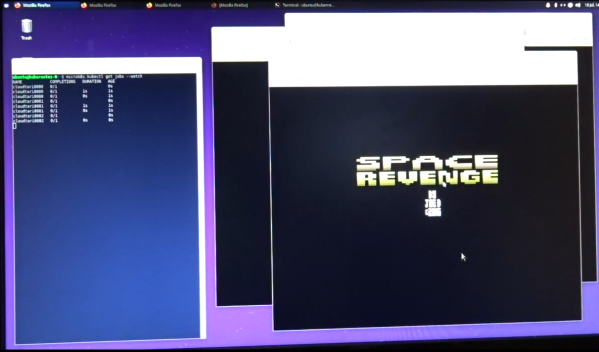

While Google Stadia may be the latest and greatest in the realm of cloud gaming, there are plenty of other ways to experience this new style of gameplay, especially if you’re willing to go a little retro. This project, for example, takes the Atari 2600 into the cloud for a nearly complete gaming experience that is fully hosted on a server, including the video rendering.

[Michael Kohn] created this project mostly as a way to get more familiar with Kubernetes, a piece of open-source software that helps automate the deployment of container-based applications. The setup runs on two Raspberry Pi 4s, which can be accessed by pointing a browser at the correct IP address on his network, or by connecting to them via VNC. From there, the emulator runs a specific game called Space Revenge, chosen for its memory requirements and because it is unencumbered by copyright. There are some limitations, in that the emulator he’s using doesn’t implement all of the Atari controls and the sound isn’t available through the remote desktop setup, but it’s impressive nonetheless.

[Michael] glosses over this part, but he wrote the Atari emulator himself “as quickly as possible” so he could focus on the Kubernetes setup. This is impressive in its own right, and of course he goes beyond this to show exactly how to set up the cloud-based system on his GitHub page. He also thinks there’s potential for a system like this to run an NES setup. If you’re looking for something a little more modern, though, it is possible to set up a cloud-based gaming system with a Nintendo Switch too.

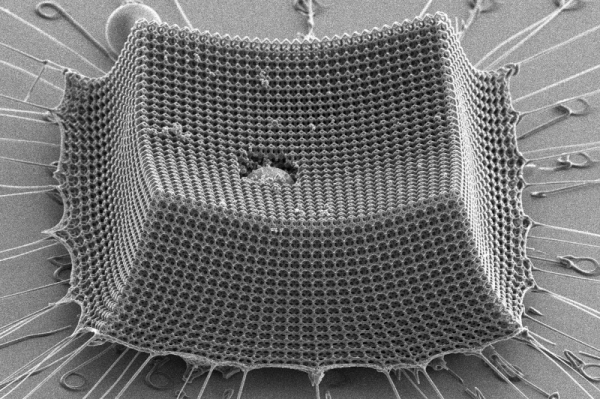

3D Printed Material Might Replace Kevlar

Prior to 1970, bulletproof vests were pretty iffy, with a history extending as far as the 1500s when there were attempts to make metal armor that was bulletproof. By the 20th century there was ballistic nylon, but it took kevlar to produce garments with real protection against projectile impact. Now a 3D printed nanomaterial might replace kevlar.

A group of scientists has published a paper describing a material built from interconnected tetrakaidecahedrons made up of carbon struts, which are arranged via two-photon lithography.

We know that tetrakaidecahedrons sound like a modern invention, but, in fact, they were proposed by Lord Kelvin in the 19th century as a shape that would allow things to be packed together with minimum surface area. Sometimes known as a Kelvin cell, the shape is used to model foam, among other things.

The 3D printing, in this case, is a form of lithography using precise lasers, so you probably won’t be making any of this on your Ender 3. However, the shape might have some other uses when applied to conventional 3D printing methods.

We’ve actually had an interest in the history of kevlar. Then again, kevlar isn’t the only game in town.

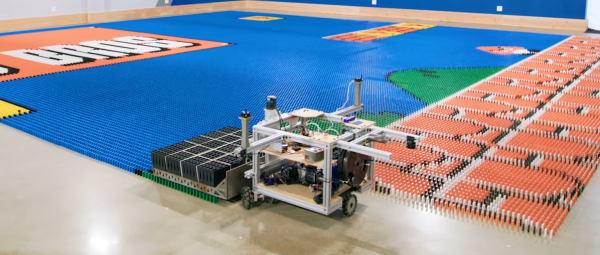

Create Large Scale Domino Art With A Robot

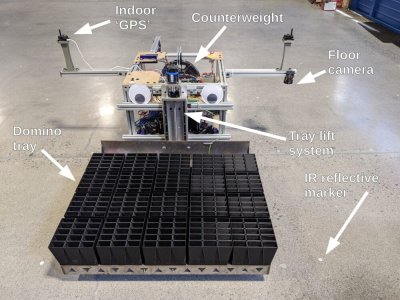

Creating large domino art displays is a long and nerve-racking process, where bumping a single domino can mean starting from scratch. To automate the process of creating these displays, a team consisting of [Mark Rober], [John Luke], [Josh], and [Alex Baucom] built the Dominator, a robot capable of laying 100,000 dominos in just over 24 hours. Video after the break.

[Mark Rober] had been toying with the idea for a few years, and the project finally got off the ground after [Mark] mentioned it in a talk he gave at the 2019 Bay Area Maker Faire. To pull it off, the team created an entire domino laying system, including an automated loading station, a precision indoor positioning system, and the robot itself. The robot is built around a frame of aluminum extrusions, riding on three omnidirectional wheels driven by precision servo motors. A large tray mounted to the front of the robot can hold and release 300 dominos at a time. The primary controller is a Raspberry Pi 4, which receives positioning information from a Marvelmind indoor positioning system and a downward-facing IR camera that looks for reflective markers on the floor. The loading station uses a conveyor to feed the different colored dominos to an industrial Kuka robot that drops them down a grid of tubes that can hold multiple layers at once.


Pinewood Derby Scale Measures CG

If you suffer from nostalgia, you might remember carving a block of wood into a car, adding some wheels, and racing it against other contestants in a pinewood derby. Today’s derby is decidedly high tech though, and we were impressed with this car scale that also figures out the car’s center of gravity.

The scale is based on an Arduino, of course, along with a pair of load cells read through HX711 amplifiers. Why a pair? The device finds the center of gravity by weighing the front and rear of the car separately.
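The math behind the two-scale trick is a simple moment balance: the total weight acting at the center of gravity must produce the same moment about the front support as the rear reading does. Here is a short Python sketch, with the function name, units, and sample readings chosen purely for illustration rather than taken from the project.

```python
def center_of_gravity(front_weight: float, rear_weight: float,
                      support_spacing: float) -> float:
    """Distance of the CG from the front support.

    Moment balance about the front support:
        (front + rear) * x_cg = rear * support_spacing
    """
    total = front_weight + rear_weight
    if total <= 0:
        raise ValueError("total weight must be positive")
    return rear_weight * support_spacing / total


# Hypothetical readings: grams on each load cell, supports 100 mm apart.
print(center_of_gravity(50.0, 90.0, 100.0))
```

With equal readings the result is exactly half the support spacing, which makes a handy sanity check when calibrating the two load cells.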