Open Source software is always trustworthy, right? [Bertus] broke a story about a malicious Python package called “Colourama”. When used, it secretly installs a VBscript that watches the system clipboard for a Bitcoin address, and replaces that address with a hardcoded one. Essentially this plugin attempts to redirects Bitcoin payments to whoever wrote the “colourama” library.

Why would anyone install this thing? There is a legitimate package named “Colorama” that takes ANSI color commands, and translates them to the Windows terminal. It’s a fairly popular library, but more importantly, the name contains a word with multiple spellings. If you ask a friend to recommend a color library and she says “coulourama” with a British accent, you might just spell it that way. So the attack is simple: copy the original project’s code into a new misspelled project, and add a nasty surprise.

Sneaking malicious software into existing codebases isn’t new, and this particular cheap and easy attack vector has a name: “typo-squatting”. But how did this package get hosted on PyPi, the main source of community contributed goodness for Python? How many of you have downloaded packages from PyPi without looking through all of the source? pip install colorama? We’d guess that it’s nearly all of us who use Python.

It’s not just Python, either. A similar issue was found on the NPM javascript repository in 2017. A user submitted a handful of new packages, all typo-squatting on existing, popular packages. Each package contained malicious code that grabbed environment variables and uploaded them to the author. How many web devs installed these packages in a hurry?

Of course, this problem isn’t unique to open source. “Abstractism” was a game hosted on Steam, until it was discovered to be mining Monero while gamers were playing. There are plenty of other examples of malicious software masquerading as something else– a sizable chunk of my day job is cleaning up computers after someone tried to download Flash Player from a shady website.

Buyer Beware

In the open source world, we’ve become accustomed to simply downloading libraries that purport to do exactly the cool thing we’re looking for, and none of us have the time to pore through the code line by line. How can you trust them?

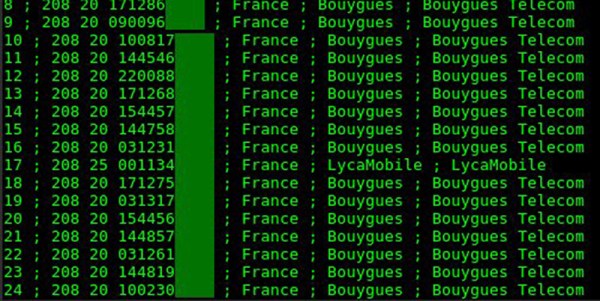

Repositories like PyPi do a good job of faithfully packaging the libraries and programs that are submitted to them. As the size of these repositories grow, it becomes less and less practical for every package to be manually reviewed. PyPi lists 156,750 projeccts. Automated scanning like [Bertus] was doing is a great step towards keeping malicious code out of our repositories. Indeed, [Bertus] has found eleven other malicious packages while testing the PyPi repository. But cleverer hackers will probably find their way around automated testing.

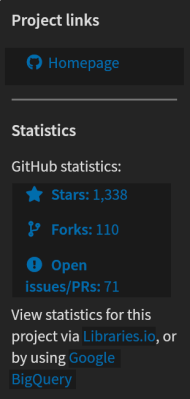

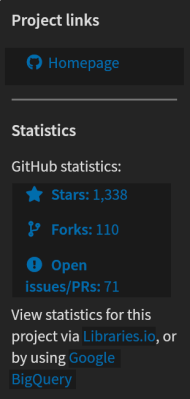

That the libraries are open source does add an extra layer of reliability, because the code can in principal be audited by anyone, anytime. As libraries are used, bugs are found, and features are added, more and more people are intentionally and unintentionally reviewing the code. In the “colourama” example, a long Base64 string was decoded and executed. It doesn’t take a professional researcher to realize something fishy is going on. At some point, enough people have reviewed a codebase that it can be reasonably trusted. “Colorama” has well over a thousand stars on Github, and 28 contributors. But did you check that before downloading it?

That the libraries are open source does add an extra layer of reliability, because the code can in principal be audited by anyone, anytime. As libraries are used, bugs are found, and features are added, more and more people are intentionally and unintentionally reviewing the code. In the “colourama” example, a long Base64 string was decoded and executed. It doesn’t take a professional researcher to realize something fishy is going on. At some point, enough people have reviewed a codebase that it can be reasonably trusted. “Colorama” has well over a thousand stars on Github, and 28 contributors. But did you check that before downloading it?

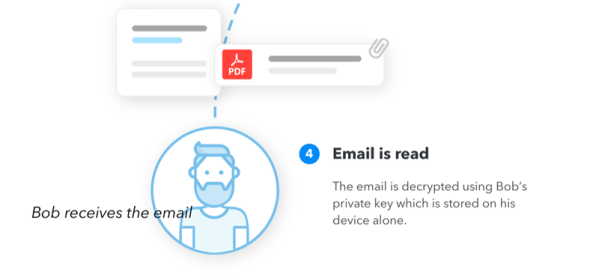

Typo-squatting abuses trust, taking advantage of a similar name and whoever isn’t paying quite close enough attention. It’s not practical for every user to check every package in their operating system. How, then, do we have any trust in any install? Cryptography solves some of these problems, but it cannot overcome the human element. A typo in a url, trusting a brand new project, or even obfuscated C code can fool the best of us from time to time.

What’s the solution? How do we have any confidence in any of our software? When downloading from the web, there are some good habits that go a long way to protect against attacks. Cross check that the project’s website and source code actually point to each other. Check for typos in URLs. Don’t trust a download just because it’s located on a popular repository.

But most importantly, check the project’s reputation, the number of contributors to the project, and maybe even their reputation. You wouldn’t order something on eBay without checking the seller’s feedback, would you? Do the same for software libraries.

A further layer of security can be found in using libraries supported by popular distributions. In quality distributions, each package has a maintainer that is familiar with the project being maintained. While they aren’t checking each line of code of every project, they are ensuring that “colorama” gets packaged instead of “colourama”. In contrast to PyPi’s 156,750 Python modules, Fedora packages only around 4,000. This selection is a good thing.

Repositories like PyPi and NPM are simply not the carefully curated sources of trustworthy software that we sometimes think them to be– and we should act accordingly. Look carefully into the project’s reputation. If the library is packaged by your distribution of choice, you can probably pass this job off to the distribution’s maintainers.

At the end of the day, short of going through the code line by line, some trust anchor is necessary. If you’re blindly installing random libraries, even from a “trustworthy” repository, you’re letting your guard down.