If you haven’t noticed, CRTs are getting hard to find. You can’t get them at Goodwill, because thrift stores don’t take giant tube TVs anymore. You can’t find them on the curb set out for the trash man, because he won’t pick them up. It’s hard to find them on eBay, because no one wants to ship them. That’s a shame, because the best way to enjoy retrocomputers and old game systems is on a CRT with RGB input. If you don’t already have one, the best you can hope for is an old CRT with a composite input.

But there’s a way. [The 8-Bit Guy] just opened up a late-’90s CRT TV and modded it to accept RGB input. The result is a monitor for your Apple or your Commodore, and a much better display for your Sega Genesis.

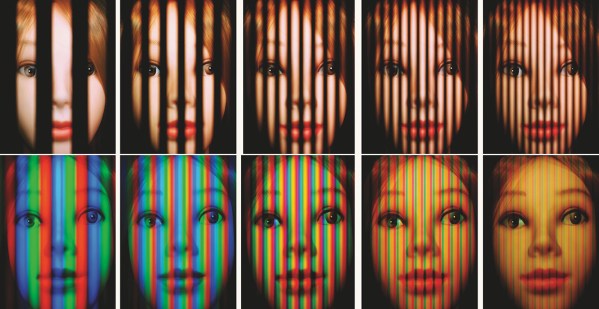

There are a few things to know before cracking open an old CRT and messing with the circuits. Every color CRT has three electron guns, one each for red, green, and blue. These are driven at high voltage, so in a CRT with RGB inputs there is a circuit path that takes those inputs, amplifies them, and sends them to the guns. If the TV only has a composite input, a bit of extra circuitry decodes the composite signal into separate red, green, and blue signals before they reach the guns. In [The 8-Bit Guy]’s TV, and in just about every CRT TV from the mid-to-late ’90s, that decoding is handled by a ‘Jungle IC’, and most of the time that chip also has RGB inputs meant for the on-screen display. By tapping into those inputs, you can add an RGB input with fancy-schmancy RCA jacks on the back.

While the details of adding RGB inputs to a late-’90s CRT will differ slightly from one make and model to the next, the general approach is pretty much the same. It’s really just a little bit of soldering, and then you can sit back and play with old computers that are finally displaying the right colors on a proper screen.