One of our favorite hacker-scavengers on YouTube, [The Post-Apocalyptic Inventor], has been connecting his Raspberry Pi up to nearly every display that he’s got in his well-stocked junk pile. (Video embedded below.)

Modern monitors with an HDMI input connect right up to the Pi. Before HDMI came VGA, which the Pi doesn't support natively. One workaround is to take the composite video signal from the Pi's 4-pole audio jack and feed it into a composite-to-VGA converter. Lacking the right 4-pole audio cable, [TPAI] soldered some RCA plugs directly onto the Pi and plugged them into the converter. On an even older monitor, he faced a SCART connector. If you're European, you'll know these: for this purpose, it's just composite video on a different connector. Good thing he already had a composite video signal on hand.

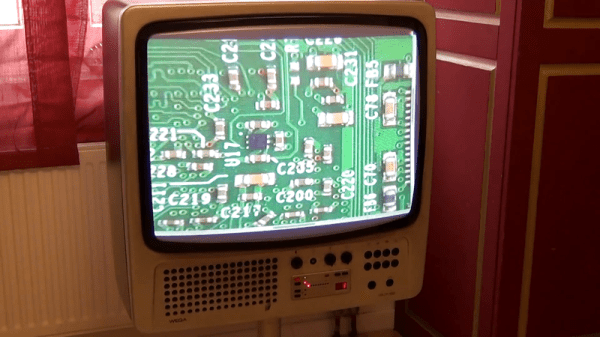

The pièce de résistance, though, was attaching the Pi to his 1980 Vega TV set. It only had an antenna input, so he needed an RF modulator. With a (presumably) infinite supply of junk VCRs on hand, he pulled the RF modulator out of one and got the Pi working with the snazzy retro TV.