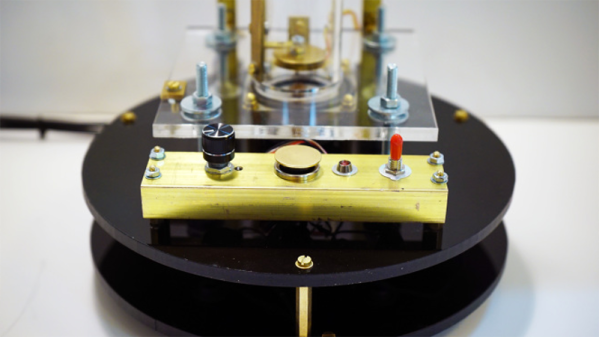

You think you like RGB LEDs? Columbus, OH art professor [Matthew Mohr] has more blinkenlove than you! His airport- or convention-center-scale installation piece is an incredible 850,000 RGB LEDs wrapped around a 14-foot-tall face-shaped sculpture that projection-maps participants’ faces onto the display. To capture images, there is also a purpose-built room with even illumination and a slew of Raspberry Pi cameras to take pictures of the person’s face from many angles simultaneously.

It looks pretty snazzy, but the scale of this thing is just crazy. For instance, if you figure that the usual strip of 60 WS2812s can draw just about 9.6 watts full on (0.16 W per LED), that scales up to 136 kW(!) for the big head. And getting the control signals right? Forgeddaboutit. Prof. [Mohr], if you’re out there, leave us some details in the comments.
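For anyone who wants to check our napkin math, here is a minimal sketch of that estimate. It uses only the figures quoted above (850,000 LEDs, 60 LEDs per strip, 9.6 W per strip); the function and constant names are made up for illustration, and the result is a rough upper bound for stock WS2812 strips, not a measurement of the actual sculpture.

```python
# Back-of-the-envelope power estimate using the figures from the article.
# Assumption: every LED draws the same as one LED on a 60-LED, 9.6 W strip.
LEDS_TOTAL = 850_000      # LED count quoted for the sculpture
LEDS_PER_STRIP = 60       # typical WS2812 strip length used in the estimate
WATTS_PER_STRIP = 9.6     # full-on draw assumed for one such strip


def full_on_power_kw(n_leds, leds_per_strip=LEDS_PER_STRIP,
                     watts_per_strip=WATTS_PER_STRIP):
    """Scale the per-strip draw up to n_leds and return kilowatts."""
    watts_per_led = watts_per_strip / leds_per_strip  # 0.16 W per LED
    return n_leds * watts_per_led / 1000.0


if __name__ == "__main__":
    print(f"Full-on estimate: {full_on_power_kw(LEDS_TOTAL):.0f} kW")
```

Swap in a different per-strip wattage to see how quickly the total moves: the estimate is linear in both the LED count and the per-LED draw.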

(Edit: He did! His website is back up after being DoSed, and it turns out they’re custom LEDs that are even brighter, to compete with daylight in the space.)

What is it with airports and iconic LED art pieces? Does anyone really plan their stopovers to see public art? How many of you will fly through Columbus on purpose now?

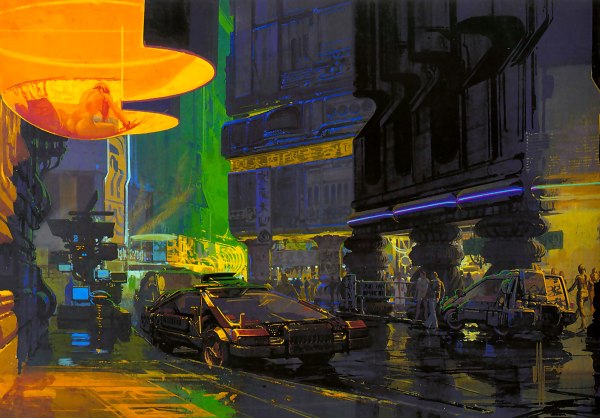
