[Thomas Bitmatta] and two other champion drone pilots visited the Robotics and Perception Group at the University of Zurich. The human pilots accepted the challenge to race drones against Artificial Intelligence “pilots” from the UZH research group.

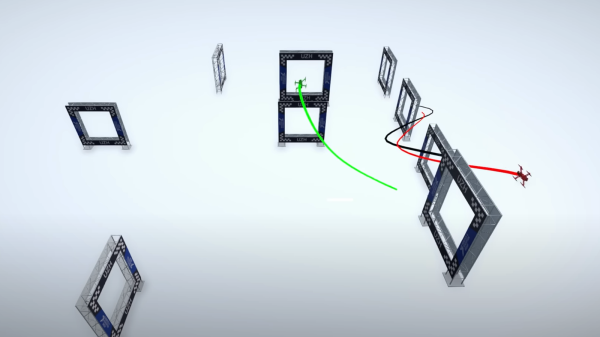

The human pilots took on two different types of AI challengers. The first type leverages 36 tracking cameras positioned above the flight arena. Each camera captures 400 frames per second of video. The AI-piloted drone is fitted with at least four tracking markers that can be identified in the captured video frames. The captured video is fed into a computer vision and navigation system that analyzes the video to compute flight commands. The flight commands are then transmitted to the drone over the same wireless control channel that would be used by a human pilot’s remote controller.
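To get a feel for the scale of that tracking setup, here is a quick back-of-the-envelope sketch using only the camera count and frame rate quoted above. The function name `tracking_budget` is ours for illustration and not part of the UZH system, and the figures say nothing about how the real pipeline schedules its work.

```python
# Figures taken from the article: 36 cameras, 400 frames per second each.
CAMERAS = 36
FPS_PER_CAMERA = 400


def tracking_budget(cameras=CAMERAS, fps=FPS_PER_CAMERA):
    """Return (total frames per second, milliseconds between frames per camera).

    Hypothetical helper for illustration only; it is plain arithmetic on the
    quoted numbers and does not model the actual tracking software.
    """
    frames_per_second = cameras * fps
    frame_interval_ms = 1000.0 / fps
    return frames_per_second, frame_interval_ms


total, interval = tracking_budget()
print(f"{total} frames/s across all cameras, {interval} ms between frames per camera")
```

That works out to 14,400 frames every second in total, with a new frame from each camera every 2.5 ms.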

The second type of AI pilot utilizes an onboard camera and autonomous machine vision processing. The “vision drone” is designed to leverage visual perception from the camera with little or no assistance from external computational power.

Ultimately, the human pilots were victorious over both types of AI pilots. The AI systems do not (yet) robustly accommodate unexpected deviations from optimal conditions. Small variations in operating conditions often lead to mistakes and fatal crashes for the AI pilots.

Both of the AI pilot systems utilize some of the latest research in machine learning and neural networks to learn how to fly a given track. The systems train for a track using a combination of simulated environments and real-world flight deployments. In their final hours together, the university research team invited the human pilots to set up a new course for a final race. In less than two hours, the AI system trained to fly the new course. In the resulting real-world flight, the AI drone's performance was quite impressive and showed great promise for the future of autonomous flight. We’re betting on the bots before long.
