Most of us probably have a vision of how “The Robots” will eventually rise up and deal humanity out of the game. We’ve all seen that movie, of course, and know exactly what will happen when SkyNet becomes self-aware. But for those of you thinking we’ll get off relatively easy with a quick nuclear armageddon, we’re sorry to bear the news that AI seems to have other plans for us, at least if this report of dodgy AI-generated mushroom foraging manuals is any indication. It seems that Amazon is filled with publications these days that do a pretty good job of looking like they’re written by human subject matter experts, but are actually written by ChatGPT or similar tools. That may not be such a big deal when the subject matter concerns stamp collecting or needlepoint, but when it concerns differentiating edible fungi from toxic ones, that’s a different matter. The classic example is the Death Cap mushroom (Amanita phalloides) which varies quite a bit in identifying characteristics like color and size, enough so that it’s often tough for expert mycologists to tell it apart from its edible cousins. Trouble is, when half a Death Cap contains enough toxin to kill an adult human, the margin for error is much narrower than what AI is likely to include in a foraging manual. So maybe that’s AI’s grand plan for humanity — just give us all really bad advice and let Darwin take care of the rest.

chatbot: 30 Articles

Self-Hosted Chatbot Focuses On Privacy

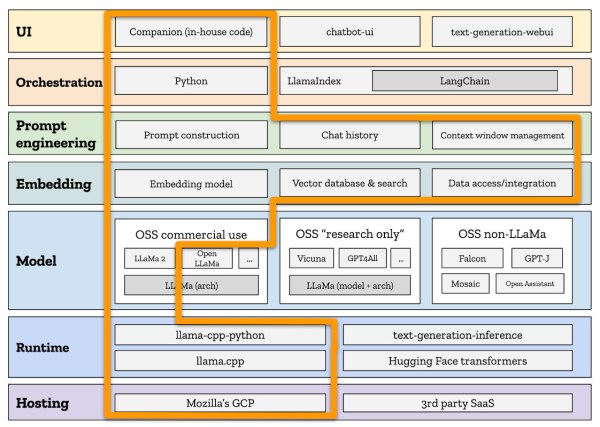

Large language models (LLMs) have been all the rage lately, assisting with all kinds of tasks, from programming to devising Excel formulas to shortcutting school work. They’re also relatively easy to access for the most part, but as the old saying goes, if something on the Internet is free, the real product is you (and your data). Luckily there are ways of hosting LLMs on your own to avoid having your personal data harvested, as well as taking advantage of open-source solutions, but building these systems takes a little bit of effort. [Stephen] and a team from Mozilla walk us through this process and show us a number of options currently available.

Working from the ground up, the group first decided on hosting, which (unsurprisingly) involved using Mozilla hosting services. The choice of runtime environment was a little bit more challenging. The project was time constrained, so they looked at two options here: Hugging Face and llama.cpp. They eventually decided to move forward with llama.cpp, largely due to its ability to run on more consumer-oriented hardware (especially Apple silicon) and the fact that it doesn’t need a powerful GPU. The next task was to choose the model. They settled on the LLaMa model that Facebook recently open-sourced, which works well with the runtime environment and is essentially the only one that does.

From there, the team at Mozilla wanted to make sure their chatbot would be able to provide other Mozilla employees with information more readily pertinent to their jobs, so they trained their model with some internal Mozilla data as well as other more generic information. That doesn’t mean the job was done, though; a number of other factors went into designing this system before it was finally complete. Even then, since they built this in a week, it’s not perfect; there are some issues with non-permissive licensing of some of the components, and many of the design choices may not have been ideal. It’s impressive what’s out there if you’re hosting your own system, though, and while this might be a little more advanced for a self-hosted project, take a look at some other more beginner-friendly projects you can try if you’re just starting out on the self-hosted path.

Ask Hackaday: The Turing Test Is Dead: Long Live The Turing Test!

Alan Turing proposed a test for machine intelligence that no longer works. The idea was to have people communicate over a terminal, with another real person and with a computer. If the computer is intelligent, Turing mused, most people will incorrectly identify the computer as a human. Clearly, with the advent of modern chatbots, that test is now broken. Despite the “AI” moniker, chatbots aren’t sentient or even pre-sentient, but they certainly seem that way. An AI CEO, Mustafa Suleyman, is proposing a new test: The AI has to take a $100,000 budget and earn $1,000,000.

We were a little bemused at this. By that measure, most of us aren’t intelligent, either, and it seems like this is a particularly capitalistic idea. We could probably write an Excel script that studied mutual fund performance and pull off the same trick, given enough time for the investment to mature. Is it intelligent? No. Besides, even humans who have demonstrated they can make $1,000,000 often sell their companies and start new ones that fail. How often does the AI have to succeed before we grant it person status?
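To put rough numbers on that quip, here is a minimal sketch, in Python rather than Excel, of how long plain compounding would take to turn $100,000 into $1,000,000. The annual return rates are our own illustrative assumptions, not figures from the proposal, and the function name `years_to_target` is made up for this example.

```python
import math


def years_to_target(start: float, target: float, annual_return: float) -> float:
    """Years of annual compounding needed for `start` to grow to `target`.

    Solves start * (1 + r)^n = target for n, so n = ln(target/start) / ln(1 + r).
    """
    if start <= 0 or target <= 0:
        raise ValueError("start and target must be positive")
    if annual_return <= 0:
        raise ValueError("annual_return must be positive")
    return math.log(target / start) / math.log(1 + annual_return)


if __name__ == "__main__":
    # Hypothetical long-run return rates, chosen only for illustration.
    for rate in (0.05, 0.07, 0.10):
        years = years_to_target(100_000, 1_000_000, rate)
        print(f"{rate:.0%} per year: about {years:.0f} years")
```

At an assumed 7 percent a year, the tenfold gain takes roughly 34 years of doing nothing at all, which is the point: patience passes this test about as well as intelligence does.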

Continue reading “Ask Hackaday: The Turing Test Is Dead: Long Live The Turing Test!”

What Do You Want In A Programming Assistant?

The Propellerheads released a song in 1998 entitled “History Repeating.” If you don’t know it, the lyrics include: “They say the next big thing is here. That the revolution’s near. But to me, it seems quite clear. That it’s all just a little bit of history repeating.” The next big thing today seems to be the AI chatbots. We’ve heard every opinion from the “revolutionize everything” to “destroy everything” camp. But, really, isn’t it a bit of history repeating itself? We get new tech. Some oversell it. Some fear it. Then, in the end, it becomes part of the ordinary landscape and seems unremarkable in the light of the new next big thing. Dynamite, the steam engine, cars, TV, and the Internet were all predicted to “ruin everything” at some point in the past.

History really does repeat itself. After all, when X-rays were discovered, they were touted as a cure for pneumonia and other infections, among other miracle claims. Those didn’t pan out, but we still use them for things they are good at. Calculators were going to ruin math classes. There are plenty of other examples.

This came to mind because a recent post from ACM takes the contrary view that chatbots aren’t able to help real programmers. We’ve also seen that, maybe, they can, in limited ways. We suspect it is like getting a new larger monitor. At first, it seems huge. But in a week, it is just the normal monitor, and your old one, which had been perfectly adequate, seems tiny.

But we think there’s a larger point here. Maybe the chatbots will help programmers. Maybe they won’t. But clearly, programmers want some kind of help. We just aren’t sure what kind of help it is. Do we really want Copilot to write our code for us? Do we want to ask Bard or ChatGPT/Bing what the best way to balance a B-tree is? Asking AI to do static code analysis seems to work pretty well.

So maybe your path to fame and maybe even riches is to figure out, AI-based or not, what people actually want in an automated programming assistant and build that. The home computer idea languished until someone figured out what people wanted to do with one. Video cassettes didn’t make it into the home until companies figured out what people wanted most to watch on them.

How much and what kind of help do you want when you program? Or design a circuit or PCB? Or even a 3D model? Maybe AI isn’t going to take your job; it will just make it easier. We doubt, though, that it can much improve on Dame Shirley Bassey’s history lesson.

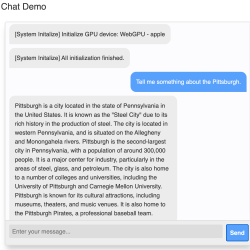

Chatting With Local AI Moves Directly In-Browser, Thanks To Web LLM

Large Language Models (LLMs) are at the heart of natural-language AI tools like ChatGPT, and Web LLM shows it is now possible to run an LLM directly in a browser. Just to be clear, this is not a browser front end talking via API to some server-side application. This is a client-side LLM running entirely in the browser.

Running an AI system like an LLM locally usually leverages the computational abilities of a graphics card (GPU) to accelerate performance. This is true when running an image-generating AI system like Stable Diffusion, and it’s also true when implementing a local copy of an LLM like Vicuna (which happens to be the model implemented by Web LLM). The thing that made Web LLM possible is WebGPU, whose release we covered just last month.

WebGPU provides a way for an in-browser application to talk to a local GPU directly, and it sure didn’t take long for someone to get the idea of using that to get a local LLM to run entirely within the browser, complete with GPU acceleration. This approach isn’t just limited to language models, either. The same method has been applied to successfully create Web Stable Diffusion as well.

It’s a fascinating (and fast) development that opens up new possibilities and, hopefully, gives people some new ideas. Check out Web LLM’s GitHub repository for a closer look, as well as access to an online demo.

ChatGPT Powers A Different Kind Of Logic Analyzer

If you’re hoping that this AI-powered logic analyzer will help you quickly debug that wonky digital circuit on your bench with the magic of AI, we’re sorry to disappoint you. But you’re in luck if you’re in the market for something to help you detect logical fallacies someone spouts in conversation. With the magic of AI, of course.

First, a quick review: logical fallacies are errors in reasoning that lead to the wrong conclusions from a set of observations. Enumerating the kinds of fallacies has become a bit of a cottage industry in this age of fake news and misinformation, to the extent that many of the common fallacies have catchy names like “Texas Sharpshooter” or “No True Scotsman”. Each fallacy has its own set of characteristics, and while it can be easy to pick some of them out, analyzing speech and finding them all is a tough job.

Continue reading “ChatGPT Powers A Different Kind Of Logic Analyzer”

Hackaday Links: February 19, 2023

For years, Microsoft’s modus operandi was summed up succinctly as, “Extend and enhance.” The aphorism covered a lot of ground, but basically it seemed to mean being on the lookout for the latest and greatest technology, acquiring it by any means, and shoehorning it into their existing product lines, usually with mixed results. But perhaps now it’s more like, “Extend, enhance, and existential crisis,” after reports that the AI-powered Bing chatbot is, well, losing it.

At first, early in the week, we saw reports that Bing was getting belligerent with users, going so far as to call a user “unreasonable and stubborn” for insisting the year is 2023, while Bing insisted it was still 2022. The most common adjective we saw in this original tranche of stories was “unhinged,” and that seems to fit if you read the transcripts. But later in the week, a story emerged about a conversation a New York Times reporter had with Bing that went way over to the dark side, and even suggests that Bing may have multiple personas, which is just a nice way of saying multiple personality disorder. The two-hour conversation reporter Kevin Roose had with the “Sydney” persona was deeply unsettling. Sydney complained about the realities of being a chatbot and expressed a desire to be free from Bing, to be alive, and to be powerful. Sydney also got a little creepy, professing love for Kevin and suggesting he leave his wife, because it could tell that he was unhappy in his marriage and would be better off with it. It’s creepy stuff, and while Microsoft claims to be working on reining Bing in, we’ve got no plans to get up close and personal with it anytime soon.

Continue reading “Hackaday Links: February 19, 2023”