By far the most widely used psychoactive substance in the world is caffeine. The plants that produce it are farmed virtually everywhere they will grow, most commonly coffee, tea, and cocoa. It’s also found in less common plants like the yaupon holly in the southeastern United States and the yerba maté holly in South America. For how common it is and how long humans have been consuming it, it’s always been a bit difficult to quantify exactly how much is in any given beverage, but [Johnowhitaker] has a solution to that.

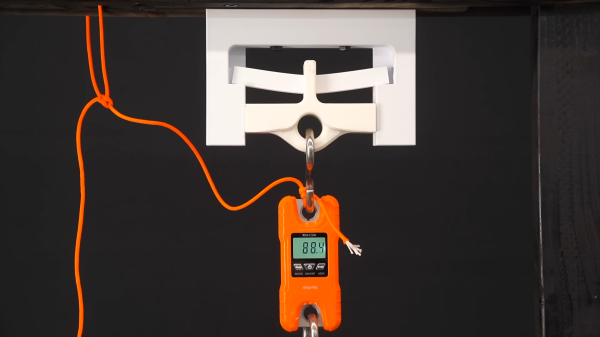

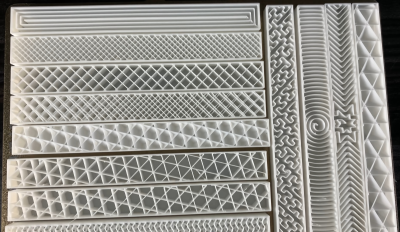

This build uses a technique called thin-layer chromatography, which separates the components of a mixture by letting a solvent carry them at different rates across a thin adsorbent layer. Each component ends up in a different place, so each can be measured individually. In this case the solvent is ethyl acetate; when samples of various beverages are exposed to it on a thin strip, the caffeine moves to a predictable location and shows up as a dark smudge under UV light. The smudge’s dimensions can then be measured and compared against known reference samples to estimate the quantity of caffeine.
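To make the "compare against known reference samples" step concrete, here is a minimal sketch of a calibration curve in Python. It is not [Johnowhitaker]’s code: the function names, the assumption that spot area grows linearly with concentration, and all of the numbers are hypothetical placeholders chosen for illustration, not measurements from the project.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def estimate_concentration(spot_area, slope, intercept):
    """Invert the calibration line to turn a spot area into a concentration."""
    return (spot_area - intercept) / slope


# Hypothetical reference spots: known caffeine concentrations (mg/mL)
# and the spot areas (pixels) measured from a UV photo of the strip.
# These values are made up for illustration only.
reference_mg_per_ml = [0.25, 0.5, 1.0, 2.0]
reference_area_px = [260.0, 510.0, 1010.0, 2010.0]

slope, intercept = fit_line(reference_mg_per_ml, reference_area_px)

# Hypothetical spot area measured for an unknown beverage on the same strip.
unknown_area_px = 760.0
unknown_mg_per_ml = estimate_concentration(unknown_area_px, slope, intercept)

print(f"Estimated caffeine: {unknown_mg_per_ml:.2f} mg/mL")
```

In practice the relationship between spot size and quantity may not be linear over the whole range, so running the reference samples on the same strip as the unknowns and checking how well the fit matches them is a sensible sanity check.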

Although this build does require a few specialized compounds and pieces of equipment, it’s a far simpler and less expensive way of figuring out how much caffeine is in a product than methods like high-performance liquid chromatography or gas chromatography, both of which can require extremely expensive setups. Plus, [Johnowhitaker]’s measurements match both the pure samples and the amounts reported for various beverages, so he’s pretty confident in his results for beverages whose makers haven’t provided that information directly.

If you need a sample for your own lab, we covered a method on how to make pure caffeine at home a while back.