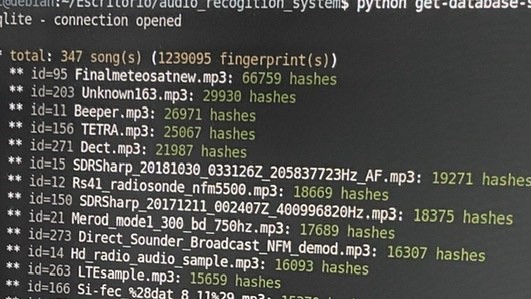

In the realm of computer science, it’s hard to go too far without encountering hashing or hash functions. The concept appears throughout security, from encryption to password storage to cryptocurrency, and more generally whenever large or complex data must be efficiently mapped to a smaller, fixed-size set. With a hash table, a computer can jump almost directly to where a piece of data should be instead of searching through everything it has stored, which can be incredibly powerful when mastered. [Malte] did some investigation into hash functions and found a method called Fibonacci hashing that seems to have been largely forgotten, yet speeds up this lookup process even further.

In a typical hashing operation, the data is first transformed into a number, and part of that number is then used to pick the specific location where the data is stored. That second step is often done with an integer modulo operation. The problem with any such mapping is that two different pieces of data can end up with the same value after the modulo is performed, which places both of them at the same location. The Fibonacci hash replaces the modulo with a multiplication by a constant derived from the golden ratio, keeping only the top bits of the product as the location. According to [Malte], this results in many fewer collisions while also being much faster. It also appears to use the smaller fixed-size set more evenly as a consequence of being based around Fibonacci numbers, as long as the input data doesn’t itself contain a large number of Fibonacci numbers.
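To make the difference concrete, here is a minimal sketch of the two mapping steps side by side. It assumes 64-bit hash values and a table whose size is a power of two; the function names `modulo_index` and `fibonacci_index` are made up for illustration and are not taken from [Malte]’s code. The constant is 2^64 divided by the golden ratio, rounded to an odd integer.

```python
# 2**64 / golden ratio, rounded to the nearest odd integer (0x9E3779B97F4A7C15).
FIB_MULT = 11400714819323198485
MASK64 = (1 << 64) - 1  # emulate 64-bit unsigned wraparound in Python


def modulo_index(hash_value: int, table_size: int) -> int:
    """Classic mapping: the remainder picks the slot."""
    return hash_value % table_size


def fibonacci_index(hash_value: int, bits: int) -> int:
    """Fibonacci mapping into a table of 2**bits slots (assumes 1 <= bits <= 64).

    Multiply by the golden-ratio constant, let the product wrap at 64 bits,
    then keep only the top `bits` bits as the slot number.
    """
    return ((hash_value * FIB_MULT) & MASK64) >> (64 - bits)


if __name__ == "__main__":
    # Keys that are all multiples of the table size: modulo puts every one
    # of them in slot 0, while the Fibonacci mapping uses several slots.
    keys = [8 * k for k in range(1, 9)]
    print([modulo_index(k, 8) for k in keys])
    print([fibonacci_index(k, 3) for k in keys])

    # Consecutive keys land in eight different slots.
    print([fibonacci_index(k, 3) for k in range(1, 9)])  # [4, 1, 6, 3, 0, 5, 2, 7]
```

The multiply-and-shift needs no division, which is a plausible source of the speed advantage over a general integer modulo. A bit mask (`hash_value & (table_size - 1)`) is just as cheap, but it only looks at the low bits of the hash, whereas the multiplication mixes every input bit into the top bits that get kept.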

Going through the math in [Malte]’s write-up shows that, at least for the mapping part of a hash function, the Fibonacci hash performs much better than integer modulo. Some of the comments mention that it’s a specific case of a more general method called multiplicative hashing. Either way, it might be worth a look for anyone using hash functions in their code. [Malte] admits to not knowing everything about this branch of computer science, but still goes into an incredible amount of depth about this specific method. If you’re more of a newcomer to this topic, take a look at this person who put an enormous bounty on a bitcoin wallet, which shows why reverse-hashing is so hard.